DOI: 10.31038/NRFSJ.2022524

Abstract

Background: Although adequate nutrition and good health are known to promote academic success, tuition and non-tuition expenses often force most students to change their eating patterns after starting tertiary education. The unresolved dilemma is that high rates of obesity exist alongside high rates of hunger on campuses in Jamaica. This study among students in three tertiary institutions in Jamaica examined the risk factors for weight gain and weight loss. More importantly, the aim was to determine whether these weight changes among students affected their academic performance.

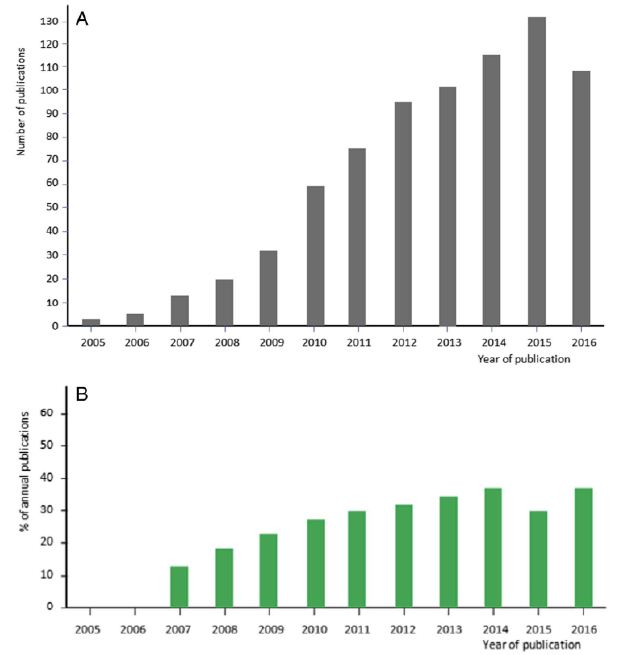

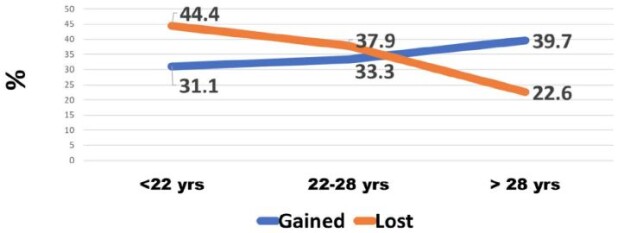

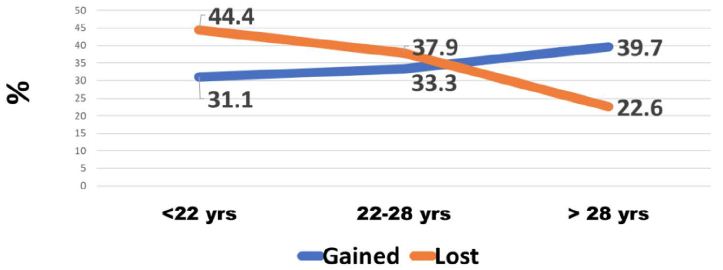

Results: While overall weight gain and weight loss were similar (34-37%), older students experienced more weight gain (39.7%) and males had more weight loss (41.7%). Significantly fewer fully employed students (24.6%) lost weight than those partially employed (43.9%) or those with no job (43.2%). Disordered eating was high (39.2%) and was associated mainly with weight loss. Lower GPA scores were correlated with weight loss. Key independent factors related to weight change were age, gender, disordered eating, the amount and type of food consumed, depression and anxiety.

Conclusion: The large proportion of students with weight change cannot be ignored by campus administrators. Ongoing programs are clearly not sufficient to halt these changes. For tertiary institutions to meet their education mandate, authorities must provide an enabling environment for students at risk of major weight changes. Policies and programs such as regular screening of students, and education to impart relevant nutritional knowledge and improve practices, are vital to promote student health and ultimately their academic success.

Keywords

Weight change, Eating disorders, Risk factors, Policies, Higher education

Introduction

University life is a critical period for cementing healthy and sustainable eating habits [1]. Not only do adequate nutrition and good health promote academic success, but in the long run they also reduce government expenditure on management and treatment of chronic diseases. In Jamaica, chronic disease prevalence has increased steadily among the adult population, and for the last three decades chronic diseases have been responsible for most illness and deaths. In addition, the total economic burden on individuals has been estimated at over US$600 million [2].

While recent research has focused on “the Freshman 15”, a term used to describe the rapid and dramatic increase in weight of college students [3], there is also evidence that food insecurity significantly affects students [4,5]. This study therefore examined the apparent paradox between weight gain and weight loss among tertiary students in Jamaica.

National surveys in Jamaica show that the prevalence of overweight/obesity among adults rose from 34% in 2000 to 49% in 2008 and 54% in 2016 [6]. This trend is astounding: a rise of 20 percentage points, or a 59% relative increase, in 16 years. It clearly calls for bold and sustained corrective action. Further, other surveys have documented an increase in the major behavioural risk factors and in NCDs such as diabetes, hypertension, and obesity among adults [7]. In 2017, PAHO estimated that 78% of all deaths are caused by NCDs. Of all NCD deaths, 30% occurred prematurely, between 30 and 70 years of age [8]. Coupled with such high rates of chronic disease is the fact that almost half (46.0%) of the adult population are classified as having low physical activity and approximately 99.0% of adults consume well below the daily recommended portions of fruits and vegetables [6].

Graduates from tertiary institutions are expected to be the main drivers of sustainable development. These institutions should therefore obtain a better understanding of food insecurity and eating behaviours among students. Such factors have the potential to influence academic performance, student retention and graduation rates, and understanding them also allows institutions to provide key evidence needed to advocate for and develop policies at the national and regional levels [5].

Methods

A quantitative survey was used to capture the dynamics that can affect student eating behavior and to gain insights into the mechanism through which weight change can harm student academic performance. Three tertiary institutions participated in this self-report study: the University of Technology, Jamaica, the University of the Commonwealth Caribbean and Shortwood Teachers’ College. About 300 students from each of these institutions were randomly selected to participate. Efforts were made to stratify by faculty in each institution. A pilot test of the questionnaire was done on 20 students at different levels to assess clarity and understanding. The test results were used to modify the structure and content of questions where necessary. To encourage maximum honesty and protect confidentiality, the students were not required to give their names, identification numbers, or any information that could be traced to them individually. After ethical clearances and permissions were obtained from the university authorities, coordinators from each institution were assigned to administer the questionnaire. No payment was given to the students for completing the questionnaire. Student responses were scrutinized for completeness and quality. Analysis was planned to reveal several descriptors of weight gain and weight loss. Independent factors were identified using weight gain and weight loss as dependent variables.
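The sampling step described above (random selection of about 300 students per institution, stratified by faculty) can be illustrated with a minimal sketch. The paper does not report how the sample was allocated across faculties, so proportional allocation is an assumption here, and the roster, faculty names and the `stratified_sample` helper are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(students, target_size, seed=None):
    """Draw a random sample stratified by faculty, with proportional allocation.

    `students` is a list of (student_id, faculty) tuples (hypothetical roster format).
    Returns a dict mapping each faculty to the list of sampled student ids.
    """
    rng = random.Random(seed)
    by_faculty = defaultdict(list)
    for student_id, faculty in students:
        by_faculty[faculty].append(student_id)

    total = len(students)
    sample = {}
    for faculty, ids in by_faculty.items():
        # Assumption: each faculty contributes in proportion to its enrolment.
        quota = min(len(ids), round(target_size * len(ids) / total))
        sample[faculty] = rng.sample(ids, quota)  # sampling without replacement
    return sample


# Hypothetical roster for one institution with three faculties.
roster = (
    [(f"ENG{i:04d}", "Engineering") for i in range(1200)]
    + [(f"HSC{i:04d}", "Health Sciences") for i in range(900)]
    + [(f"EDU{i:04d}", "Education") for i in range(900)]
)

sample = stratified_sample(roster, target_size=300, seed=42)
sizes = {faculty: len(ids) for faculty, ids in sample.items()}
print(sizes)
```

With rounding, the per-faculty quotas may not always sum exactly to the target; a real design would need a rule for distributing the remainder.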

Results

Analysis of data from the three institutions combined showed that the proportion of students who reportedly gained weight (34.0%) was close to the proportion who lost weight (36.9%). However, weight change differed starkly across several variables.

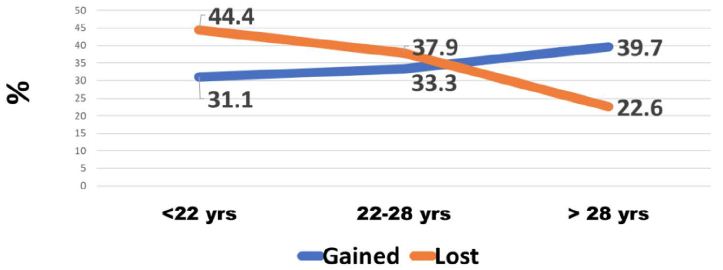

Figure 1 shows that among those less than 22 years old, 44.4% lost weight and 31.1% gained weight. In the 22-28-year-old category, more students also reported that they had lost weight (37.9%) compared to those who had gained (33.3%). In the over 28 years old category, however, more reported that they had gained weight (39.7%) and 22.6% lost weight.

Figure 1: Weight change by age of tertiary students

Among males, the greatest percentage (41.7%) reported that they had lost weight while 24.6% had gained weight. Among females, 36.6% had gained weight and 35.4% had lost weight.

Among students with full-time employment, 35.5% reported that they had gained weight while 24.6% reported that they had lost weight. Among students who were employed part-time, the greatest percentage (43.9%) had lost weight, while 35.1% had gained weight. A larger proportion of unemployed students (43.2%) reported that they had lost weight while 32.5% had gained weight (Table 1).

Table 1: Relationship between Employment Status and Weight Change

| Weight Change / Employment | Full Time | Part Time | No Job |
|---|---|---|---|
| Gained (%) | 35.5 | 35.1 | 32.5 |
| Lost (%) | 24.6 | 43.9 | 43.2 |
| No Change (%) | 39.9 | 21.1 | 24.3 |
| Total | 338 | 171 | 465 |

P<.001
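The P value reported under Table 1 can be checked approximately from the published figures. The sketch below assumes a Pearson chi-square test of independence, which the paper does not name, and reconstructs cell counts from the rounded percentages and column totals, so the counts are estimates rather than the authors' raw data. The critical value 18.467 is the standard chi-square threshold for α = 0.001 with 4 degrees of freedom.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic for a contingency table (list of rows of counts)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    return stat


# Percentages (gained, lost, no change) and group sizes as published in Table 1.
percentages = {
    "Full Time": (35.5, 24.6, 39.9),
    "Part Time": (35.1, 43.9, 21.1),
    "No Job": (32.5, 43.2, 24.3),
}
group_sizes = {"Full Time": 338, "Part Time": 171, "No Job": 465}

# Estimated counts: the published percentages are rounded, so these are approximations.
counts = [
    [round(pct / 100 * group_sizes[group]) for pct in pcts]
    for group, pcts in percentages.items()
]

stat = chi_square_statistic(counts)
dof = (len(counts) - 1) * (len(counts[0]) - 1)  # (3 - 1) * (3 - 1) = 4
CRITICAL_0_001_DF4 = 18.467  # chi-square critical value for alpha = 0.001, df = 4
significant = stat > CRITICAL_0_001_DF4
print(f"chi-square = {stat:.2f}, df = {dof}, exceeds 0.001 threshold: {significant}")
```

Under these assumptions the statistic exceeds the critical value, which is consistent with the reported P<.001.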

As expected, among students who ate three or more times per day, more gained weight (40.8%) than lost weight (18.9%). In contrast, most students who reported that they ate once per day had lost weight (54.0%) while 27.7% had gained weight (Table 2).

Table 2: Relationship between Meal Frequency and Weight Change

| Weight Change / Meals per day | 3+ | 2 | 1 |
|---|---|---|---|
| Gained (%) | 40.8 | 33.9 | 27.7 |
| Lost (%) | 18.9 | 37.0 | 54.0 |
| No Change (%) | 40.3 | 29.1 | 18.3 |
| Total | 201 | 570 | 202 |

P<.001

Among all students, 39.2% indicated that their eating changes were due to a disorder. Of those who reported a disorder, 41.6% had lost weight, 37.4% had gained weight and 21.1% had no change in weight. Among those whose consumption changes were not due to a disorder, the differences were smaller: 34.6% reported no weight change, 33.7% reportedly lost weight and 31.7% indicated they had gained weight (Table 3).

Table 3: Changes in eating habits due to a disorder and its relation to weight change

| Weight Change / Eating changed due to disorder | Yes | No |
|---|---|---|
| Gained (%) | 37.4 | 31.7 |
| Lost (%) | 41.6 | 33.7 |
| No Change (%) | 21.1 | 34.6 |
| Total | 380 | 590 |

P<.05

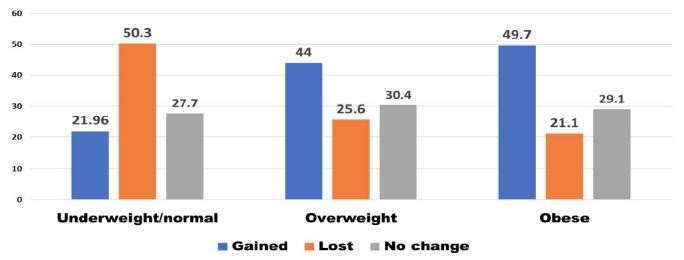

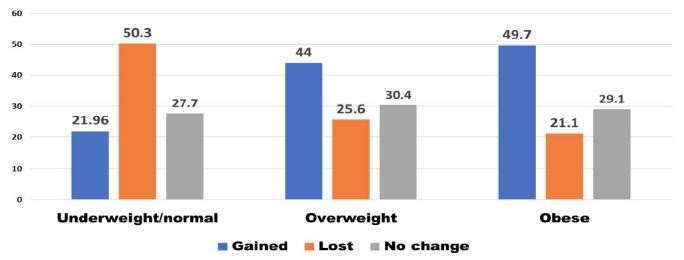

Figure 2 shows that of those who were undernourished or normal, 50.3% said they lost weight. Of those overweight, 44% said they gained weight. Of those who were obese 49.7% said they gained weight.

Figure 2: Weight change according to weight status

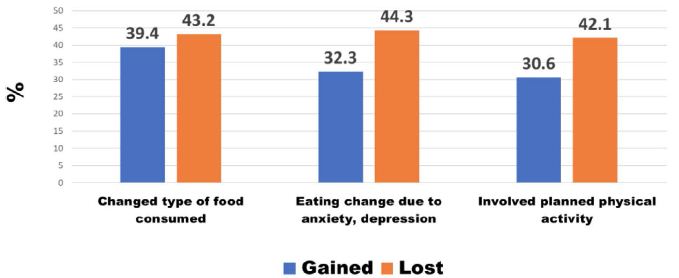

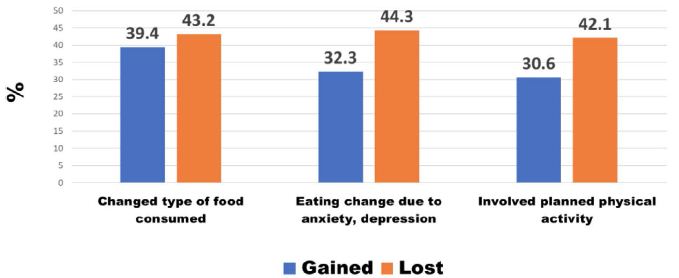

Figure 3 shows that when the type of food changed there was an overall loss in weight. Students were also asked whether anxiety and stress caused changes in their consumption habits. Most students indicated this was the case (59.9%). Of these, 44.3% reported that they had lost weight and 32.3% had gained weight. Of those whose eating had not changed because of stress and anxiety, 36.7% gained weight and 25.6% reportedly had lost weight.

Figure 3: Other Causes of weight change

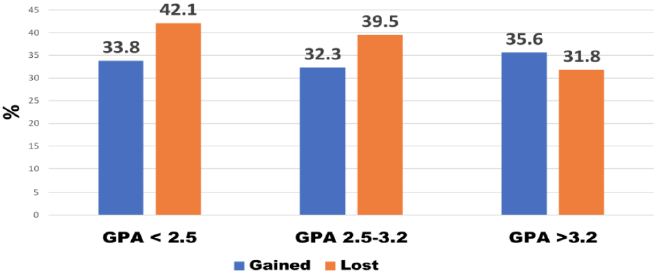

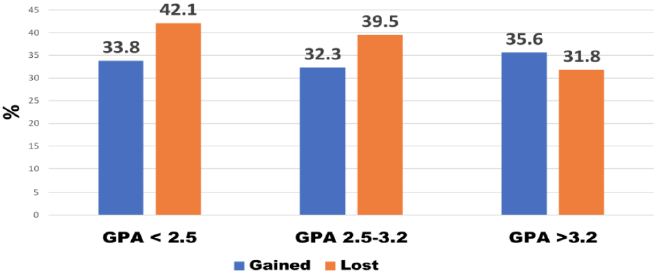

A minority of students (39.3%) indicated that they were involved in planned physical activity. Among these students, 42.1% reported that they had lost weight and 30.6% had gained weight (Figure 3). The duration of physical activity was categorized as less than 1 hour and 1-2 hours per week. There was no difference in weight change according to the duration of physical activity. Most students reported that they were not involved in planned physical activity (60.7%). Of these, 35.9% reportedly gained weight, 33.6% lost weight and 30.5% experienced no change in weight.

The grade point averages (GPAs) for students were used to denote academic performance. Figure 4 shows that students with lower GPAs (<3.2) experienced more weight loss than the high achievers (GPA >3.2).

Figure 4: Weight change in relation to academic performance (GPA)

The likelihood ratio test was used to identify the independent factors related to weight gain and weight loss. Table 4 shows that full-time employment was the main factor related to weight gain, whereas eating one meal per day was the main factor related to weight loss.

Table 4: Independent factors related to weight change

| Weight gain vs. no change | Weight loss vs. no change |
|---|---|
| Full-time employed student (p<.001) | 1 vs. 3 meals per day (p<.001) |
| Older student (p<.05) | Depression & anxiety (p<.05) |
| Female student (p<.05) | Type of food consumed (p<.05) |
| Eating disorder (p<.05) | |
| Type of food consumed (p<.05) | |
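For readers unfamiliar with the likelihood ratio test, its general form is sketched below. The paper does not specify the underlying model; the contrasts in Table 4 (weight gain vs. no change, weight loss vs. no change) suggest a multinomial logistic regression with "no change" as the reference category, but that is an assumption. The symbols $L_{\text{full}}$, $L_{\text{reduced}}$ and $k$ are introduced here for illustration only.

$$
G^2 = -2\left[\ln L_{\text{reduced}} - \ln L_{\text{full}}\right] \;\sim\; \chi^2_{k}
$$

Here $L_{\text{full}}$ is the maximized likelihood of the model containing the factor under test, $L_{\text{reduced}}$ is the maximized likelihood of the model without it, and $k$ is the number of parameters removed. Under the null hypothesis that the factor has no effect, $G^2$ approximately follows a chi-square distribution with $k$ degrees of freedom in large samples, so a large $G^2$ (small p-value) marks the factor as independently related to the outcome.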

Discussion

Previous studies in Jamaica revealed high levels of food insecurity among university students [9]. The natural hypothesis from this observation is that it will express itself as weight loss. However, with obesity on the rise in Jamaica, that hypothesis needed to be tested. Weight gain among students who are food insecure may be the result of poor food choices, lifestyle habits (harmful use of alcohol or lack of engagement in physical activity) or the body’s response to stress including finances, academic pressures and work life. While evidence from the Caribbean is sparse and mostly anecdotal, a review of the literature shows that in several countries a double-burden of sorts exists – both food insecurity and overweight/obesity, particularly among women and youth [10].

Evidence from other parts of the world suggests that university students consume more unhealthy foods (processed foods with high total and saturated fats) and have lower intakes of fresh fruit and vegetables [10-12]. Such behaviours may carry on to adulthood thereby contributing to the burden of disease seen among middle- and older-aged adults at the population level.

The self-reported weight changes in this study indicate significantly increasing weight gain with age. This is consistent with the observation that the 18-29 year-old age group is often viewed as being at high risk for weight-related behaviour change as the transition from adolescence to adulthood is made and there is more freedom regarding the type and amount of food consumed [11]. Even when students are aware of the health consequences of overconsumption of unhealthy foods, food choices have been shown to be heavily influenced by convenience and taste [12].

Most published studies have focused specifically on weight gain, but this study shows that weight loss is relatively high, particularly among males. Globally, studies examining sex-related changes in weight have shown that both males and females experience weight changes when they enter university with the greatest changes in weight taking place in the first semester [13].

This study found higher weight gain among students fully employed. Working full time has been thought to be associated with unhealthy eating and consequently weight gain [14].

Research shows that stress can result in changes in food intake patterns and, among university students, has been linked to maladaptive eating behaviours such as consumption of unhealthy foods and overeating [15]. In fact, there is an apparent link between age and the types of foods that are viewed as ‘comfort foods’ and used to cope with stressors, with younger persons seeming to prefer snack-related foods such as ice cream, candy, and sweet breads [16]. As university enrolment often results in major life changes, for example new living arrangements, new social situations and increased academic and time demands, stress and its consequent effect on dietary habits may be commonplace [16].

As expected in this study, students who ate more meals gained more weight. But this relationship is complex. Meal frequency plays an important role not just in academic achievement but also in long-term health consequences. The omission of one or more meals from the diet has been linked to poorer diet quality, increased risk of abdominal adiposity and increased BMI [17-21]. Reasons for meal skipping among university students include a lack of hunger, depression, lack of time, lack of money, and lack of cooking skills [22,23].

The cost of food has been shown to be a key factor that influences what students purchase [24]. Foods that are relatively cheaper also tend to be high in salt, sugar, fat and flavour additives, which have been identified as contributory factors to the obesity epidemic. Disordered eating is associated with weight gain, overweight and obesity among adolescents and young adults [25,26]. The high percentage of disordered eating among Jamaican students is worrisome, and in this study it was associated mainly with weight loss. Studies have shown that disordered eating is more prevalent among those who may be experiencing feelings of anxiety, loneliness and stress, all of which are common among university students [27].

Among university students, research shows that physical activity levels continue to decline while engagement in sedentary activities continues to increase [28]. This study shows that only a minority of students were involved in planned physical activity. Several factors have been proposed to explain this decline: university students have greater control of their daily lives and are not mandated to participate in physical activity; place of residence (on- or off-campus); time demands (including time spent on social media); and access to facilities where physical activities are offered or where physical activity can be engaged in safely [29]. Data from the United Kingdom suggest that up to 60% of university students are not meeting physical activity recommendations [30].

Campus administration has an obligation to equip students not only with knowledge for the world of work but also for a healthy lifestyle, which is integrally linked to work performance. Efforts can include: (1) screening students at the start of each school year for food insecurity to gauge the type and quantity of support that is required; (2) collaborating with food manufacturers and supermarkets for food donations to university/college campuses; (3) conducting an interactive “Cooking on a Budget” program during the semester that teaches students how to cook quick, cheap, and healthy meals; (4) a meal program that provides a student space offering coffee/tea, affordable and healthy snacks, sandwiches, etc.; and (5) a pantry program: a student-run pantry, stocked with toiletries and grocery vouchers, that students in need can access.

Acknowledgment

We thank the University of Technology, Jamaica, for providing funding through the Research Development Fund, managed by the University’s Research Management Office, the School of Graduate Studies, Research & Entrepreneurship. Gratitude is expressed to Mr. Kevin Powell (University of the Commonwealth Caribbean) and Ms. Ava-Marie Reid (Shortwood Teachers’ College), who coordinated the data collection at their respective institutions.

References

- Kim HS, Ahn J, No JK (2012) Applying the Health Belief Model to college students’ health behavior. Nutrition Research and Practice 6: 551-558.

- Ministry of Health and Wellness Jamaica (2013) National strategic and action plan for the prevention and control of NCDs in Jamaica 2013-2018. Kingston, Jamaica. Retrieved from http://moh.gov.jm/wp-content/uploads/2015/05/National-Strategic-and-Action-Plan-for-the-Prevention-and-Control-Non-Communicable-Diseases-NCDS-in-Jamaica-2013-2018.pdf

- Sharif MR, Sayyah M (2018) Assessing physical and demographic conditions of freshman “15” male medical students. International Journal of Sport Studies for Health 1: e67421.

- Freudenberg N, Goldrick-Rab S, Poppendieck J (2019) College students and SNAP: The new face of food insecurity in the United States. American Journal of Public Health 109: 1652-1658.

- Payne-Sturges DC, Tjaden A, Caldeira KM, Vincent KB, Arria AM (2018) Student hunger on campus: Food insecurity among college students and implications for academic institutions. American Journal of Health Promotion 32: 349-354.

- JHLS (2000) Jamaica Health and Lifestyle Survey. Ministry of Health 2000.
  JHLS (2008) Jamaica Health and Lifestyle Survey. Ministry of Health 2008.
  JHLS (2018) Jamaica Health and Lifestyle Survey. Ministry of Health 2018.

- Ministry of Health (2013) National Strategic and Action Plan for the Prevention and Control Non-Communicable Diseases (NCDs) in Jamaica 2013-2018. Retrieved from https://www.moh.gov.jm/wp-content/uploads/2015/05/National-Strategic-and-Action-Plan-for-the-Prevention-and-Control-Non-Communicable-Diseases-NCDS-in-Jamaica-2013-2018.pdf

- PAHO (2017) Regional mortality estimates 2000-2015. PAHO 2017.

- Henry FJ, Nelson M, Aarons R (2020) Learning on empty. In University Student Life and learning: Challenges for Change. Ed: Fitzroy J. Henry, University of Technology, Jamaica Press 102-112.

- Au L, Zhu S, Ritchie L, Nhan L, Laraia B, et al. (2019) Household Food Insecurity Is Associated with Higher Adiposity Among US Schoolchildren Ages 10–15 Years (OR02-05-19). Current Developments in Nutrition 149: 1642-1650.

- Nelson MC, Story M, Larson NI, Neumark-Sztainer D, Lytle LA (2008) Emerging adulthood and college-aged youth: an overlooked age for weight-related behavior change. Obesity 16: 2205-2211.

- Abraham S, Noriega B, Shin J (2018) College students eating habits and knowledge of nutritional requirements. Journal of Nutrition and Human Health 106: 46-53.

- Lloyd-Richardson EE, Bailey S, Fava JL, Wing R, Network TER (2009) A prospective study of weight gain during the college freshman and sophomore years. Preventive Medicine 48: 256-261.

- Escoto KH, Laska MN, Larson N, Neumark-Sztainer D, Hannan PJ (2012) Work hours and perceived time barriers to healthful eating among young adults. American Journal of Health Behavior 36: 786-796.

- Lyzwinski LN, Caffery L, Bambling M, Edirippulige S (2019) The mindfulness app trial for weight, weight-related behaviors, and stress in university students: randomized controlled trial. JMIR mHealth and uHealth 7: e12210.

- Kandiah J, Yake M, Jones J, Meyer M (2006) Stress influences appetite and comfort food preferences in college women. Nutrition Research 26: 118-123.

- Chung H-Y, Song M-K, Park M-H (2003) A study of the anthropometric indices and eating habits of female college students. Journal of Community Nutrition 5: 21-28.

- Kerver JM, Yang EJ, Obayashi S, Bianchi L, Song WO (2006) Meal and snack patterns are associated with dietary intake of energy and nutrients in US adults. Journal of the American Dietetic Association 106: 46-53.

- Ma Y, Bertone ER, Stanek III EJ, Reed GW, Hebert JR, et al. (2003) Association between eating patterns and obesity in a free-living US adult population. American Journal of Epidemiology 158: 85-92.

- Musaiger A, Radwan H (1995) Social and dietary factors associated with obesity in university female students in United Arab Emirates. Journal of the Royal Society of Health 115: 96-99.

- Timlin MT, Pereira MA (2007) Breakfast frequency and quality in the etiology of adult obesity and chronic diseases. Nutrition Reviews 65: 268-281.

- Afolabi W, Towobola S, Oguntona C, Olayiwola I (2013) Pattern of fast-food consumption and contribution to nutrient intakes of Nigerian University students. Int J Educ Res 1: 1-10.

- Lee J-E, Yoon W-Y (2014) A study of dietary habits and eating-out behavior of college students in Cheongju area. Technology and Health Care 22: 435-442.

- Tam R, Yassa B, Parker H, O’Connor H, Allman-Farinelli M (2017) University students’ on-campus food purchasing behaviors, preferences, and opinions on food availability. Nutrition 37: 7-13.

- Barrack MT, West J, Christopher M, Pham-Vera A-M (2019) Disordered eating among a diverse sample of first-year college students. Journal of the American College of Nutrition 38: 141-148.

- Goldschmidt AB, Aspen VP, Sinton MM, Tanofsky-Kraff M, Wilfley DE (2008) Disordered eating attitudes and behaviors in overweight youth. Obesity 16: 257-264.

- Costarelli V, Patsai A (2012) Academic examination stress increases disordered eating symptomatology in female university students. Eating and Weight Disorders-Studies on Anorexia, Bulimia and Obesity 17: e164-e169.

- Lauderdale ME, Yli-Piipari S, Irwin CC, Layne TE (2015) Gender differences regarding motivation for physical activity among college students: A self-determination approach. The Physical Educator 72.

- Calestine J, Bopp M, Bopp CM, Papalia Z (2017) College student work habits are related to physical activity and fitness. International Journal of Exercise Science 10: 1009.

- Aceijas C, Bello-Corassa R, Waldhäusl S, Lambert N, Cassar S (2016) Barriers and determinants of physical activity among UK university students. European Journal of Public Health 26.