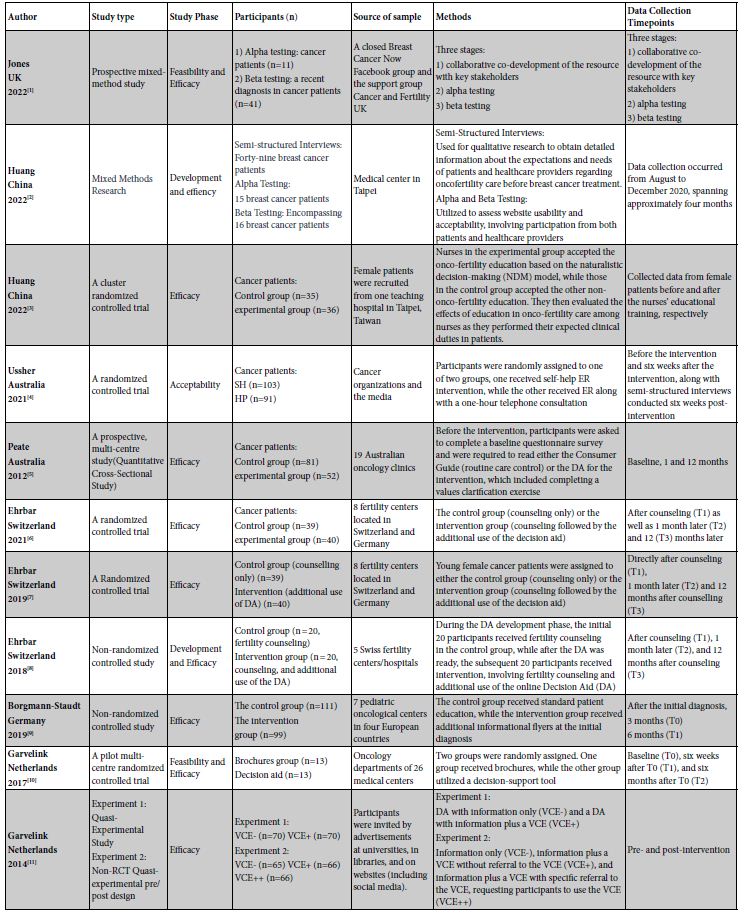

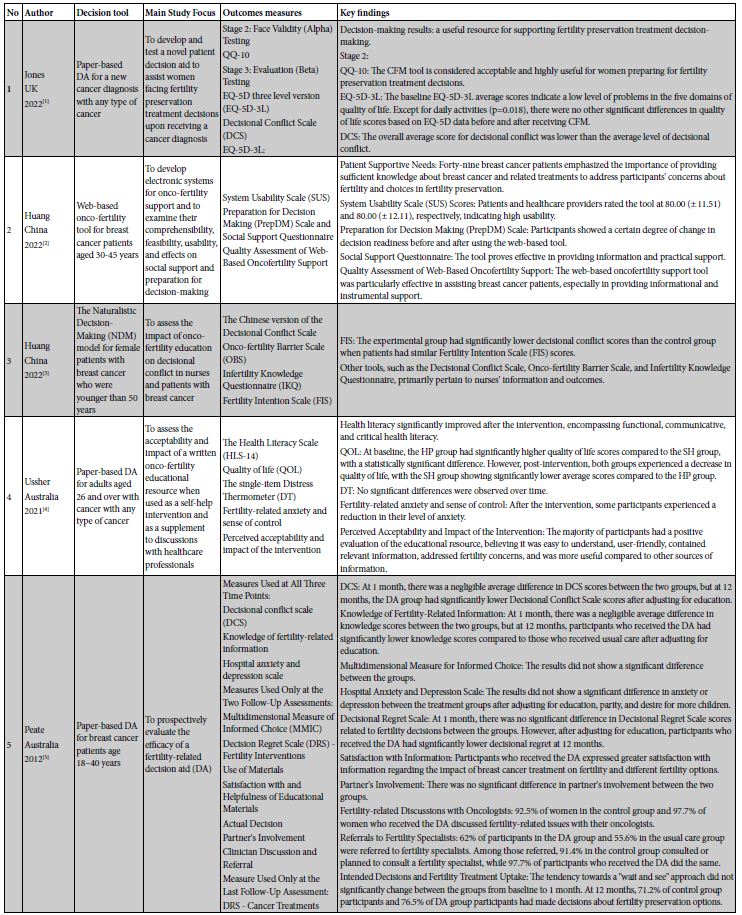

Abstract

Purpose: Climate change poses a significant threat to human health, particularly affecting the endocrine system. This study aims to explore the impact of climate change on various endocrine pathways and its implications for morbidity and mortality rates.

Methods: A review of literature was conducted to investigate the relationship between climate change and the endocrine system. Relevant databases were searched for studies on the effects of temperature changes, air pollution, and vector-borne diseases on hormone levels and endocrine health. Factors influencing the degree of impact, such as climate-related stressors and individual susceptibility, were also examined.

Findings: Climate change exerts a notable influence on the endocrine system, leading to hormone imbalances and increased mortality rates. Direct and indirect effects of climate change events, including temperature changes, air pollution, and vector-borne diseases, contribute to these disruptions. Certain components of the endocrine system, such as the adrenal gland, thyroid gland, HPA axis, and reproductive organs, are particularly vulnerable to environmental changes.

Implications: Understanding the mechanisms by which climate change affects endocrine disorders is crucial for addressing this global health issue. Efforts to mitigate the impact of climate change on human health should consider the specific vulnerabilities of the endocrine system and prioritize interventions to minimize morbidity and mortality associated with endocrine-related conditions.

Keywords

Climate change, Endocrine disorders, Health implications, Endocrine-disrupting chemicals (EDCs), Environmental factors

Introduction

Climate change, caused primarily by human activities such as greenhouse gas emissions from power generation, manufacturing, and deforestation, results in significant alterations in weather patterns and temperatures. This has profound implications for public health, increasing morbidity and mortality due to extreme temperature conditions [1,2]. Recent research covering 2000 to 2019 indicates that approximately 5 million deaths per year worldwide can be attributed to non-optimal temperatures, with a substantial portion occurring in East and South Asia, highlighting regional disparities in climate change impacts [3]. Furthermore, environmental factors influenced by climate change can affect the endocrine system, potentially disrupting hormonal balance and leading to the development of disorders. This paper explores the multifaceted impact of climate change on human health, shedding light on both direct consequences, such as mortality, and indirect consequences related to endocrine disorders. By examining these connections, it aims to deepen our understanding of the challenges climate change poses to global public health and to contribute to discussions on mitigating its adverse effects.

This literature review provides a comprehensive analysis of the effects of climate change on the endocrine system, an area that remains relatively unexplored. By synthesizing the results of published studies, we examine how climate-related stressors such as temperature fluctuations, air pollution, and vector-borne diseases disrupt hormonal balance and contribute to increased morbidity and mortality. We outline the direct and indirect pathways through which climate change exerts its effects and describe the mechanisms involved. This review addresses a major gap in the literature and highlights the urgent need to consider endocrine health in the context of climate change.

Climate Change

Climate Change Mechanisms and Human Health

Climate change encompasses significant alterations in regional and global climate over time. These changes affect various climate parameters: average and peak temperatures, humidity, precipitation, atmospheric pressure, water salinity, and the extent of mountain and polar glaciers [4]. In 2020, the global average surface temperature was 0.94 degrees Celsius higher than the 1951-1980 average. Projections suggest that by 2100, the average could rise by 4 degrees Celsius above the 1986-2005 average, significantly exceeding previous projections [5]. The primary driver of Earth’s warming is the emission of greenhouse gases from human activities, particularly carbon dioxide, methane, and nitrous oxide. These gases trap heat in the lower atmosphere, raising temperatures and posing both short-term and long-term threats to human health and well-being.

Extreme Events

Sub-Optimal Temperatures

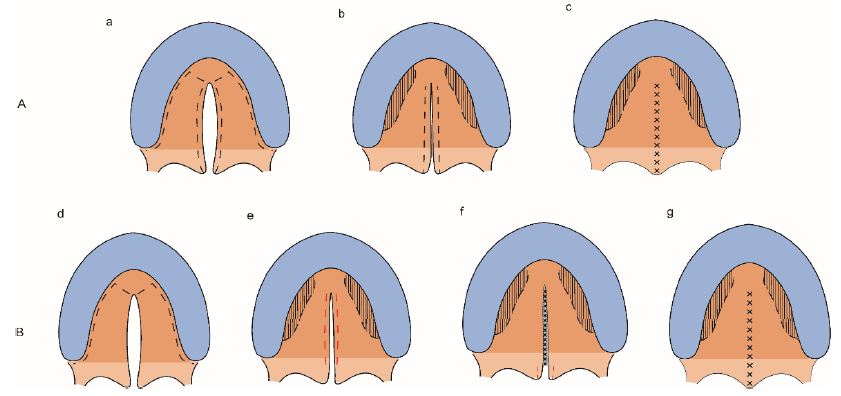

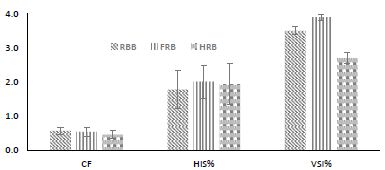

Extremes of temperature, both low and high, increase mortality. Deaths from suboptimal temperatures were estimated at 9.43% of all deaths worldwide from 2000 to 2019 [6] (Figure 1). Exposure to high temperatures is associated with more emergency department visits and hospital admissions for cardiovascular, respiratory, and endocrine disorders [7-9], although the exact temperature thresholds for these health effects may not be explicitly stated in the cited literature.

Figure 1: Annual average excess deaths due to non-optimal temperature and regional proportion for 2000-19 by continent and region.
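As a rough consistency check, the two headline figures in this section can be combined: about 5 million deaths attributed to non-optimal temperatures [3] and a 9.43% share of all deaths [6]. The sketch below assumes, as the Figure 1 caption suggests, that both describe the same annual average for 2000-2019; the implied global total is an illustrative back-calculation, not a result reported by the cited studies, and the function name is hypothetical.

```python
def implied_total_deaths(attributable_deaths: float, attributable_share: float) -> float:
    """Total deaths implied by an attributable death count and its share (0-1] of all deaths."""
    if not 0 < attributable_share <= 1:
        raise ValueError("attributable_share must be in the interval (0, 1]")
    return attributable_deaths / attributable_share


# Assumed inputs: ~5 million deaths per year attributed to non-optimal
# temperatures and a 9.43% share of all deaths (annual averages, 2000-2019).
total = implied_total_deaths(5_000_000, 0.0943)
print(f"Implied total deaths per year: {total / 1e6:.1f} million")
# prints "Implied total deaths per year: 53.0 million"
```

The back-calculation only shows that the two quoted figures are mutually consistent with a global total of roughly 53 million deaths per year under the stated assumption.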

Blauw and colleagues investigated the relationship between fluctuations in outdoor temperature and the prevalence of diabetes mellitus in the USA from 1990 to 2009 and found that each 1-degree Celsius increase in outdoor temperature could be associated with more than 100,000 new cases of diabetes in the country. Blauw also proposed that brown adipose tissue (BAT) metabolism correlates with temperature: lower temperatures increase fatty acid metabolism by BAT and improve insulin sensitivity, and the opposite is hypothesized to occur at higher temperatures. More data are needed to establish a direct link between the two [10]. This is consistent with the interconnection between climate change and diabetes, given that individuals with diabetes are at higher risk of dehydration and cardiovascular events during extreme heat. Evidence also shows that high temperatures can trigger behavioural and mental disorders, increasing cases of anxiety, depression, and suicide attempts [11,12]. Schwartz’s analysis of 160,062 deaths among individuals aged 65 or older in Wayne County, Michigan, found that patients with diabetes faced a higher risk of mortality on hot days, highlighting the vulnerability of this group to heat stress [13]. Associations were also observed between daily maximum temperatures and Emergency Department visits in Atlanta, Georgia, for internal medicine conditions including diabetes, emphasizing the broader health implications of rising temperatures [14]. An analysis of 4,474,943 general practitioner consultations in Great Britain from 2012 to 2014 showed increased odds of seeking medical consultation at high temperatures [15]. Furthermore, the African continent faces a heightened risk from rising temperatures, as projections suggest increasingly severe heat extremes occurring over shorter durations. Kapwata et al.’s findings suggest potential temperature increases of 4-6 degrees Celsius for the African region during 2071-2100, heightening vulnerability to heat-related illnesses, especially among young children and the elderly [16]. Overall, these findings underscore the urgent need to address the intricate interplay among climate change, rising temperatures, and heightened susceptibility to diabetes and its associated complications.

Wildfires

High temperatures and low precipitation increase the risk of wildfires, which can directly cause burns, injuries, and premature deaths [17]. Compounds released during wildfires, such as benzene and free radicals, can have far-reaching consequences for human health. Exposure to wildfire pollutants can disrupt the endocrine system, leading to imbalances in hormonal regulation. Specifically, the released compounds may impair the functioning of the digestive, hematopoietic, and reproductive systems, creating a cascade effect that increases susceptibility to endocrine disorders [18].

Floods and Storms

Floods, caused by heavy rainfall and rising sea levels, are among the most prevalent and devastating natural disasters. They cause injuries and drownings and increase the risk of water- and vector-borne diseases such as dengue, malaria, yellow fever, and West Nile virus infection [19,20]. Patients with diabetes have altered immune responses to various pathogens and are at risk of severe infections, which increases morbidity and mortality.

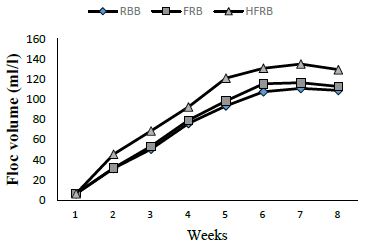

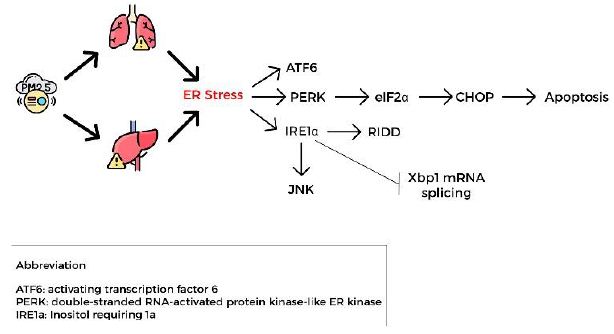

Air Pollution

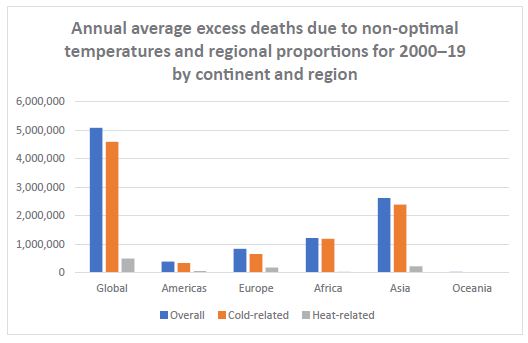

Air pollution reduces lung function in both children and adults and exacerbates the symptoms of asthma and chronic obstructive pulmonary disease (COPD). In addition, several studies have shown an association between air pollution and insulin resistance, which heightens the risk of developing diabetes (Figure 2). Particulate matter smaller than 2.5 µm (PM2.5) increases the probability of developing diabetes and exacerbates the risk of diabetes-related complications [21,22].

Figure 2: Pathophysiology of air pollution and insulin resistance.

Food Insecurities

Extreme weather events may reduce crop production, which can in turn cause famine and malnutrition and thereby increase the risk of infectious diseases [23].

Vulnerable Populations in Healthcare

Climate change may affect some sections of a community more than others because of risk factors in those individuals. Vulnerable groups in a community, such as the poor, the elderly, disabled people, children, prisoners, and people who misuse substances, experience heightened mental and physical stress as a result of exposure to natural disasters. Reviews have shown that social factors affecting vulnerable individuals are correlated with their capacity to adjust to changing environmental conditions and natural disasters [24]. In the coming decades, disparities may intensify, not only because of regional variations in environmental change, such as water scarcity and soil erosion, but also because of disparities in economic status, social standing, human capital, and political influence [25].

Impact of Climate Change on Endocrine Disorders

Climate change exerts multifaceted effects on environmental factors, including temperature, pollution, and exposure to chemicals, which contribute to endocrine disruption. The interplay between these elements underscores the intricacy of the correlation between climate change and endocrine health. Temperature fluctuations, altered seasons, and extreme weather events induced by climate change can have significant repercussions on endocrine health [26]. Furthermore, altered seasonal patterns can disrupt circadian rhythms, affecting the production and regulation of hormones critical for various physiological processes. Extreme weather events, such as heatwaves or hurricanes, may cause stress responses in the body, potentially influencing the endocrine system and contributing to hormonal imbalances [27]. Climate-induced changes in food availability, quality, and contaminants also play a pivotal role in influencing endocrine disorders. Shifts in temperature and precipitation patterns can affect crop yields and nutritional content, impacting the availability of key nutrients essential for hormonal balance. Additionally, climate change may lead to the introduction of new contaminants into the food chain, potentially disrupting endocrine function. Pesticides, pollutants, and other environmental chemicals can mimic or interfere with hormones, contributing to the development of endocrine disorders [28].

Endocrine Disorders

Specific Endocrine Disorders and Climate Change

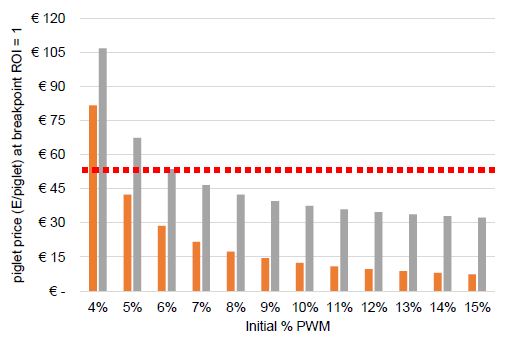

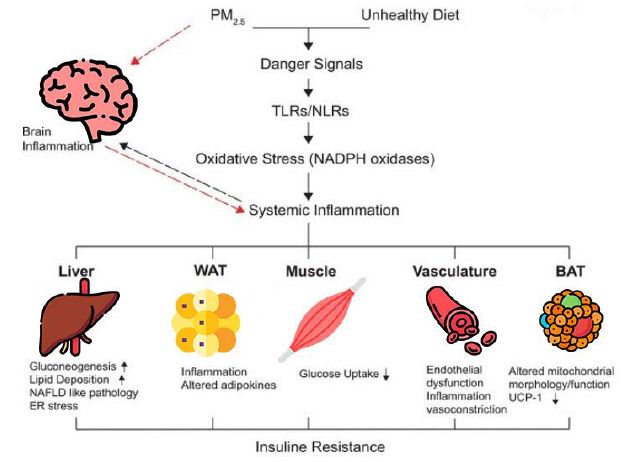

Thyroid Dysfunction: Brent et al. (2010) reported that environmental agents influencing the thyroid may also trigger autoimmune thyroid disease, which is often the cause of functional thyroid disorders. Abnormal thyroid function associated with environmental exposure is commonly attributed either to direct effects of the agents or to toxicants triggering thyroid autoimmunity, and its evaluation must consider factors such as thyroid autoantibody status, iodine intake, smoking history, family history of autoimmune thyroid disease, pregnancy, and medication use. Recognizing thyroid autoimmunity as a contributor to environmentally induced changes is crucial in these studies, as it sheds light on the pathogenesis of autoimmune thyroid disease and on the possible influence of environmental factors in its development. A broad range of environmental pollutants can interfere with thyroid function; many directly inhibit thyroid hormone production and consequently raise TSH to detectably high levels. Other substances, such as polychlorinated biphenyls (PCBs), have a thyroid hormone agonist effect [29]. Additionally, even low levels of air pollutants such as PM2.5 have been associated with hypothyroidism. There is robust evidence of increased cardiovascular morbidity and mortality associated with subclinical hypothyroidism (Figure 3) [30].

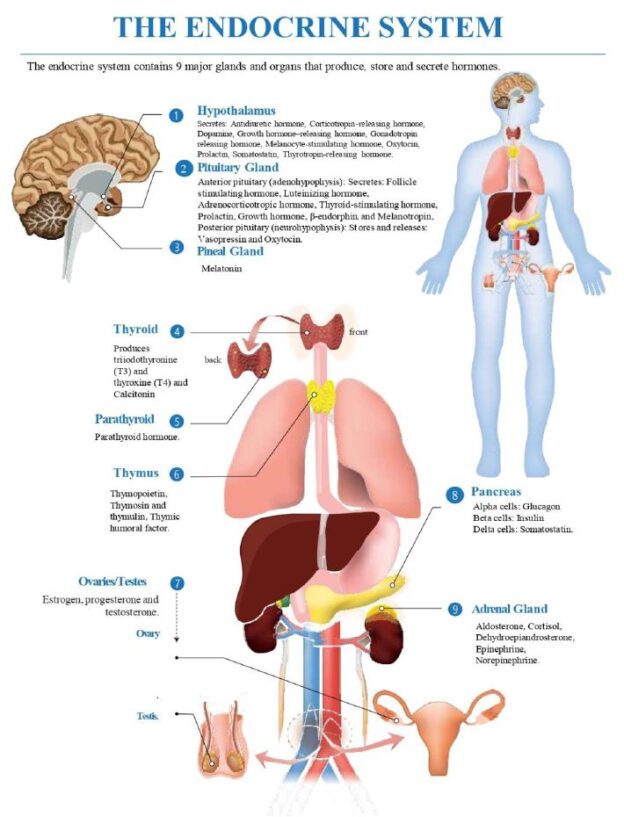

Figure 3: Overview of endocrine system.

Diabetes: Ratter-Rieck et al. (2023) reported that individuals with diabetes are more susceptible to the dangers of elevated ambient temperatures and heatwaves because of impaired responses to heat stress. This is of particular concern because the frequency of extreme heat exposure has risen in recent decades, coinciding with growth in the number of people living with diabetes, which reached 536 million in 2021 and is expected to rise to 783 million by 2045 [31]. Furthermore, evidence links PM concentrations to increased oxidative stress and impaired endothelial function, and PM is also suggested to alter coagulation and inflammation cascades through epigenetic disruption [32].

A Chinese study concluded that higher PM10 levels are associated with short-term increases in mortality, with a 5% overall mortality rate from diabetes at high PM10 levels [32].
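The diabetes projection quoted earlier in this subsection [31] (536 million people in 2021, 783 million by 2045) can be restated as an average annual growth rate. The sketch below assumes constant compound growth over the 24 years, a simplification for illustration that is not necessarily the projection method used in the cited source; the function name is hypothetical.

```python
def compound_annual_growth_rate(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


start_2021, end_2045 = 536, 783  # millions of people living with diabetes [31]
rate = compound_annual_growth_rate(start_2021, end_2045, 2045 - 2021)
print(f"Total increase: {end_2045 - start_2021} million ({end_2045 / start_2021 - 1:.0%})")
# prints "Total increase: 247 million (46%)"
print(f"Implied average annual growth: {rate:.1%}")
# prints "Implied average annual growth: 1.6%"
```

Under this assumption, the projection corresponds to roughly 1.6% more people living with diabetes each year.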

Reproductive Disorders: The 2021 report “Lancet Countdown on health and climate change: code red for a healthy future” emphasizes the critical health risks posed by climate change [33], which affects human health through food shortages, reduced water quality, displacement, and increased disease vectors. These effects can be direct, such as heat stress and exposure to wildfire smoke affecting cellular processes, or indirect, such as vector-borne diseases, population displacement, depression, and violence. The report underscores disparities in exposure among sociodemographic groups and among pregnant individuals. Vulnerable populations, including women, lower-income individuals, pregnant women, and children, are disproportionately affected. The mechanisms underlying these effects involve endocrine disruption, induction of reactive oxygen species, DNA damage, and disruption of normal cellular functions [33].

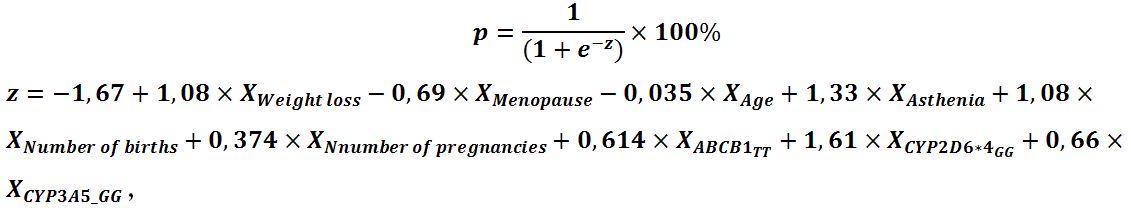

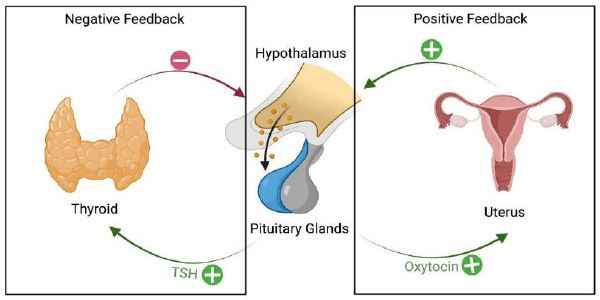

Regulatory Mechanisms

The endocrine system is regulated by feedback mechanisms involving the hypothalamus, pituitary gland, and target organs [34-36]. The feedback mechanism can be either positive or negative, depending on the effect of the hormone on the target organ [36]:

– A negative feedback mechanism occurs when the original effect of the stimulus is reduced by the output [36].

– A positive feedback mechanism occurs when the original effect of the stimulus is amplified by the output [35].

The hypothalamic-pituitary axis involves the production of hormones by the hypothalamus, which either stimulate or suppress the release of hormones from the pituitary gland [34]. Consequently, the pituitary gland produces hormones that stimulate or inhibit the release of hormones from other endocrine glands [34]. The target organs produce hormones that provide feedback, either positive or negative, to the hypothalamus and pituitary gland to regulate hormone production (Table 1) [34].
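The difference between negative and positive feedback can be illustrated with a toy discrete-time model. In the sketch below, the names `simulate`, `basal`, `gain`, and `clearance` and their values are hypothetical and dimensionless, chosen only to show the qualitative behaviour, not to model any real hormone. With negative feedback, the hormone level settles at a steady state; with positive feedback, each output amplifies the next release, so the level keeps rising.

```python
def simulate(feedback: str, steps: int = 50, h0: float = 1.0,
             basal: float = 1.0, gain: float = 0.5, clearance: float = 0.3) -> list[float]:
    """Hormone level over time under negative or positive feedback (toy units)."""
    levels = [h0]
    for _ in range(steps):
        h = levels[-1]
        if feedback == "negative":
            # Output inhibits the stimulus: higher levels suppress further release.
            release = basal / (1 + gain * h)
        elif feedback == "positive":
            # Output amplifies the stimulus: higher levels drive further release.
            release = basal * (1 + gain * h)
        else:
            raise ValueError("feedback must be 'negative' or 'positive'")
        # Next level = current level + new release - hormone cleared this step.
        levels.append(h + release - clearance * h)
    return levels


negative = simulate("negative")
positive = simulate("positive")
print(f"negative feedback, final level: {negative[-1]:.2f}")  # settles near 1.77
print(f"positive feedback, final level: {positive[-1]:.3g}")  # keeps growing
```

In the negative case, the steady state is where release equals clearance; in the positive case, each step multiplies the level by more than one, which is why physiological positive loops need an external event to terminate them.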

Table 1: EDCs and Drugs: Site of Action and Organ Impact.

| EDC Name | Target Organ | Hormonal Effects |
|---|---|---|
| Phthalates | Thyroid | Reduced free and total serum T4; increased TSH |
| Perchlorate | Thyroid | Inhibited iodine uptake; inhibited thyroid hormone synthesis; reduced neonatal cognitive function |
| Etomidate, ketoconazole, cardiac glycosides | Adrenal gland | Inhibited 11β-hydroxylase |
| General EDCs | Adrenal gland | Inhibited StAR, aromatase, and hydroxysteroid dehydrogenases; disrupted aldosterone synthesis |

Common Endocrine Disorders

Diabetes Mellitus

Diabetes mellitus is a condition defined by high blood glucose levels. Type 1 diabetes mellitus results from autoimmune destruction of pancreatic β-cells, whereas type 2 diabetes mellitus is due to insulin resistance. Symptoms of severe hyperglycemia include excessive urination, thirst, weight loss, and blurred vision. Chronic hyperglycemia can impair growth, increase susceptibility to infection, and lead to life-threatening conditions such as ketoacidosis or hyperosmolar syndrome. Long-term complications of diabetes include retinopathy, nephropathy, peripheral and autonomic neuropathy, cardiovascular diseases, hypertension, and lipid metabolism abnormalities [37].

Diabetes can be diagnosed using plasma glucose criteria, either the fasting plasma glucose (FPG) value or the 2-hour plasma glucose (2-h PG) value during a 75-gram oral glucose tolerance test (OGTT), or using A1C criteria.
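These criteria can be expressed as a simple decision rule. The thresholds below are taken from the American Diabetes Association Standards of Care (diabetes: FPG ≥126 mg/dL, 2-h PG ≥200 mg/dL, A1C ≥6.5%; prediabetes: FPG 100-125 mg/dL, 2-h PG 140-199 mg/dL, A1C 5.7-6.4%) rather than from this text, and the function name is hypothetical. It is a sketch, not a clinical tool: in practice, unless hyperglycemia is unequivocal, a diabetes diagnosis requires confirmation by repeat testing.

```python
from typing import Optional

# Assumed ADA thresholds (not stated in the text): (diabetes cutoff, prediabetes lower bound).
FPG_MG_DL = (126.0, 100.0)
TWO_H_PG_MG_DL = (200.0, 140.0)
A1C_PERCENT = (6.5, 5.7)


def classify_glycemia(fpg_mg_dl: Optional[float] = None,
                      two_h_pg_mg_dl: Optional[float] = None,
                      a1c_percent: Optional[float] = None) -> str:
    """Return 'diabetes', 'prediabetes', or 'normal' from whichever results are supplied."""
    pairs = [(fpg_mg_dl, FPG_MG_DL), (two_h_pg_mg_dl, TWO_H_PG_MG_DL), (a1c_percent, A1C_PERCENT)]
    measured = [(value, cutoffs) for value, cutoffs in pairs if value is not None]
    if not measured:
        raise ValueError("at least one test result is required")
    # Any single result at or above its diabetes cutoff meets the criterion.
    if any(value >= cutoffs[0] for value, cutoffs in measured):
        return "diabetes"
    if any(value >= cutoffs[1] for value, cutoffs in measured):
        return "prediabetes"
    return "normal"


print(classify_glycemia(fpg_mg_dl=131))                  # diabetes
print(classify_glycemia(a1c_percent=6.0))                # prediabetes
print(classify_glycemia(fpg_mg_dl=92, a1c_percent=5.4))  # normal
```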

Thyroid Disorders

Hyperthyroidism

Hyperthyroidism is characterized by excess thyroid hormone production. Causes include thyroid autonomy and Graves’ disease, and the condition mainly affects younger women and the elderly [38]. Symptoms include heart palpitations, tiredness, hand tremors, anxiety, poor sleep, weight loss, heat sensitivity, and excessive sweating; common physical findings include tachycardia and tremor [39]. Diagnosis involves measuring thyroid hormone levels: elevated free thyroxine (T4) and triiodothyronine (T3) alongside suppressed thyroid-stimulating hormone (TSH) [40]. Treatment options include thionamides and radioiodine therapy; radioiodine is often preferred, especially in the US, although pregnancy-related risks must be considered in women [38].

Hypothyroidism

Hypothyroidism is a thyroid hormone deficiency. It occurs more frequently in women, older individuals (>65 years), and white populations, with a higher risk in those with autoimmune diseases [41,42]. Symptoms of hypothyroidism include fatigue, cold intolerance, weight gain, constipation, voice changes, and dry skin. However, the clinical presentation varies with age, gender, and time to diagnosis. Diagnosis relies on thyroid hormone level criteria. Primary hypothyroidism is defined by elevated TSH levels and decreased free thyroxine levels, while mild or subclinical hypothyroidism, often indicating early thyroid dysfunction, is characterized by high TSH levels and normal free thyroxine levels [43]. Early thyroid hormone therapy and supportive measures can prevent progression to myxedema coma [43-45].
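The laboratory patterns described for hyperthyroidism and hypothyroidism can be summarized as a lookup on whether TSH and free T4 fall below, within, or above their reference ranges. The reference ranges in the sketch (TSH 0.4-4.0 mIU/L, free T4 0.8-1.8 ng/dL) are illustrative assumptions only, since real ranges are assay- and laboratory-specific; the function names are hypothetical, and patterns the text does not describe are returned as needing further evaluation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReferenceRange:
    low: float
    high: float


# Illustrative reference ranges only (assumed); real ranges vary by laboratory.
TSH_RANGE = ReferenceRange(0.4, 4.0)   # mIU/L
FT4_RANGE = ReferenceRange(0.8, 1.8)   # ng/dL


def position(value: float, ref: ReferenceRange) -> str:
    """Classify a result as 'low', 'normal', or 'high' relative to its range."""
    if value < ref.low:
        return "low"
    if value > ref.high:
        return "high"
    return "normal"


# (TSH, free T4) patterns as described in the text, plus the normal case.
PATTERNS = {
    ("high", "low"): "primary hypothyroidism",
    ("high", "normal"): "subclinical hypothyroidism",
    ("low", "high"): "hyperthyroidism",
    ("normal", "normal"): "euthyroid",
}


def thyroid_pattern(tsh: float, free_t4: float) -> str:
    key = (position(tsh, TSH_RANGE), position(free_t4, FT4_RANGE))
    return PATTERNS.get(key, "other pattern: needs further evaluation")


print(thyroid_pattern(9.5, 0.5))   # primary hypothyroidism
print(thyroid_pattern(6.2, 1.1))   # subclinical hypothyroidism
print(thyroid_pattern(0.01, 3.2))  # hyperthyroidism
```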

Polycystic Ovary Syndrome (PCOS)

PCOS is a prevalent endocrine disorder in women, affecting 5% to 15% of the female population. Its core features are chronic anovulation, hyperandrogenism, and polycystic ovaries, and the Rotterdam criteria require two of these three features for diagnosis [46]. PCOS is often underdiagnosed, leading to complications such as infertility, metabolic syndrome, obesity, diabetes, cardiovascular risks, depression, sleep apnea, endometrial cancer, and liver diseases. Management involves lifestyle changes, hormonal therapies, and insulin-sensitizing agents [47].
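The Rotterdam rule of two out of three features is a simple counting rule. The sketch below (function name hypothetical) encodes only that rule; it deliberately omits the exclusion of other conditions that can mimic PCOS, such as thyroid disease, hyperprolactinemia, and non-classic congenital adrenal hyperplasia, which the full criteria also require.

```python
def meets_rotterdam_features(chronic_anovulation: bool,
                             hyperandrogenism: bool,
                             polycystic_ovaries: bool) -> bool:
    """True when at least two of the three Rotterdam features are present.

    Exclusion of other disorders, which the full criteria also require, is not modelled.
    """
    # bool is a subclass of int in Python, so sum() counts the features present.
    return sum((chronic_anovulation, hyperandrogenism, polycystic_ovaries)) >= 2


print(meets_rotterdam_features(True, True, False))   # True
print(meets_rotterdam_features(False, False, True))  # False
```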

Adrenal Disorders

Cushing’s Syndrome

Cushing syndrome, also known as hypercortisolism, results from high cortisol levels, often due to iatrogenic corticosteroid use or herbal therapies [48]. It causes weight gain, fatigue, mood swings, hirsutism, and immune impairment. Treatment options include tapering exogenous steroids, surgical resection, radiotherapy, medications, and bilateral adrenalectomy for unresectable ACTH-secreting tumors [49].

Addison’s Disease (Primary Adrenal Insufficiency)

Addison’s disease is a rare but life-threatening form of adrenal insufficiency. It primarily impairs glucocorticoid and mineralocorticoid production and can manifest acutely during illness. It is more common in women aged 30-50 and is often linked to autoimmune conditions. Adrenal insufficiency has two main types: primary adrenal insufficiency (Addison’s disease), in which the adrenal glands themselves are damaged, and secondary adrenal insufficiency, in which the dysfunction results from a lack of stimulation by the pituitary gland or hypothalamus [50]. Diagnosis is challenging because of its variable presentation, and the condition can lead to an acute adrenal crisis with severe dehydration and shock [51].

Pheochromocytoma

Pheochromocytomas are rare, mostly benign tumors of the adrenal medulla or paraganglia that often cause symptoms such as high blood pressure, headaches, heart palpitations, and excessive sweating [52]. These tumors are often linked to genetic mutations, and genetic testing is recommended for all patients. Symptoms are caused by overproduction of catecholamines. Elevated levels of metanephrines or normetanephrines confirm the diagnosis, and radiological imaging helps locate the tumor and determine its spread. Surgery is the only effective therapy; drugs are used before surgery to manage hypertension, arrhythmias, and fluid retention [53].

Adrenal Hemorrhage

Adrenal hemorrhage is a rare condition involving bleeding into the adrenal glands, with symptoms ranging from mild abdominal pain to serious cardiovascular collapse. Causes include abdominal trauma, sepsis, clotting disorders, anticoagulant use, pregnancy, stress, antiphospholipid syndrome, and essential thrombocythemia, and the hemorrhage can be unilateral or bilateral. Rapid diagnosis requires high clinical suspicion and relies on imaging and biochemical evaluation [54].

Primary Hyperaldosteronism

Primary hyperaldosteronism, also known as Conn syndrome, causes hypertension and low potassium levels through excessive aldosterone release from the adrenal glands. It is a common secondary cause of hypertension, especially in resistant cases and in women. Diagnosis involves assessment of the aldosterone-renin ratio, confirmatory tests such as the saline infusion test or captopril challenge, and imaging studies such as adrenal CT or MRI [55]. Treatment options include aldosterone antagonists, potassium-sparing diuretics, surgery, and corticosteroids [56].
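The aldosterone-renin ratio used in screening is the plasma aldosterone concentration divided by plasma renin activity. The sketch below assumes units of ng/dL for aldosterone and ng/mL/h for renin activity and uses a cutoff of 30, one commonly quoted screening threshold; cutoffs vary between guidelines and assays, the function names are hypothetical, and a positive screen still requires the confirmatory tests and imaging described above.

```python
ARR_SCREENING_CUTOFF = 30.0  # assumed cutoff; thresholds differ between guidelines and assays


def aldosterone_renin_ratio(aldosterone_ng_dl: float, renin_activity_ng_ml_h: float) -> float:
    """Plasma aldosterone (ng/dL) divided by plasma renin activity (ng/mL/h)."""
    if renin_activity_ng_ml_h <= 0:
        raise ValueError("renin activity must be positive")
    return aldosterone_ng_dl / renin_activity_ng_ml_h


def positive_arr_screen(aldosterone_ng_dl: float, renin_activity_ng_ml_h: float,
                        cutoff: float = ARR_SCREENING_CUTOFF) -> bool:
    """True when the ratio meets the cutoff; confirmatory testing is still required."""
    return aldosterone_renin_ratio(aldosterone_ng_dl, renin_activity_ng_ml_h) >= cutoff


print(aldosterone_renin_ratio(18.0, 0.5))  # 36.0
print(positive_arr_screen(18.0, 0.5))      # True
print(positive_arr_screen(12.0, 2.0))      # False (ratio 6.0)
```

Because the ratio divides by renin, very low renin values inflate it sharply, which is one reason the ratio is used for screening rather than for diagnosis on its own.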

Medical Impact of Endocrine Disorders

Health Consequences

Cardiovascular Complications

Uncontrolled diabetes is associated with vessel damage due to atherosclerosis, which increases the risk of heart attacks and strokes [57-59]. Hyperglycemia also contributes to inflammation, oxidative stress, and endothelial dysfunction, further promoting the development of cardiovascular complications [52-54].

Metabolic Disturbances

Conditions with hormonal imbalances, like PCOS and hypothyroidism, cause weight gain and obesity. Obesity is a major risk factor for numerous health issues, including type 2 diabetes, heart disease, and joint problems [57-59].

Autoimmune Disorders

Autoimmunity underlies common endocrine disorders such as Hashimoto’s thyroiditis and Graves’ disease [57-61]. These conditions can increase the risk of developing other autoimmune disorders, such as rheumatoid arthritis or lupus [57-59].

Quality of Life Implications

Physical Well-being

Many endocrine disorders, such as diabetes, are associated with physical symptoms such as fatigue, pain, weakness, and discomfort [62-64].

Emotional and Psychological Well-being

Hormonal imbalances affect neurotransmitters in the brain, leading to mood disorders such as depression, anxiety, and irritability [62-65].

Social and Relationship Impact

Individuals with endocrine disorders may experience social isolation due to their symptoms or the demands of managing their condition, limiting their social interactions [62-64].

Financial Burden

Treating and managing endocrine disorders often involves ongoing medical costs, medications, doctor visits, and surgical procedures. These expenses can create a financial burden on individuals and their families, impacting their overall financial well-being [62-64].

Self-esteem and Body Image

PCOS and hypothyroidism can cause weight gain and changes in appearance, which may affect self-esteem and body image. Individuals with obesity-related endocrine conditions may experience societal stigmatization and discrimination, which can lead to emotional distress and lower self-esteem [62-64].

The Intersection: Climate Change and Endocrine Disorders

Endocrine Disruption Mechanisms

Endocrine Disrupting Chemicals (EDCs)

Endocrine disruptors are foreign substances that interfere with the physiological function of the endocrine system, leading to detrimental effects. In adults, exposure to EDCs may occur through food, water, and skin contact; fetal and infant exposure occurs through placental transmission and breastfeeding. Effects are seen both in the exposed population and in subsequent generations. More than 4,000 agents with endocrine-disrupting activity are known to pollute the environment [66]. EDCs mimic endocrine action by binding to a variety of hormone receptors and may act as either agonists or antagonists. They typically act through two receptor systems: nuclear receptors and membrane-bound receptors [67]. The most commonly recognized EDCs include agricultural and industrial chemicals, heavy metals, drugs, and phytoestrogens [66,67]. Human exposure occurs mostly through ingestion, and because these substances have a high affinity for fat, they accumulate in fatty tissue. EDCs also interfere with the production, function, and breakdown of sex hormones, affecting fetal growth and reproductive capacity. These effects are associated with developmental problems, infertility, hormone-sensitive malignancies, and disruptions in energy balance. Moreover, EDCs disrupt the hypothalamic-pituitary-thyroid and hypothalamic-pituitary-adrenal axes. However, the full extent of the influence of EDCs on physical health is difficult to evaluate because of delayed consequences, variable ages of onset, and the particular susceptibility of certain demographic groups [67] (Table 2).

Table 2: Impact of Environmental Disruptors on Endocrine Pathways by Organ.

| Organ | Environmental disruptor | Impact on endocrine pathways |
|---|---|---|
| Pituitary | Pesticides, PVC | Precocious puberty; delayed puberty; disruption of circadian rhythm |
| Thyroid | Xenoestrogens, heat | Decreased iodine uptake; thyroid hormone antagonism; increased degradation of thyroid hormone |
| Pancreas | Heat, particulate matter | Insulin resistance |
| Adrenal | Xenoestrogens, hexachlorobenzene | Adrenal biosynthetic defect |
| Gonads | Phthalates, bisphenol A, polybrominated diphenyl ethers, diethylstilbestrol, free radicals, and benzene from wildfires | Male infertility; female infertility; endometriosis; reproductive tract malignancy; polycystic ovary syndrome |

Hormonal Imbalances

EDCs can interfere with hormonal homeostasis through several mechanisms, affecting hormone synthesis, release, transport, metabolism, and action, mainly by exploiting their structural similarity to thyroid hormones [68]. The impact of EDCs on the endocrine system is not fully understood; however, existing literature and medical research suggest an association between EDCs and endocrine disorders, implicating EDCs as potential causes of obesity, diabetes mellitus, and other diseases [28,69-72].

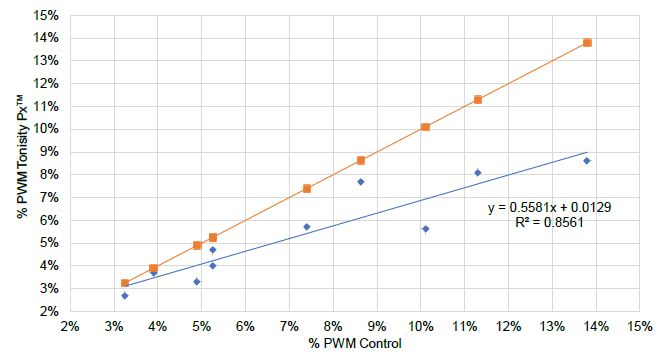

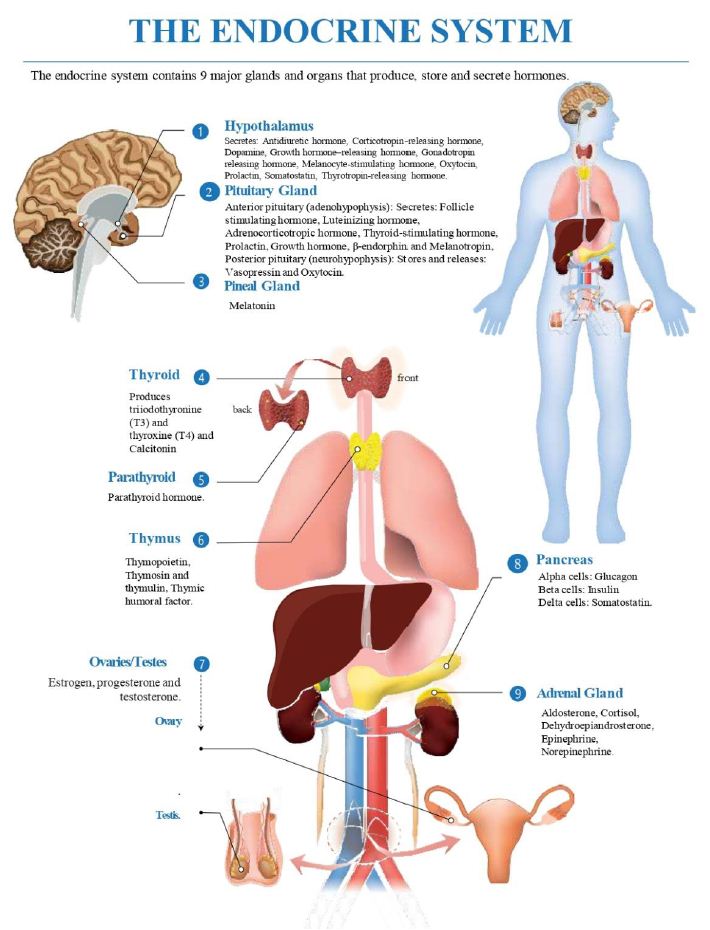

Phthalates, chemicals commonly used in pharmaceuticals [73], have been negatively associated with free and total serum T4 and positively associated with TSH levels [74]. Perchlorate, a significant EDC, inhibits thyroidal iodine uptake at doses as small as 5 mg/kg/day, and higher doses inhibit thyroid hormone synthesis [75]; perchlorate exposure also carries a risk of reduced cognitive function in neonates [76]. In the adrenal glands, EDCs act by inhibiting or inducing proteins and enzymes involved in steroidogenesis, including steroidogenic acute regulatory protein (StAR), aromatase, and hydroxysteroid dehydrogenases [77,78], and they play a minor role in disrupting aldosterone synthesis [66]. Drugs such as etomidate, ketoconazole, and cardiac glycosides may inhibit steroidogenesis by interfering with 11β-hydroxylase. This interference blocks the conversion of 11-deoxycortisol into cortisol, affecting the production of glucocorticoids and mineralocorticoids (Figure 4).

Figure 4: Feedback regulation of hormone production: positive and negative.

Decreased cortisol levels impair the body’s response to stress and its metabolism, which may result in symptoms of adrenal insufficiency. Moreover, disrupted aldosterone production may lead to electrolyte disturbances and impaired blood pressure regulation. The intricacy of this pathway emphasizes the need to maintain a precise hormonal equilibrium for optimal physiological functioning [66]. Environmental toxins also play a critical role in reproductive health outcomes. In females, heavy metals and other environmental toxins can impair reproductive health by altering hormone function, leading to adverse reproductive events. In males, these toxins can affect semen quality, altering sperm concentration, motility, and morphology [90,91] (Table 3).

Table 3: Climate change impact on different phases of reproductive life.

| Phase | Impact Factors | Description |
|---|---|---|
| Preconception | Temperature Extremes | Affects fertility and sperm quality; heat stress can reduce conception rates. |
| | Air Quality | Exposure to pollutants can impair reproductive health and increase the risk of infertility. |
| | Nutrition and Food Security | Poor nutrition can lead to decreased fertility; food insecurity affects overall health. |
| | Water Quality and Availability | Contaminated water can lead to reproductive health issues; water scarcity impacts general health. |
| Pregnancy | Temperature Extremes | Higher risk of heat stress, preterm birth, and other complications. |
| | Air Quality | Poor air quality can lead to pregnancy complications such as preeclampsia and low birth weight. |
| | Nutrition and Food Security | Malnutrition can affect fetal development; food insecurity can lead to nutrient deficiencies. |
| | Water Quality and Availability | Risk of waterborne diseases affecting maternal health. |
| Childbirth | Temperature Extremes | Increased risk during labor and delivery, particularly in hot climates. |
| | Air Quality | Respiratory issues during childbirth due to poor air quality. |
| | Water Quality and Availability | Clean water is essential for safe delivery; water scarcity and contamination can lead to complications. |
| Postpartum | Nutrition and Food Security | Impact on breastfeeding and recovery; malnutrition can affect milk production and maternal health. |
| | Temperature Extremes | Heat stress can affect the health of both mother and infant. |
| | Air Quality | Poor air quality can affect the respiratory health of both mother and newborn. |

Climate Change Factors Affecting Endocrine Health

Temperature Extremes and Hormonal Responses

Humans can survive in extreme environmental conditions and adapt to temperatures ranging from those of humid tropical forests to those of polar deserts [79,80]. Stress hormones and other stress-activated systems play a major role in adaptive responses to environmental change [81]. Studies by Mazzeo et al. and Woods et al. found that catecholamine and cortisol responses to physical exercise differ between hypoxic and normoxic conditions, with higher concentrations under hypoxia [82,83]. When oxygen is scarce (hypoxia), the body releases more catecholamines (particularly adrenaline and noradrenaline), which increase heart and respiratory rates, and cortisol levels rise, promoting the mobilization of energy stores. Under normal oxygen conditions (normoxia), exercise still stimulates catecholamine and cortisol secretion, although the levels may not reach those observed under hypoxia. These endocrine reactions support the body’s physiological responses during physical activity and are crucial for adapting to varying amounts of oxygen in the surroundings [84]. Endocannabinoid lipid mediators and N-acylethanolamines are closely linked to acclimation at multiple physiological levels, including central nervous, peripheral metabolic, and psychological systems, helping to maintain physiological homeostasis in response to environmental factors [85].

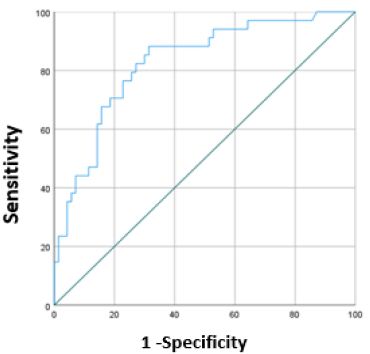

Environmental Toxins and Endocrine Dysfunction

Numerous epidemiological studies have linked exposure to environmental toxins with the risk of developing type 2 diabetes mellitus. Inflammation triggered by exposure to fine particulate matter (PM2.5) from air pollution is a common mechanism that may interact with other proinflammatory factors related to diet and lifestyle, influencing susceptibility to cardiometabolic diseases [86]. PM2.5 exposure has been shown to disrupt insulin receptor substrate (IRS) phosphorylation, impairing PI3K-Akt signaling and inhibiting insulin-induced glucose transporter translocation [86] (Figure 5).

Figure 5: PM2.5 exposure impact

Foodborne toxins such as cereulide, produced by Bacillus cereus, might contribute to the rising prevalence of both type 1 and type 2 diabetes: cereulide permeabilizes the mitochondrial membrane and thereby uncouples oxidative phosphorylation [87]. Because mitochondrial ATP production is crucial for glucose-stimulated insulin secretion, cereulide’s toxic effects on mitochondria could particularly harm beta-cell function and viability.

Altered Nutritional Patterns and Metabolic Health

Prudent diets, including fruits, vegetables, whole grains, fish, and legumes, have been associated with favorable effects on bone metabolism, including lower serum levels of the bone resorption marker C-terminal telopeptide (CTX) in women, and higher 25-hydroxyvitamin D (25OHD) and lower parathyroid hormone levels in men [9]. These diets have also been linked to favorable effects on glucose metabolism, such as lower insulin levels and a lower homeostatic model assessment of insulin resistance (HOMA-IR) [9]. In contrast, Western diets, characterized by the intake of soft drinks, potato chips, french fries, meats, and desserts, have been linked to adverse effects on bone metabolism, including elevated bone-specific alkaline phosphatase and reduced 25OHD levels in women and higher CTX levels in men [9]. They have also been linked to higher glucose, insulin, and HOMA-IR [88].
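HOMA-IR is reported above only as an outcome. As a minimal sketch, the Python code below computes the widely used original HOMA approximation, fasting insulin (μU/mL) × fasting glucose (mmol/L) / 22.5, together with an equivalent form for glucose in mg/dL. The function names and example values are hypothetical, and the cited studies may have used a different variant (such as the computer-based HOMA2 model), so the code illustrates the index rather than reproducing their analyses.

```python
def homa_ir(fasting_insulin_uU_per_mL: float, fasting_glucose_mmol_per_L: float) -> float:
    """Original HOMA-IR approximation: insulin (μU/mL) x glucose (mmol/L) / 22.5."""
    if fasting_insulin_uU_per_mL < 0 or fasting_glucose_mmol_per_L <= 0:
        raise ValueError("insulin must be non-negative and glucose positive")
    return fasting_insulin_uU_per_mL * fasting_glucose_mmol_per_L / 22.5


def homa_ir_mg_dl(fasting_insulin_uU_per_mL: float, fasting_glucose_mg_per_dL: float) -> float:
    """Same index with glucose in mg/dL; the constant 405 equals 22.5 x 18."""
    if fasting_insulin_uU_per_mL < 0 or fasting_glucose_mg_per_dL <= 0:
        raise ValueError("insulin must be non-negative and glucose positive")
    return fasting_insulin_uU_per_mL * fasting_glucose_mg_per_dL / 405.0


if __name__ == "__main__":
    # Hypothetical fasting values, for illustration only
    print(round(homa_ir(10.0, 5.0), 2))
    print(round(homa_ir_mg_dl(10.0, 90.0), 2))
```

Because 405 is 22.5 × 18 (18 being the approximate mmol/L to mg/dL conversion factor for glucose), both functions return the same value for equivalent inputs.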

Stress, Mental Health, and Endocrine Function

Climate-induced Stress and Hormone Levels

The primary response to heat stress involves activation of the hypothalamic-pituitary-adrenal axis, with a subsequent rise in plasma glucocorticoid levels. Epinephrine and norepinephrine are the main hormones elevated during prolonged exposure to environmental heat stress. Plasma thyroid hormone levels have been observed to decrease under heat stress compared with thermoneutral conditions, and the decreases in thyroid hormones and plasma growth hormone act synergistically to reduce heat production. Reduced growth hormone appears necessary for survival during heat stress, and insulin-like growth factor-1 (IGF-1) levels have been found to be lower during summer months [88].

Mental Health Implications for Endocrine Patients

Cortisol is one of the most extensively researched hormones in psychoneuroendocrinology and is central to stress-related and mental health disorders [92]. Short-term levels are assessed in serum, saliva, or urine, whereas hair cortisol can indicate long-term levels [93]. Stress triggers acute and chronic changes in cortisol release through the HPA (hypothalamic-pituitary-adrenal) axis [88]. The HPT (hypothalamic-pituitary-thyroid) axis is also affected by stress, showing transient activation with acute stressors and suppression with chronic stressors [94]. The persistence of stress is key to understanding stress reactions and their connection to cortisol levels and HPA axis dysfunction, which is linked to various mental disorders such as major depressive disorder [95,96].

Empirical Evidence and Research Findings

Epidemiological Studies on Climate and Endocrine Disorders

Diabetes and Climate-Related Factors

Diabetes and climate change are interconnected global health challenges. Rising temperatures, heat waves, heavy rainfall, and other extreme weather events affect patients with diabetes [31,97]. These individuals are more vulnerable to heat and climate-related stress because of impaired vasodilation and sweating responses, which also increases their susceptibility to complications [10]. Additionally, in their systematic review, Mora et al. showed that 58% of known human pathogenic diseases can be aggravated by climatic hazards, posing a high risk of infection and related complications for diabetic patients with compromised immune systems [98].

Thyroid Function and Environmental Exposures

Recent research highlights the impact of environmental factors on thyroid function, as assessed by TSH, FT4, and FT3 levels. Exposure to high levels of fine particulate matter (PM2.5) is associated with lower FT4 levels and a higher risk of hypothyroxinemia [99-101]. PM2.5, composed of fine particles that carry contaminants including heavy metals, is inhaled and enters the circulation, where it can disrupt thyroid function. Hypothyroxinemia (low FT4) during pregnancy has a negative impact on fetal development. PM2.5 exposure also induces inflammation and oxidative stress, which further disrupt thyroid regulation [102]. In adults, elevated TSH and decreased FT4 levels were associated with long-term exposure to nitric oxide and carbon monoxide [103]. Additionally, outdoor temperature was negatively associated with TSH and FT3 but positively associated with FT4 and the FT4/FT3 ratio. A 10 μg/m³ increase in PM2.5 was linked to a 0.12 pmol/L decrease in FT4 and a 0.07 pmol/L increase in FT3, with a significant negative association between PM2.5 levels and the FT4/FT3 ratio [104].
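The coefficients reported above can be turned into a worked example. The sketch below assumes, as an illustration only, that the association is linear and therefore scales proportionally with the size of the PM2.5 increment; the baseline FT4 and FT3 values and the 25 μg/m³ increment are hypothetical, and the result describes a population-average association rather than a prediction for any individual. It shows why an FT4 decrease combined with an FT3 increase necessarily lowers the FT4/FT3 ratio.

```python
from typing import Tuple

# Per-10 μg/m³ associations reported in the text [104]; population averages, not individual effects
FT4_CHANGE_PER_10 = -0.12  # pmol/L
FT3_CHANGE_PER_10 = 0.07   # pmol/L


def projected_shift(delta_pm25_ug_m3: float) -> Tuple[float, float]:
    """Scale the reported coefficients linearly to another PM2.5 increment (assumed linearity)."""
    scale = delta_pm25_ug_m3 / 10.0
    return FT4_CHANGE_PER_10 * scale, FT3_CHANGE_PER_10 * scale


def projected_ft4_ft3_ratio(ft4: float, ft3: float, delta_pm25_ug_m3: float) -> float:
    """FT4/FT3 ratio after applying the scaled shifts to baseline values (pmol/L)."""
    d_ft4, d_ft3 = projected_shift(delta_pm25_ug_m3)
    return (ft4 + d_ft4) / (ft3 + d_ft3)


if __name__ == "__main__":
    ft4, ft3 = 16.0, 4.8  # hypothetical baseline values, pmol/L
    d_ft4, d_ft3 = projected_shift(25.0)  # hypothetical 25 μg/m³ increase
    print(f"FT4 shift: {d_ft4:+.3f} pmol/L, FT3 shift: {d_ft3:+.3f} pmol/L")
    print(f"FT4/FT3 ratio: {ft4 / ft3:.3f} -> {projected_ft4_ft3_ratio(ft4, ft3, 25.0):.3f}")
```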

PCOS and Dietary Changes

Diet and lifestyle choices are pivotal in the development and management of PCOS. Key factors include addressing insulin resistance, managing weight and body composition, adopting a balanced diet, and considering the potential benefits of a low-glycemic-index (GI) diet. Tailored dietary plans aim to reduce chronic inflammation through increased antioxidant intake [105]. Additionally, Lin et al. (2019) found that exposure to the highest quartile (Q4) of air pollutants, such as PM2.5, NOx, NO2, NO, and SO2, was associated with a higher risk of PCOS [106].

Case Studies in Climate-Impacted Regions

Vulnerable Populations and Healthcare Access

Discussions of climate change must consider the disproportionate vulnerability of, and unequal healthcare access among, different demographic groups. Low-income communities, older adults, children, people with disabilities, and those with pre-existing endocrine disorders face unique climate change challenges. Climate gentrification harms the health of vulnerable residents. Exposure to environmental pollutants and endocrine disorders are both more common in marginalized, climate-vulnerable communities. Addressing disparities in healthcare access among vulnerable populations is therefore essential to reducing the endocrine and other health effects of climate change. Vulnerable populations in climate and health research should not be treated as homogeneous, because they differ in age, health status, geography, and over time. Climate assessments should address both health and inequality.

A systematic overview highlights the unique challenges that climate change and natural disasters pose for women in low- and middle-income countries (LMICs). The review describes a wide range of harmful effects on female reproductive health, including a decrease in the mean age at menarche attributed to climate change. Additionally, some studies found a statistically significant relationship between rising global temperatures and adverse pregnancy outcomes. All of these effects are more pronounced in LMICs, which are more severely affected by climate change [107].

Environmental Justice Considerations

Research on climate and endocrine disease must consider environmental justice, which calls for the involvement of all socioeconomic groups in the development, implementation, and enforcement of environmental laws and policies. Climate-related harm is unequally distributed according to population exposure, sensitivity, and adaptive capacity [1]. Climate risks and environmental degradation disproportionately affect low-income and minority groups, who often face greater exposure to environmental hazards and have fewer resources to adapt or recover. This exacerbates health disparities and highlights the need for inclusive environmental justice policies. Additionally, greater consideration should be given to vulnerable groups within these communities, who are at higher risk from climate-related events [107].

Mitigation and Adaptation Strategies

Climate Change Mitigation Efforts

Mitigation efforts fall into two categories: reducing further greenhouse gas emissions and creating carbon sinks to decrease atmospheric greenhouse gas concentrations. In its Synthesis Report released in March 2023, the Intergovernmental Panel on Climate Change called for global action to limit the worldwide temperature increase to 1.5 °C above preindustrial levels [108]. According to the World Health Organization, a global effort requires political collaboration with medical professionals, which has prompted medical representation at United Nations Framework Convention on Climate Change (UNFCCC) meetings and Conferences of the Parties (COP) [109]. Local and national endocrinology groups have been promoting climate-focused policies, including proposing a climate change agenda for the 2022-2026 Environmental Protection Agency strategic plan in the United States [110].

In addition, practitioners can contribute to the fight against climate change by adopting environmentally friendly practices and promoting treatments that reduce greenhouse gas emissions and improve health [111]. One method is carbon accounting, in which physicians measure the amount of disposable goods their practice discards and take measures to reduce usage [112]. When ordering supplies for medical centers, physicians can also practice environmentally preferable purchasing, such as prioritizing reusable products, which supports companies committed to mitigating the climate impacts of product production [113].
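The carbon-accounting approach described above can be sketched as a simple before-and-after audit of discarded disposables. This is a hypothetical illustration, not the method of [112]: the item categories, counts, and per-item emission factors are placeholders, and a real audit would take emission factors from a life-cycle assessment source.

```python
from collections import Counter
from typing import Dict, List, Optional


def tally_discards(discard_log: List[str]) -> Counter:
    """Count discarded disposable items by category from an audit log of item names."""
    return Counter(discard_log)


def percent_change(before: Counter, after: Counter) -> Dict[str, Optional[float]]:
    """Per-category % change between two audit periods (None if the item was absent before)."""
    changes: Dict[str, Optional[float]] = {}
    for item in sorted(set(before) | set(after)):
        b, a = before[item], after[item]  # Counter returns 0 for missing keys
        changes[item] = None if b == 0 else 100.0 * (a - b) / b
    return changes


def estimated_co2e_kg(counts: Counter, factors_kg_per_item: Dict[str, float]) -> float:
    """Convert item counts to kg CO2-equivalent; raises KeyError if a factor is missing."""
    return sum(n * factors_kg_per_item[item] for item, n in counts.items())


if __name__ == "__main__":
    # Hypothetical audit logs for two periods
    before = tally_discards(["gown"] * 40 + ["glove_pair"] * 200)
    after = tally_discards(["gown"] * 25 + ["glove_pair"] * 150)
    # Placeholder emission factors for illustration only
    factors = {"gown": 0.5, "glove_pair": 0.05}
    print(percent_change(before, after))
    print(estimated_co2e_kg(before, factors), estimated_co2e_kg(after, factors))
```

Keeping raw counts separate from emission factors lets a practice track reductions in usage even before reliable factors are available.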

Personal and Community Health Measures

By recognizing the importance of lifestyle modifications for both endocrine disorders and climate change, individuals can take control of their health while actively contributing to environmental preservation. By walking or cycling instead of using motorized transport, people can both lower their greenhouse gas emissions and increase their calorie expenditure. Regular physical activity is one of the pillars of good health: it can improve brain health, facilitate weight management, lower the risk of various diseases, strengthen bones and muscles, and enhance the capacity to perform daily tasks [114]. Food consumption is directly related to the global epidemics of obesity and diabetes mellitus [115,116]. Better dietary choices can improve outcomes for both individuals and the environment. Diets rich in plant-based foods and lower in meat (flexitarian diets) not only offer health advantages but also reduce greenhouse gas emissions from agriculture [117].

EDCs disrupt hormone biosynthesis, metabolism, or function, leading to deviations from normal homeostatic control or reproductive processes. There is growing evidence that these hazardous chemicals contribute to the increased prevalence of cancers, cardiovascular and respiratory diseases, allergies, neurodevelopmental and congenital defects, and endocrine disorders. The Ostrava Declaration, adopted at the Sixth Ministerial Conference on Environment and Health in 2017, encourages the substitution of such hazardous chemicals and improved availability of information about them [118].

Policy and Advocacy in Medical Research

Government Initiatives and Regulations

Integrating Climate and Health Policies

Governmental and nongovernmental bodies around the world, such as the CDC, WMO, and WHO, as well as scientific publications such as BAMS, increasingly recognize the link between climate change and public health. Integrating climate and health policies is essential to mitigating the adverse effects of climate change, for example by implementing regulations to reduce air pollution, controlling vector-borne diseases, and supporting research into climate-related health impacts [119].

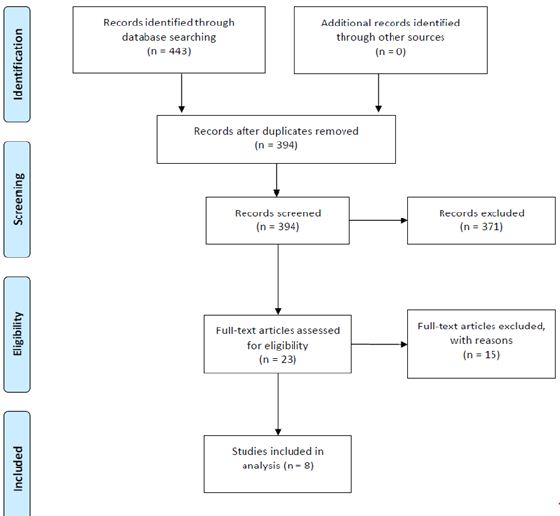

Surveillance and Reporting

A study of adaptation efforts reported by 117 UNFCCC parties established a global baseline for adaptation trends. National Communications data revealed 4,104 distinct initiatives. Despite advanced impact assessments and research, the translation of knowledge into action remains limited. Infrastructure, technology, and innovation dominate the reported adaptations. Common vulnerabilities include floods, droughts, food and water safety, rainfall, diseases, and ecosystem health. However, vulnerable subpopulations are rarely considered in these initiatives [120]. Because greenhouse gases are a major driver of climate change, projects that aim to reduce them are guided by monitoring, evaluation, reporting, and verification (MERV) guidelines, which set out four objectives for accurately determining a project’s net GHG and other benefits [121]:

- Improve data reliability for estimating GHG benefits.

- Enable real-time data to allow adjustments during the project.

- Establish consistency and transparency in reporting across various project types and contributors.

- Boost project credibility with stakeholders.

NGO Efforts in Medical Research

Advocacy for Environmental Health

NGOs act as catalysts in advocating for environmental health policies and practices and have a significant presence in international environmental governance. Although they are recognized as essential contributors to environmental protection, there is a lack of systematic evaluations of their roles, especially in relation to other governing bodies [122].

Promoting Evidence-Based Practices

NGOs contribute significantly to medical research by promoting evidence-based practice within healthcare systems, rather than data analysis alone. They work to ensure that medical interventions and treatments are based on rigorous scientific research, which ultimately leads to better patient outcomes. In addition, NGOs play a vital role in connecting scientific breakthroughs with clinical application, helping ensure that the latest research insights translate into tangible benefits for patients in everyday practice.

Challenges and Public Health Implications

Climate change can have a significant impact on the incidence, diagnosis, and management of endocrine disorders. For instance, exposure to extreme temperatures, air pollution, and other environmental factors can disrupt the endocrine system and lead to hormonal imbalances [123].

The public health implications of endocrine disorders are significant. These conditions can cause long-term disability, reduce the quality of life of affected individuals, and increase the risk of mortality. The burden of endocrine disorders is expected to increase in the coming years due to climate change and other factors [123].

To mitigate the effects of endocrine disorders in the context of climate change, several strategies can be employed. These include reducing exposure to environmental toxins, promoting healthy lifestyles, and investing in research to better understand the link between climate change and endocrine disorders [124]. Additionally, public health campaigns can be launched to raise awareness about the risks of endocrine disorders and the importance of early diagnosis and treatment [125].

Technology can play a crucial role in mitigating the effects of endocrine disorders in the context of climate change. For instance, digital technologies can help monitor and respond to the rise in climate-sensitive infectious diseases, thereby safeguarding the well-being of communities worldwide [125,126]. Additionally, telemedicine can be used to provide remote care to patients with endocrine disorders, reducing the need for in-person visits and improving access to care [127]. However, the use of technology in healthcare also poses several challenges. For example, electronic health records (EHRs) raise privacy concerns and create a risk of data breaches, and the use of artificial intelligence (AI) in healthcare raises ethical concerns, such as the potential for bias and discrimination.

Future Research Directions in Medical Climate Science

Knowledge Gaps and Areas of Uncertainty

Although extensive research, primarily derived from animal studies, has shed light on the potential hazards, there are critical gaps in our knowledge regarding EDC exposure routes and threshold concentrations. Comprehensive assessments of drinking water supplies are essential to bridge these gaps. Additionally, questions persist about the long-term impacts of EDC exposure, especially during crucial developmental stages, highlighting the need for further studies to resolve these uncertainties [69].

Long-Term Health Projections

The sudden surge in metabolic disorders, reproductive abnormalities, endocrine dysfunction, and cancers raises concerns about long-term health projections. With the escalation of these diseases linked to global industrialization and the pervasive presence of EDCs in our environment, understanding the future health landscape is crucial [28]. Long-term health projections must consider the complex interplay of EDCs with human biology, emphasizing the importance of ongoing research to anticipate and mitigate potential health crises in the coming years.

Artificial Intelligence (AI), Technological Advancements, and Innovative Solutions

Machine learning models, such as the optimized Random Forest (RF) models, have emerged as powerful tools to address knowledge gaps. These models, utilizing simple descriptors and data from extensive projects, provide accurate predictions of EDC effects, enabling a better understanding of the risks associated with various substances [128]. Incorporating AI in prioritization and tiered testing workflows not only fills existing knowledge gaps but also propels us toward more efficient and precise screening processes. These advancements also facilitate the development of targeted interventions and policies, ensuring a healthier future for generations to come.
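The step from model predictions to a tiered testing workflow can be sketched as follows. This code does not implement the random forest models described in [128]; it assumes that a trained classifier has already assigned each substance a probability of endocrine activity, and the substance names, scores, tier labels, and thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Prediction:
    substance: str
    p_active: float  # model-predicted probability of endocrine activity, in [0, 1]


def assign_tiers(preds: List[Prediction], high: float = 0.7, low: float = 0.3) -> Dict[str, List[str]]:
    """Rank substances by predicted activity and bin them into testing tiers.

    The thresholds are hypothetical; in practice they would be set from model validation.
    """
    if not 0.0 <= low <= high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low <= high <= 1")
    tiers: Dict[str, List[str]] = {"priority_testing": [], "further_screening": [], "deprioritized": []}
    for p in sorted(preds, key=lambda x: x.p_active, reverse=True):
        if not 0.0 <= p.p_active <= 1.0:
            raise ValueError(f"invalid probability for {p.substance}: {p.p_active}")
        if p.p_active >= high:
            tiers["priority_testing"].append(p.substance)
        elif p.p_active >= low:
            tiers["further_screening"].append(p.substance)
        else:
            tiers["deprioritized"].append(p.substance)
    return tiers


if __name__ == "__main__":
    # Placeholder scores standing in for the output of a trained classifier
    preds = [Prediction("substance_A", 0.92), Prediction("substance_B", 0.15),
             Prediction("substance_C", 0.55), Prediction("substance_D", 0.78)]
    print(assign_tiers(preds))
```

Ranking before binning keeps the highest-scoring substances at the front of each tier, so limited testing capacity can be directed at them first.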

Future Perspective and Conclusion

Further research should focus on identifying relationships between specific endocrine disorders and environmental factors such as climate change and environmental toxins. A thorough understanding of the causative factors will facilitate the development of medical treatments and public health policies, and embracing diverse perspectives can enhance this understanding and foster breakthroughs. In conclusion, urgent action is needed to counter the effects of climate change on endocrine disorders. Public health policies should be implemented to decrease overall exposure to endocrine-disrupting chemicals, for example by testing substances to reduce potential exposure in different environmental settings. Additionally, technology could be integrated into public health and preventive medicine for disease tracking and to increase access to telemedicine regardless of a patient’s physical location. Although promising, the use of technology requires attention to patient confidentiality, including privacy concerns and ethical dilemmas regarding patient data. Effective strategies demand community education, NGO involvement, and continuous monitoring. Addressing challenges, including financial inconsistencies, requires implementing measures that promote resilience.

Declarations

Acknowledgement

The authors would like to acknowledge ACC Medical Student Member Community’s Cardiovascular Research Initiative for organizing this project.

Funding

The authors received no external funding for this project.

Conflicts of Interest

The authors declare no conflicts of interest.

Authorship contributions

Conceptualization of Ideas

Abdulkader Mohammad, Adriana Mares, Aayushi Sood

Data Curation

Abdulkader Mohammad, Adriana Mares, Aayushi Sood

Visualization

Abdulkader Mohammad, Adriana C. Mares, Abd Alrazak Albasis, Mayassa Kiwan, Sara Subbanna

Writing of Initial Draft

Abdulkader Mohammad, Adriana C. Mares, Abd Alrazak Albasis, Mayassa Kiwan, Sara Subbanna, Jannel A. Lawrence, Shariq Ahmad Wani, Bashar Khater, Amir Abdi, Anu Priya, Christianah T. Ademuwagun MS, Kahan Mehta, Nagham Ramadan, Anubhav Sood

Review and Editing

Abdulkader Mohammad, Adriana C. Mares, Harsh Bala Gupta, Aayushi Sood

Declaration of Interest

None.

Disclosures

1) This paper is not under consideration elsewhere. 2) All authors have read and approved the manuscript. 3) All authors take responsibility for all aspects of the reliability and freedom from bias of the data presented and their discussed interpretation. 4) The authors have no conflicts of interest to disclose.

Abbreviations: 25OHD: 25-Hydroxyvitamin D; ACTH: Adrenocorticotropic Hormone; AHA: American Heart Association; AI: Artificial Intelligence; BAMS: Bulletin of the American Meteorological Society; BAT: Brown Adipose Tissue; CDC: Centers for Disease Control and Prevention; COP: Conference of the Parties; COPD: Chronic Obstructive Pulmonary Disease; CTX: C-terminal Telopeptide; EDCs: Endocrine Disrupting Chemicals; EHRs: Electronic Health Records; FPG: Fasting Plasma Glucose; FT3: Free Triiodothyronine; FT4: Free Thyroxine; HbA1c: Hemoglobin A1c; HOMA-IR: Homeostatic Model Assessment of Insulin Resistance; HPA: Hypothalamic-Pituitary-Adrenal; HPT: Hypothalamic-Pituitary-Thyroid; IGF-1: Insulin-like Growth Factor-1; IRS: Insulin Receptor Substrate; LDL: Low-Density Lipoprotein; LMICs: Low- and Middle-Income Countries; MERV: Monitoring, Evaluation, Reporting, and Verification; MRI: Magnetic Resonance Imaging; NO2: Nitrogen Dioxide; NOx: Nitrogen Oxides; OGTT: Oral Glucose Tolerance Test; PCOS: Polycystic Ovary Syndrome; PG: Plasma Glucose; PI3K-Akt: Phosphatidylinositol-3-kinase-Protein Kinase B; PM2.5: Fine Particulate Matter (aerodynamic diameter ≤2.5 μm); SO2: Sulfur Dioxide; T3: Triiodothyronine; T4: Thyroxine; TRH: Thyrotropin-Releasing Hormone; TSH: Thyroid-Stimulating Hormone; UNFCCC: United Nations Framework Convention on Climate Change; WHO: World Health Organization; WMO: World Meteorological Organization

References

- Abbass K, Qasim MZ, Song H, Murshed M, Mahmood H, Younis I. A review of the global climate change impacts, adaptation, and sustainable mitigation measures. Environmental Science and Pollution Research 2022. [crossref]

- United Nations. Causes and Effects of Climate Change.

- Zhao Q, Guo Y, Ye T, Gasparrini A, Tong S, Overcenco A, et al. Global, regional, and national burden of mortality associated with non-optimal ambient temperatures from 2000 to 2019: a three-stage modelling study. Lancet Planet Health 2021. [crossref]

- McMichael AJ. Globalization, climate change, and human health. N Engl J Med 2013.

- Hansen J, Ruedy R, Sato M, Lo K. World of Change: Global Temperatures. Reviews of Geophysics 2020.

- Zhao Q, Yu P, Mahendran R, Huang W, Gao Y, Yang Z, et al. Global climate change and human health: Pathways and possible solutions. Eco-Environment and Health 2022. [crossref]

- Zhao Q, Li S, Coelho MSZS, Saldiva PHN, Hu K, Huxley RR, et al. The association between heatwaves and risk of hospitalization in Brazil: A nationwide time series study between 2000 and 2015. PLoS Med 2019. [crossref]

- Ebi KL, Capon A, Berry P, Broderick C, de Dear R, Havenith G, et al. Hot weather and heat extremes: health risks. Lancet 2021. [crossref]

- Wu Y, Xu R, Wen B, De Sousa Zanotti Stagliorio Coelho M, Saldiva PH, Li S, et al. Temperature variability and asthma hospitalisation in Brazil, 2000-2015: a nationwide case-crossover study. Thorax 2021. [crossref]

- Blauw LL, Aziz NA, Tannemaat MR, Blauw CA, de Craen AJ, Pijl H, et al. Diabetes incidence and glucose intolerance prevalence increase with higher outdoor temperature. BMJ Open Diabetes Res Care 2017. [crossref]

- Kim Y, Kim H, Gasparrini A, Armstrong B, Honda Y, Chung Y, et al. Suicide and Ambient Temperature: A Multi-Country Multi-City Study. Environ Health Perspect 2019. [crossref]

- Khan AM, Finlay JM, Clarke P, Sol K, Melendez R, Judd S, et al. Association between temperature exposure and cognition: a cross-sectional analysis of 20,687 aging adults in the United States. BMC Public Health 2021. [crossref]

- Schwartz J. Who is sensitive to extremes of temperature?: A case-only analysis. Epidemiology 2005. [crossref]

- Winquist A, Grundstein A, Chang HH, Hess J, Sarnat SE. Warm season temperatures and emergency department visits in Atlanta, Georgia. Environ Res 2016.

- Hajat S, Haines A, Sarran C, Sharma A, Bates C, Fleming LE. The effect of ambient temperature on type-2-diabetes: case-crossover analysis of 4+ million GP consultations across England. Environ Health 2017.

- Kapwata T, Gebreslasie MT, Mathee A, Wright CY. Current and Potential Future Seasonal Trends of Indoor Dwelling Temperature and Likely Health Risks in Rural Southern Africa. Int J Environ Res Public Health 2018.

- Xu R, Yu P, Abramson MJ, Johnston FH, Samet JM, Bell ML, et al. Wildfires, Global Climate Change, and Human Health. N Engl J Med 2020.

- Reisen F, Duran SM, Flannigan M, Elliott C, Rideout K. Wildfire smoke and public health risk. Int J Wildland Fire 2015.

- Parks RM, Anderson GB, Nethery RC, Navas-Acien A, Dominici F, Kioumourtzoglou MA. Tropical cyclone exposure is associated with increased hospitalization rates in older adults. Nat Commun 2021. [crossref]

- Gauderman WJ, Urman R, Avol E, Berhane K, McConnell R, Rappaport E, et al. Association of improved air quality with lung development in children. N Engl J Med 2015. [crossref]

- Zhang S, Mwiberi S, Pickford R, Breitner S, Huth C, Koenig W, et al. Longitudinal associations between ambient air pollution and insulin sensitivity: results from the KORA cohort study. Lancet Planet Health 2021. [crossref]

- Burkart K, Causey K, Cohen AJ, Wozniak SS, Salvi DD, Abbafati C, et al. Estimates, trends, and drivers of the global burden of type 2 diabetes attributable to PM2·5 air pollution, 1990-2019: an analysis of data from the Global Burden of Disease Study 2019. Lancet Planet Health 2022. [crossref]

- Wang J, Vanga SK, Saxena R, Orsat V, Raghavan V. Effect of Climate Change on the Yield of Cereal Crops: A Review. Climate 2018.

- Chen XM, Sharma A, Liu H. The Impact of Climate Change on Environmental Sustainability and Human Mortality. Environments 2023.

- McMichael AJ, Friel S, Nyong A, Corvalan C. Global environmental change and health: impacts, inequalities, and the health sector. BMJ 2008. [crossref]

- Kalra S, Kapoor N. Environmental Endocrinology: An Expanding Horizon. 2021. [crossref]

- Darbre PD. Overview of air pollution and endocrine disorders. Int J Gen Med 2018. [crossref]

- Diamanti-Kandarakis E, Bourguignon JP, Giudice LC, Hauser R, Prins GS, Soto AM, et al. Endocrine-Disrupting Chemicals: An Endocrine Society Scientific Statement. Endocr Rev 2009. [crossref]

- Brent GA. Environmental Exposures and Autoimmune Thyroid Disease. Thyroid 2010. [crossref]

- Wright JT, Crall JJ, Fontana M, Gillette EJ, Nový BB, Dhar V, et al. Evidence-based clinical practice guideline for the use of pit-and-fissure sealants: A report of the American Dental Association and the American Academy of Pediatric Dentistry. Journal of the American Dental Association 2016. [crossref]

- Ratter-Rieck JM, Roden M, Herder C. Diabetes and climate change: current evidence and implications for people with diabetes, clinicians and policy stakeholders. Diabetologia 2023. [crossref]

- Yang J, Zhou M, Zhang F, Yin P, Wang B, Guo Y, et al. Diabetes mortality burden attributable to short-term effect of PM10 in China. Environmental Science and Pollution Research 2020. [crossref]

- Romanello M, McGushin A, Di Napoli C, Drummond P, Hughes N, Jamart L, et al. The 2021 report of the Lancet Countdown on health and climate change: code red for a healthy future. Lancet 2021. [crossref]

- Hiller-Sturmhöfel S, Bartke A. The Endocrine System: An Overview. Alcohol Health Res World 1998. [crossref]

- Hoermann R, Pekker MJ, Midgley JEM, Larisch R, Dietrich JW. Principles of Endocrine Regulation: Reconciling Tensions Between Robustness in Performance and Adaptation to Change. Front Endocrinol (Lausanne) 2022. [crossref]

- Peters A, Conrad M, Hubold C, Schweiger U, Fischer B, Fehm HL. The principle of homeostasis in the hypothalamus-pituitary-adrenal system: new insight from positive feedback. Am J Physiol Regul Integr Comp Physiol 2007. [crossref]

- American Diabetes Association. Diagnosis and classification of diabetes mellitus. Diabetes Care 2009. [crossref]

- Gessl A, Lemmens-Gruber R, Kautzky-Willer A. Thyroid disorders. Handb Exp Pharmacol 2012. [crossref]

- De Leo S, Lee SY, Braverman LE. Hyperthyroidism. Lancet 2016.

- De Leo S, Lee SY, Braverman LE. Hyperthyroidism. Lancet 2016.

- Aoki Y, Belin RM, Clickner R, Jeffries R, Phillips L, Mahaffey KR. Serum TSH and total T4 in the United States population and their association with participant characteristics: National Health and Nutrition Examination Survey (NHANES 1999-2002). Thyroid 2007. [crossref]

- McLeod DSA, Caturegli P, Cooper DS, Matos PG, Hutfless S. Variation in rates of autoimmune thyroid disease by race/ethnicity in US military personnel. JAMA 2014. [crossref]

- Chaker L, Bianco AC, Jonklaas J, Peeters RP. Hypothyroidism. Lancet 2017.

- Wiersinga WM. Myxedema and Coma (Severe Hypothyroidism). Endotext 2018.

- Carlé A, Bülow Pedersen I, Knudsen N, Perrild H, Ovesen L, Laurberg P. Gender differences in symptoms of hypothyroidism: a population-based DanThyr study. Clin Endocrinol (Oxf) 2015. [crossref]

- Teede H, Deeks A, Moran L. Polycystic ovary syndrome: a complex condition with psychological, reproductive and metabolic manifestations that impacts on health across the lifespan. BMC Med 2010. [crossref]

- Rasquin LI, Anastasopoulou C, Mayrin JV. Polycystic Ovarian Disease. Encyclopedia of Genetics, Genomics, Proteomics and Informatics 2022.

- Hirsch D, Shimon I, Manisterski Y, Aviran-Barak N, Amitai O, Nadler V, et al. Cushing’s syndrome: comparison between Cushing’s disease and adrenal Cushing’s. Endocrine 2018. [crossref]

- Gutiérrez J, Latorre G, Campuzano G. Cushing Syndrome. Medicina & Laboratorio 2023. [crossref]

- Bornstein SR, Allolio B, Arlt W, Barthel A, Don-Wauchope A, Hammer GD, et al. Diagnosis and Treatment of Primary Adrenal Insufficiency: An Endocrine Society Clinical Practice Guideline. J Clin Endocrinol Metab 2016. [crossref]

- Munir S, Rodriguez BSQ, Waseem M. Addison Disease. StatPearls 2023. [crossref]

- Reisch N, Peczkowska M, Januszewicz A, Neumann HPH. Pheochromocytoma: presentation, diagnosis and treatment. J Hypertens 2006. [crossref]

- Farrugia FA, Charalampopoulos A. Pheochromocytoma. Endocr Regul 2019. [crossref]

- Gawande R, Castaneda R, Daldrup-Link HE. Adrenal Hemorrhage. Pearls and Pitfalls in Pediatric Imaging: Variants and Other Difficult Diagnoses 2023

- Funder JW, Carey RM, Mantero F, Murad MH, Reincke M, Shibata H, et al. The Management of Primary Aldosteronism: Case Detection, Diagnosis, and Treatment: An Endocrine Society Clinical Practice Guideline. J Clin Endocrinol Metab 2016. [crossref]

- Cobb A, Aeddula NR. Primary Hyperaldosteronism. StatPearls 2023. [crossref]

- Diabetes, Heart Disease, & Stroke – NIDDK. [crossref]

- Leon BM, Maddox TM. Diabetes and cardiovascular disease: Epidemiology, biological mechanisms, treatment recommendations and future research. World J Diabetes 2015. [crossref]

- Diabetes and Your Heart | CDC.

- Joseph JJ, Deedwania P, Acharya T, Aguilar D, Bhatt DL, Chyun DA, et al. Comprehensive Management of Cardiovascular Risk Factors for Adults With Type 2 Diabetes: A Scientific Statement From the American Heart Association. Circulation 2022. [crossref]

- Creager MA, Lüscher TF, Cosentino F, Beckman JA. Diabetes and vascular disease: pathophysiology, clinical consequences, and medical therapy: Part I. Circulation 2003. [crossref]

- Sonino N, Tomba E, Fava GA. Psychosocial approach to endocrine disease. Adv Psychosom Med 2007. [crossref]

- Sonino N, Fava GA. Psychological aspects of endocrine disease. Clin Endocrinol (Oxf) 1998

- Sonino N, Fava GA, Fallo F, Boscaro M. Psychological distress and quality of life in endocrine disease. Psychother Psychosom 1990. [crossref]

- Salvador J, Gutierrez G, Llavero M, Gargallo J, Escalada J, López J. Endocrine Disorders and Psychiatric Manifestations. 2019

- Guarnotta V, Amodei R, Frasca F, Aversa A, Giordano C. Impact of Chemical Endocrine Disruptors and Hormone Modulators on the Endocrine System. Int J Mol Sci 2022. [crossref]

- Yilmaz B, Terekeci H, Sandal S, Kelestimur F. Endocrine disrupting chemicals: exposure, effects on human health, mechanism of action, models for testing and strategies for prevention. Rev Endocr Metab Disord 2020. [crossref]

- Zoeller TR. Environmental chemicals targeting thyroid. Hormones (Athens) 2010. [crossref]

- Kumar M, Sarma DK, Shubham S, Kumawat M, Verma V, Prakash A, et al. Environmental Endocrine-Disrupting Chemical Exposure: Role in Non-Communicable Diseases. Front Public Health 2020. [crossref]

- Le Magueresse-Battistoni B, Labaronne E, Vidal H, Naville D. Endocrine disrupting chemicals in mixture and obesity, diabetes and related metabolic disorders. World J Biol Chem 2017. [crossref]

- Ahn C, Jeung EB. Endocrine-Disrupting Chemicals and Disease Endpoints. Int J Mol Sci 2023. [crossref]

- Schug TT, Janesick A, Blumberg B, Heindel JJ. Endocrine disrupting chemicals and disease susceptibility. J Steroid Biochem Mol Biol 2011. [crossref]

- Chung BY, Choi SM, Roh TH, Lim DS, Ahn MY, Kim YJ, et al. Risk assessment of phthalates in pharmaceuticals. J Toxicol Environ Health A 2019. [crossref]

- Meeker JD, Calafat AM, Hauser R. Di(2-ethylhexyl) phthalate metabolites may alter thyroid hormone levels in men. Environ Health Perspect 2007. [crossref]

- Greer MA, Goodman G, Pleus RC, Greer SE. Health effects assessment for environmental perchlorate contamination: the dose response for inhibition of thyroidal radioiodine uptake in humans. Environ Health Perspect 2002. [crossref]

- Taylor PN, Okosieme OE, Murphy R, Hales C, Chiusano E, Maina A, et al. Maternal perchlorate levels in women with borderline thyroid function during pregnancy and the cognitive development of their offspring: data from the Controlled Antenatal Thyroid Study. J Clin Endocrinol Metab 2014. [crossref]

- Hampl R, Kubátová J, Stárka L. Steroids and endocrine disruptors–History, recent state of art and open questions. J Steroid Biochem Mol Biol 2016. [crossref]

- Sargis RM. Metabolic disruption in context: Clinical avenues for synergistic perturbations in energy homeostasis by endocrine disrupting chemicals. Endocr Disruptors (Austin) 2015.

- Bartone PT, Krueger GP, Bartone JV. Individual Differences in Adaptability to Isolated, Confined, and Extreme Environments. Aerosp Med Hum Perform 2018. [crossref]

- Ilardo M, Nielsen R. Human adaptation to extreme environmental conditions. Curr Opin Genet Dev 2018. [crossref]

- Dhabhar FS. The short-term stress response – Mother nature’s mechanism for enhancing protection and performance under conditions of threat, challenge, and opportunity. Front Neuroendocrinol 2018. [crossref]

- Mazzeo RS, Wolfel EE, Butterfield GE, Reeves JT. Sympathetic response during 21 days at high altitude (4,300 m) as determined by urinary and arterial catecholamines. Metabolism 1994.

- Woods DR, O’Hara JP, Boos CJ, Hodkinson PD, Tsakirides C, Hill NE, et al. Markers of physiological stress during exercise under conditions of normoxia, normobaric hypoxia, hypobaric hypoxia, and genuine high altitude. Eur J Appl Physiol 2017. [crossref]

- Yilmaz M, Suleyman B, Mammadov R, Altuner D, Bulut S, Suleyman H. The Role of Adrenaline, Noradrenaline, and Cortisol in the Pathogenesis of the Analgesic Potency, Duration, and Neurotoxic Effect of Meperidine. Medicina (B Aires) 2023. [crossref]

- Morena M, Roozendaal B, Trezza V, Ratano P, Peloso A, Hauer D, et al. Endogenous cannabinoid release within prefrontal-limbic pathways affects memory consolidation of emotional training. Proc Natl Acad Sci U S A 2014. [crossref]

- Liu C, Ying Z, Harkema J, Sun Q, Rajagopalan S. Epidemiological and experimental links between air pollution and type 2 diabetes. Toxicol Pathol 2013. [crossref]

- Vangoitsenhoven R, Rondas D, Crèvecoeur I, D’Hertog W, Baatsen P, Masini M, et al. Foodborne Cereulide Causes Beta-Cell Dysfunction and Apoptosis. PLoS One 2014. [crossref]

- Aggarwal A, Upadhyay R. Heat stress and animal productivity. Heat Stress and Animal Productivity 2013

- Azzam A. Is the world converging to a ‘Western diet’? Public Health Nutr 2021

- Mima M, Greenwald D, Ohlander S. Environmental Toxins and Male Fertility. Curr Urol Rep 2018. [crossref]

- Mendola P, Messer LC, Rappazzo K. Science linking environmental contaminant exposures with fertility and reproductive health impacts in the adult female. Fertil Steril 2008. [crossref]

- Marieb EN, Hoehn KN. Human Anatomy & Physiology. Pearson Education 2019

- Russell G, Lightman S. The human stress response. Nat Rev Endocrinol 2019

- Nadolnik LI. Stress and the thyroid gland. Biochem Mosc Suppl B Biomed Chem 2011

- Staufenbiel SM, Penninx BWJH, Spijker AT, Elzinga BM, van Rossum EFC. Hair cortisol, stress exposure, and mental health in humans: a systematic review. Psychoneuroendocrinology 2013. [crossref]

- Kennis M, Gerritsen L, van Dalen M, Williams A, Cuijpers P, Bockting C. Prospective biomarkers of major depressive disorder: a systematic review and meta-analysis. Mol Psychiatry 2020. [crossref]

- Diabetes and climate change: breaking the vicious cycle. BMC Med 2023. [crossref]

- Mora C, McKenzie T, Gaw IM, Dean JM, von Hammerstein H, Knudson TA, et al. Over half of known human pathogenic diseases can be aggravated by climate change. Nat Clim Chang 2022. [crossref]

- Corrales Vargas A, Peñaloza Castañeda J, Rietz Liljedahl E, Mora AM, Menezes-Filho JA, Smith DR, et al. Exposure to common-use pesticides, manganese, lead, and thyroid function among pregnant women from the Infants’ Environmental Health (ISA) study, Costa Rica. Science of The Total Environment 2022. [crossref]

- Ghassabian A, Pierotti L, Basterrechea M, Chatzi L, Estarlich M, Fernández-Somoano A, et al. Association of Exposure to Ambient Air Pollution With Thyroid Function During Pregnancy. JAMA Netw Open 2019. [crossref]

- Zhao Y, Cao Z, Li H, Su X, Yang Y, Liu C, et al. Air pollution exposure in association with maternal thyroid function during early pregnancy. J Hazard Mater 2019. [crossref]

- Zhou Y, Guo J, Wang Z, Zhang B, Sun Z, Yun X, et al. Levels and inhalation health risk of neonicotinoid insecticides in fine particulate matter (PM2.5) in urban and rural areas of China. Environ Int 2020. [crossref]

- Kim HJ, Kwon H, Yun JM, Cho B, Park JH. Association Between Exposure to Ambient Air Pollution and Thyroid Function in Korean Adults. J Clin Endocrinol Metab 2020. [crossref]

- Zeng Y, He H, Wang X, Zhang M, An Z. Climate and air pollution exposure are associated with thyroid function parameters: a retrospective cross-sectional study. J Endocrinol Invest 2021. [crossref]

- Preda C, Branisteanu D, Fica S, Parker J. Pathophysiological Effects of Contemporary Lifestyle on Evolutionary-Conserved Survival Mechanisms in Polycystic Ovary Syndrome. Life 2023. [crossref]

- Lin SY, Yang YC, Chang CYY, Lin CC, Hsu WH, Ju SW, et al. Risk of Polycystic Ovary Syndrome in Women Exposed to Fine Air Pollutants and Acidic Gases: A Nationwide Cohort Analysis. International Journal of Environmental Research and Public Health 2019. [crossref]

- Afzal F, Das A, Chatterjee S. Drawing the Linkage Between Women’s Reproductive Health, Climate Change, Natural Disaster, and Climate-driven Migration: Focusing on Low- and Middle-income Countries – A Systematic Overview. Indian J Community Med 2024. [crossref]

- Climate and Health Program | CDC

- Compendium of WHO and other UN guidance on health and environment – World | ReliefWeb.

- Climate Change and Health | Endocrine Society

- Bikomeye JC, Rublee CS, Beyer KMM. Positive Externalities of Climate Change Mitigation and Adaptation for Human Health: A Review and Conceptual Framework for Public Health Research. International Journal of Environmental Research and Public Health 2021. [crossref]

- Kwakye G, Brat GA, Makary MA. Green surgical practices for health care. Arch Surg 2011. [crossref]

- Soares AL, Buttigieg SC, Bak B, McFadden S, Hughes C, McClure P, et al. A Review of the Applicability of Current Green Practices in Healthcare Facilities. Int J Health Policy Manag 2023. [crossref]

- Benefits of Physical Activity | Physical Activity | CDC.

- Food and Diet | Obesity Prevention Source | Harvard T.H. Chan School of Public Health.

- Sami W, Ansari T, Butt NS, Hamid MRA. Effect of diet on type 2 diabetes mellitus: A review. Int J Health Sci (Qassim) 2017.

- Clark MA, Domingo NGG, Colgan K, Thakrar SK, Tilman D, Lynch J, et al. Global food system emissions could preclude achieving the 1.5° and 2°C climate change targets. Science 2020. [crossref]

- Chemical safety EURO.

- Fox M, Zuidema C, Bauman B, Burke T, Sheehan M. Integrating Public Health into Climate Change Policy and Planning: State of Practice Update. Int J Environ Res Public Health 2019. [crossref]

- Lesnikowski AC, Ford JD, Berrang-Ford L, Barrera M, Heymann J. How are we adapting to climate change? A global assessment. Mitig Adapt Strateg Glob Chang 2015

- Vine E, Sathaye J. The monitoring, evaluation, reporting, and verification of climate change mitigation projects: Discussion of issues and methodologies and review of existing protocols and guidelines. 1997

- Jasanoff S. NGOs and the environment: From knowledge to action. Third World Q 1997

- Crafa A, Calogero AE, Cannarella R, Mongioi’ LM, Condorelli RA, Greco EA, et al. The Burden of Hormonal Disorders: A Worldwide Overview With a Particular Look in Italy. Front Endocrinol (Lausanne) 2021. [crossref]

- Climate Change and Health | Endocrine Society. [cited 2024 Feb 7]

- Climate change. [cited 2024 Feb 7].

- Colón-González FJ, Bastos LS, Hofmann B, Hopkin A, Harpham Q, Crocker T, et al. Probabilistic seasonal dengue forecasting in Vietnam: A modelling study using superensembles. PLoS Med 2021. [crossref]

- Health Technology and Climate Change | Hoover Institution Health Technology and Climate Change. [cited 2024 Feb 7].

- Collins SP, Barton-Maclaren TS. Novel machine learning models to predict endocrine disruption activity for high-throughput chemical screening. Frontiers in toxicology 2022. [crossref]