DOI: 10.31038/JDMR.2024714

Abstract

Introduction: In the United States alone, more than half a million new dental implants are placed annually, including patients in the Veterans Health Administration (VHA). Despite their widespread adoption, this treatment option is not devoid of complications and failures. Reported failure rates are 5-10% within the first decade after implantation, with a notable increase beyond this period. While implant failure has been extensively studied in the broader context, research specific to veterans is limited.

Methods: This retrospective study was designed to investigate the Early and Late causes of dental implant failures within the Salt Lake City VHA Dental Clinic (SL VA) and to enhance clinical management of dental implants post-implantation.

Results: This case series comprised 60 failed implants from 49 patients collected over a period of 3 years. Statistical analysis was conducted on risk factors associated with implant failures, patient demographics, and clinical characteristics. In this cohort of predominantly older males with a military background, infection (OR 0.09, 95% CI [0.01,0.78]; p=0.03) and age >70 years at the time of implantation (OR 0.11, 95% CI [0.02,0.65]; p=0.02) were associated with Early rather than Late failure (roughly 90% lower odds of Late failure), while Late failures were associated with progressive bone loss (OR 7.15, 95% CI [1.08,47.17]; p=0.041); clinical history and histology supported the statistical findings.

Conclusion: Understanding the unique factors that contribute to Early and Late failures may improve initial integration rates and, ultimately, implant longevity.

Keywords

Dental implants, Mouth, Edentulous, Veteran, Peri-implantitis, Dental care, Veterans’ electronic health record

Introduction

Dental implants are a routine treatment for addressing partial and complete edentulism at the Salt Lake City, Utah (SL) Department of Veterans Affairs (VA) Dental Clinic. Thousands of dental implants have been placed in patients at the SL VA, with both success and failure. Failures are often divided into two types based on when they occurred: 1) Early or 2) Late. Our clinical team previously evaluated the spectrum of failure within the national and local VA cohorts using the Veterans’ health record, which revealed that Utah’s failure rate was 6.7% over ~20 years. However, that study did not consider the timing of the failures, Early or Late, in the analysis. Thus, there is a need to understand the factors that contribute to both types of dental implant failure (DIF) to enhance the quality of patient care, which serves as the rationale for this study.

Although osseointegrated implants are a success story with a ten-year survival rate of 90-95%, significant failures do occur [1-4]. As implants have become mainstream, complications have become increasingly apparent. Difficulty selecting appropriate treatment strategies is compounded by the commercialization of implant dentistry. When financial interests take precedence over best practices, neither patients nor providers derive long-term benefits. For instance, the survival rate of implants diminishes to 73% when managed by inexperienced practitioners.

Classic literature defined implant success as 1-1.5 mm of bone loss of an integrated implant in the first year and 0.2 mm bone loss annually after functional loading, without mobility, pain, or infection [5,6]. A more recent study stated that surviving implants would lose 0-0.2 mm of marginal bone within the first year with no pain on function, mobility, or history of exudate, and bone loss <1/2 of the implant body [7]. The same study describes implant failure as pain with function, mobility, radiographic bone loss >1/2 implant length, uncontrolled exudate, or no longer in the mouth [7]. There are three major challenges in implant dentistry: 1) the lack of initial osseointegration, 2) infection, and 3) peri-implant bone loss over time. Early failures have often been seen in those that have not yet been restored. Late failures refer to those implants that initially integrated, usually have been restored (in function), and failed over time [8,9].

A comprehensive understanding of the intricacies underlying implant failure and its associated conditions, including peri-implantitis and peri-implant mucositis, holds significant importance for enhancing the effectiveness of treatment and ultimately delivering improved outcomes. Furthermore, maintaining both systemic and oral health is important since there is a postulated direct relationship between periodontitis and systemic diseases such as diabetes, heart disease, rheumatoid arthritis, and cancer [10,11]. Because implant success is multifactorial, the quest for effective strategies in the management of peri-implant disease poses a formidable challenge.

This study was initiated to understand the causation of DIFs within our local clinic. We hypothesized that Early DIFs would be associated with inadequate healing (osseointegration), while Late failures are linked to systemic factors contributing to peri-implant bone tissue inability to maintain osseointegration. Enhancing our understanding of the peri-implant disease process will improve patient treatment protocols within our clinic and beyond.

Methods

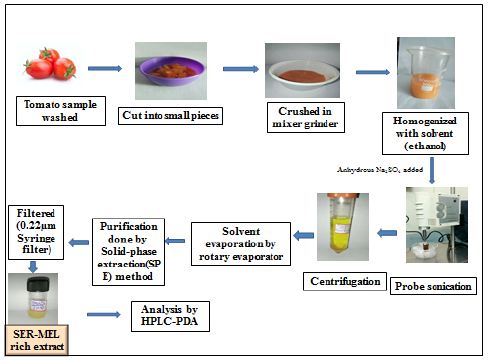

Sample Collection

The study was reviewed by the Institutional Review Boards of the University of Utah and the Department of Veterans Affairs Salt Lake City Hospital system, and deemed exempt (IRB# 00138581). Dental implants identified as hopeless by primary dental providers were collected after removal. The process adhered to standard extraction procedures at the SL VA. A total of 60 discarded implants were collected from 49 patients between August 2019 and March 2022.

Following removal, the implants were preserved in 10% buffered formalin (Azer Scientific™, Morgantown, PA). Samples were de-identified and confidentiality was maintained. After 3 exchanges of formalin for fixation at 3 days apart, the implants underwent dehydration through progressive grades of ethanol from 50 to 100% and finally with xylene using a tissue dehydrator (Leica TP 1020, Leica Biosystems, Deer Park, IL). After dehydration, samples were placed in acetone and slowly dried to avoid extensive tissue sticking/fusing. The processed samples were subjected to histologic evaluation and chart review.

Microscopic Analyses

Each implant underwent initial assessment utilizing light microscopy (Keyence Digital Microscope; VHX-6000, Itasca, IL) and then comprehensive imaging using scanning electron microscopy (SEM; Nano-Eye SEM with fitted backscatter detector, SNE-Alpha, Lafayette, CA). Elemental analysis was done using the University of Utah NanoFab Core Facility FEI Quanta SEM (600F, Hillsboro, OR) with an attached energy dispersive spectroscopic detector (EDS). Descriptive observations were documented. Surface irregularities of the implants were recorded, along with the identification of various tissue types using the backscattered electron (BSE) images. Nobel Biocare (Brea, California) and Zimmer Biomet (Warsaw, IN) provided new dental implants to compare surface topography.

Clinical and Radiographic Data

The principal investigator conducted a systematic chart review utilizing a standardized evaluation form for consistency. De-identified data, encompassing procedural notes, radiographs, patient demographics, and dental and medical histories, were assessed.

Review of procedure notes on the day of removal focused on the diagnosis and other relevant information (e.g., purulence, pain). Pre-existing comorbidities, prescription utilization, recreational drug use, and select bloodwork (Hemoglobin A1C) were tallied and recorded, with specific attention given to prevalent conditions like type 2 diabetes (DM2) and post-traumatic stress disorder (PTSD).

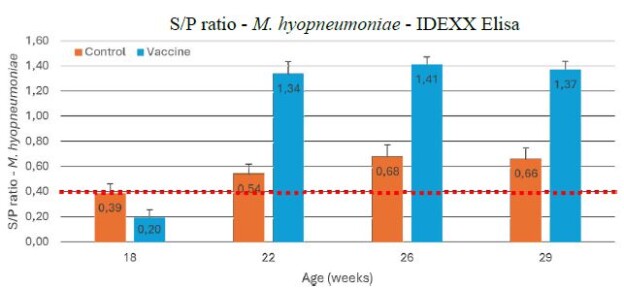

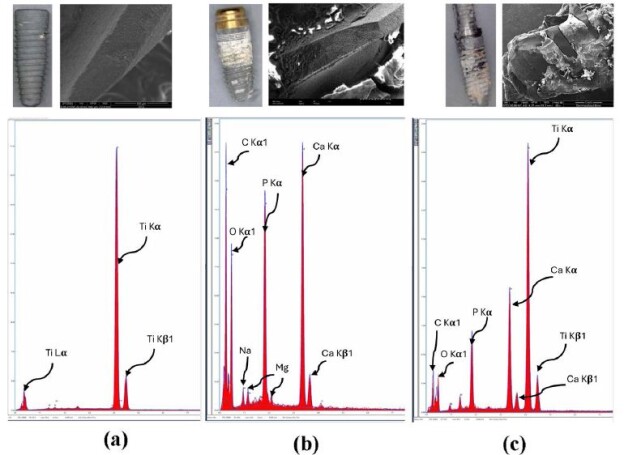

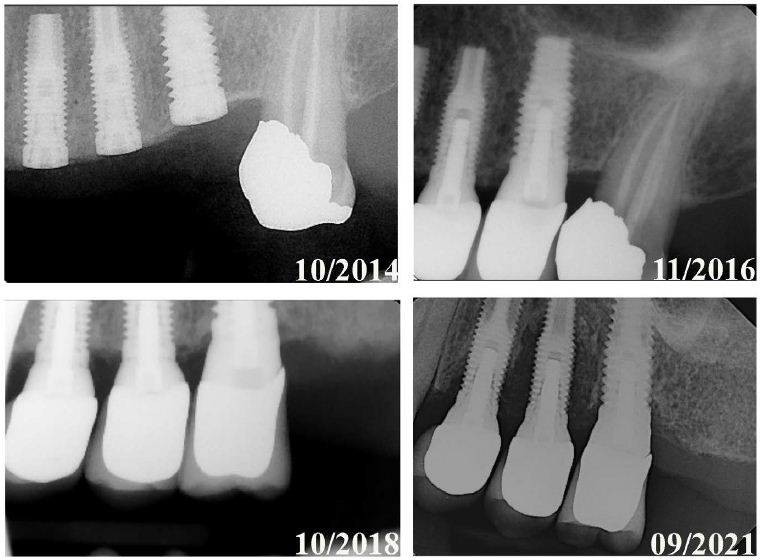

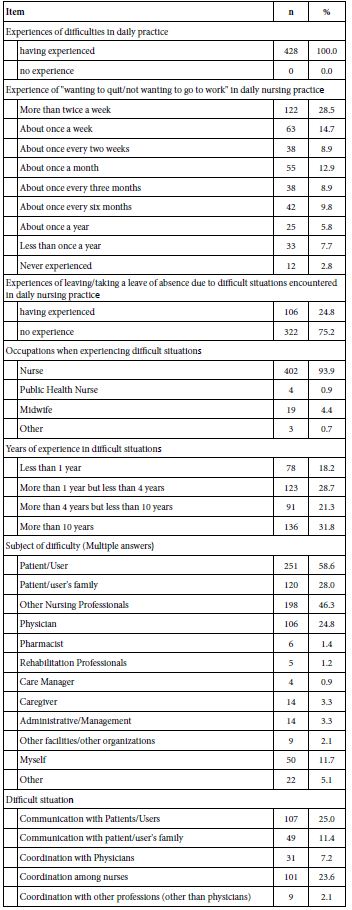

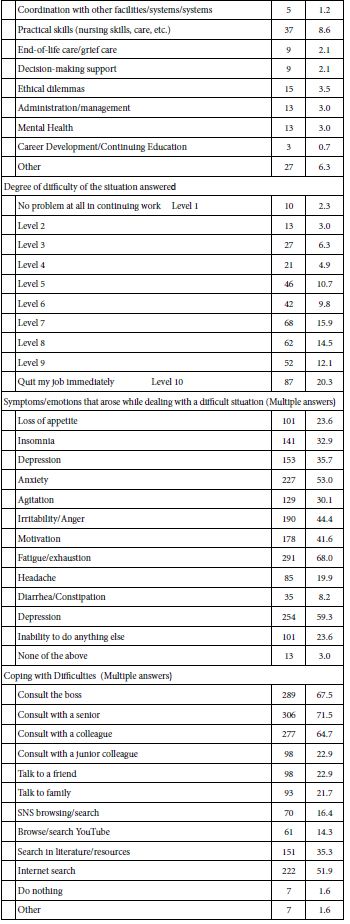

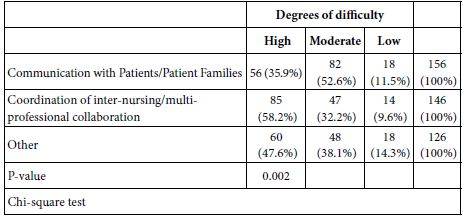

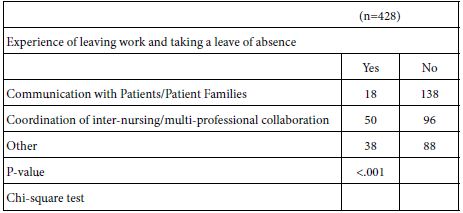

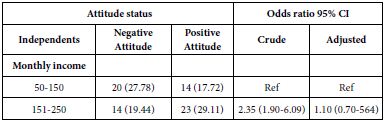

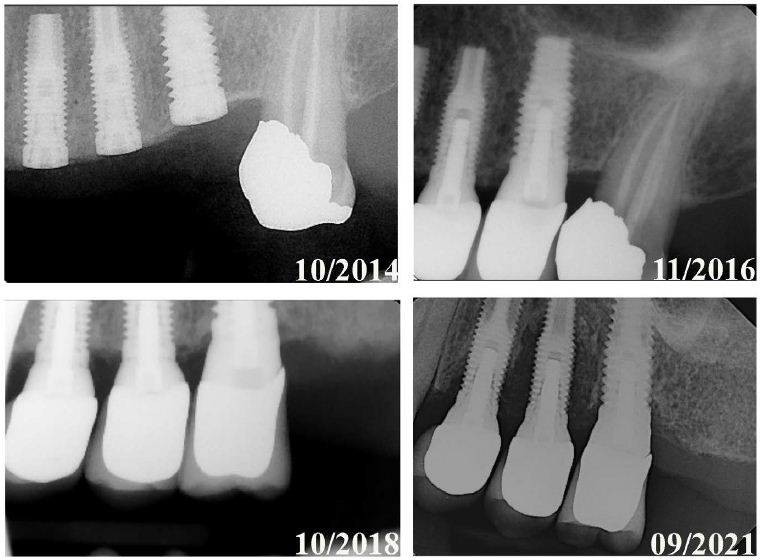

All implants included in the study had periapical radiographs available to the investigator. These were assessed for bone loss using Planmeca Romexis 5.2.1.R software (Planmeca USA INC., Charlotte, NC), categorized as percentage clusters (0-25, >25-50, >50-75, or >75-100). The 0-25% was defined as no bone loss. If preoperative radiographs were unavailable for comparison, the assumption was made that the implant platform was placed relative to the alveolar crest, per manufacturer instructions. Bone loss was further described by bone loss patterns: vertical or cupped, horizontal, peri-implant radiolucent line/halo, or a combination (Figure 1). Each implant was tallied in its respective category of bone loss (Figure 1) based on the predominant bone loss pattern (Figure 1d).

Figure 1: Radiographic images of bone loss patterns in dental implant failures. A representative set of radiographs showing the dental implant failure bone loss patterns. (a) Vertical or cupping, (b) Horizontal, (c) Peri-implant radiolucent line/halo and (d) horizontal with vertical component [classified by major (horizontal) pattern].
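The percentage clusters described above can be expressed as a small lookup. The following is a minimal sketch, not the authors' procedure: the function names are hypothetical, and the percentage is assumed to be the distance from the implant platform to the first bone contact divided by the implant length, which is one reading of the measurement described in the note to Table 1.

```python
def percent_bone_loss(exposed_mm, implant_length_mm):
    """Assumed definition: platform-to-bone distance as a share of implant length."""
    if implant_length_mm <= 0:
        raise ValueError("implant_length_mm must be positive")
    if not 0 <= exposed_mm <= implant_length_mm:
        raise ValueError("exposed_mm must lie between 0 and the implant length")
    return 100.0 * exposed_mm / implant_length_mm


def bone_loss_category(percent_loss):
    """Map a bone-loss percentage to the four clusters used in the study."""
    if percent_loss is None:
        # No radiograph available for the implant.
        return "Unknown"
    if not 0 <= percent_loss <= 100:
        raise ValueError("percent_loss must be between 0 and 100")
    if percent_loss <= 25:
        return "0-25% (no bone loss)"
    if percent_loss <= 50:
        return ">25-50%"
    if percent_loss <= 75:
        return ">50-75%"
    return ">75-100%"


if __name__ == "__main__":
    # Hypothetical example: 4 mm of exposed threads on a 13 mm implant.
    print(bone_loss_category(percent_bone_loss(4.0, 13.0)))
```

Keeping the boundary values (25, 50, 75) in the lower cluster mirrors the ">25-50" style of labels used in the text.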

Statistical Analysis

For descriptive data, patient characteristics were summarized using mean and standard deviation (SD) or median and interquartile range (IQR) for continuous variables and numbers and percentages for categorical variables. Participants were categorized based on Early (≤ 6 months) or Late implant failure (> 6 months). Group comparisons were performed using t-tests, Chi-square tests, or Fisher’s exact tests as appropriate based on the variables being evaluated.

A univariable logistic model was utilized, encompassing all failed implants while clustering on patient ID to address within-patient correlation, to evaluate the association between potential risk factors and Late versus Early failure. A multivariable logistic model was then used to identify associations, adjusting for significant variables identified in the univariable model. The findings were reported as odds ratios, 95% confidence intervals (CI), and p-values. All statistical analyses were executed using STATA MP18, with significance determined at p < 0.05, and all tests were two-sided.

Results

Patients with incomplete clinically relevant data, including implant placement date, were excluded from the study. Consequently, four patients (comprising seven implants) were removed from the data set. This resulted in a final cohort of 45 patients with 53 implants. Among these, two implants were lost spontaneously, while the remaining 51 implants were extracted at the SL VA Dental Clinic.

Overall, the patients evaluated in this series had significant oral, systemic, and/or mental health concerns. A history of illicit drug use (methamphetamine, cocaine, marijuana, heroin), chronic opioid use, alcohol use, tobacco use, and polypharmacy were prevalent findings, along with diagnoses of serious co-morbid conditions. Thirty-nine out of the forty-five patients (86.7%) had four or more serious health problems (e.g., coronary artery disease, kidney disease, diabetes, depression).

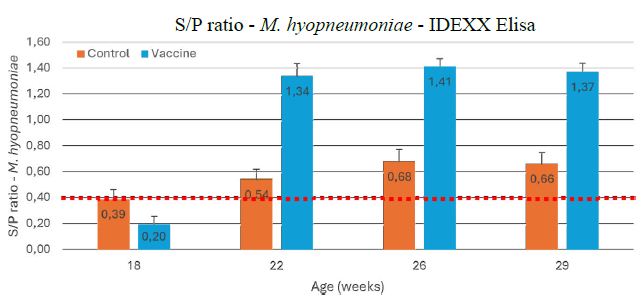

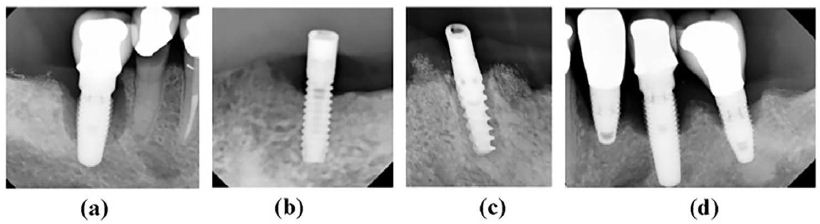

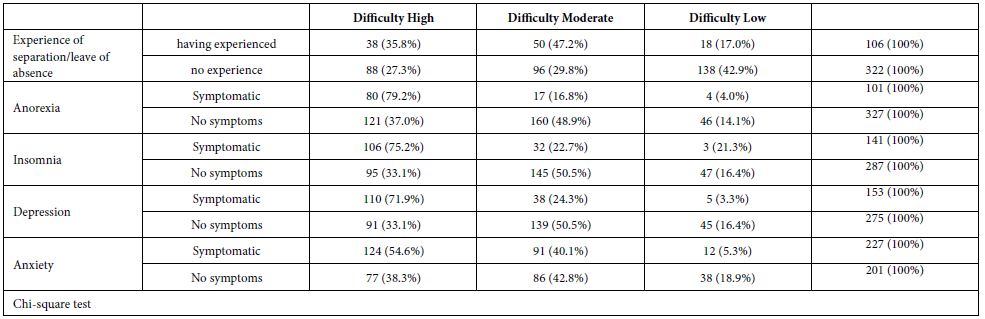

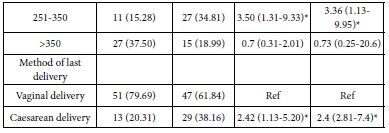

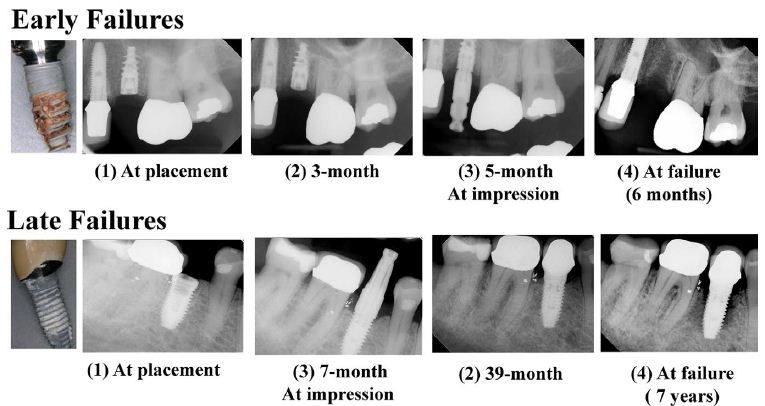

Based on the clinical notes, infection was defined as peri-implant purulence, swelling, and pain. In total, 9 of 45 (20%) patients met the criteria. The Late DIF group exhibited higher percentages of bone loss at the time of implant extraction than the Early DIF. Graded bone loss percentages are given in Table 1. Except for a few outliers, our data revealed that the radiographic bone loss categories of vertical/cupping and horizontal were more common in Late DIFs (Figure 1a), while a halo pattern (Figure 1c) or no visible bone loss was more prevalent in Early DIFs. Serial radiographs of this process for both Early and Late categories are shown in Figure 2.

Table 1: Bone loss associated with each implant failure

| | Total Patients n=45 | Late Failures (Post-6 months) n=31 | Early Failures (Prior to 6 months) n=14 |
|---|---|---|---|
| 0-25% Bone loss (No Bone Loss) | 7 | 4 (57.1%) | 3 (42.9%) |
| >25% to 50% Bone loss | 16 | 13 (81.2%) | 3 (18.8%) |
| >50% to 75% Bone loss | 7 | 6 (85.7%) | 1 (14.3%) |
| >75% to 100% Bone loss | 12 | 8 (66.7%) | 4 (33.3%) |
| Unknown (No X-ray available) | 3 | 0 (0.0%) | 3 (100.0%) |

Percentages of peri-implant bone loss assessed using periapical radiographs. All specimens were bone-level implants, and a measurement was made from the implant platform to the site of integration toward the apex.

Figure 2: Radiographic images of Early and Late Dental Implant Failures over time. A representative set of radiographs showing the progression of Early (top row) and Late (bottom row) implant failures over time. Note: The Early failure occurred at 6 months post-implantation. The Late failure occurred 7 years after implantation.

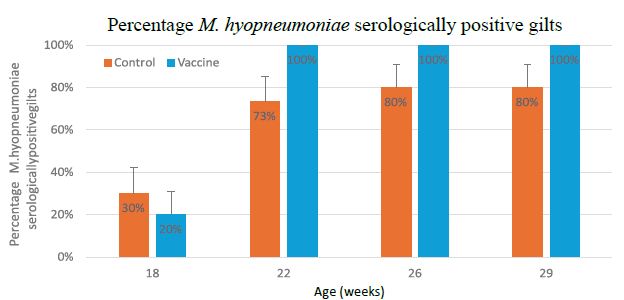

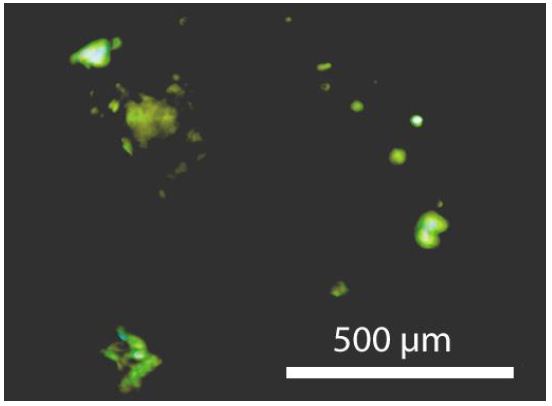

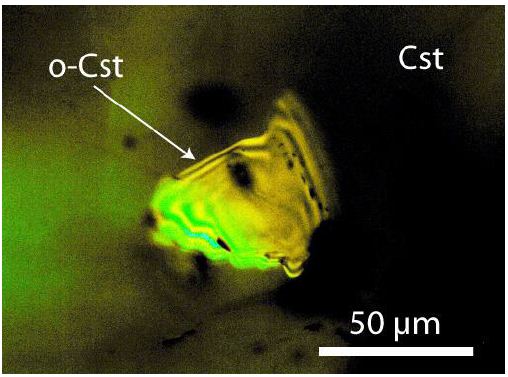

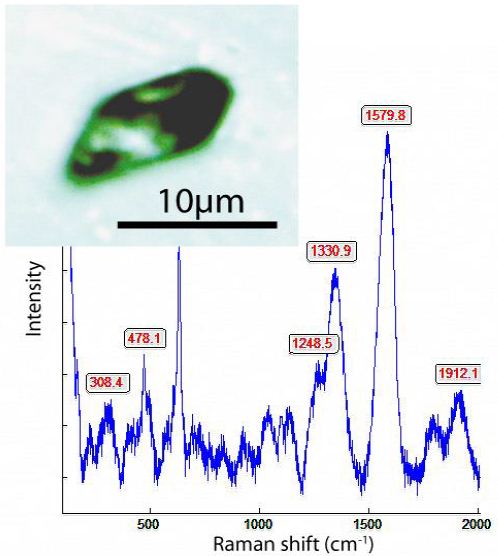

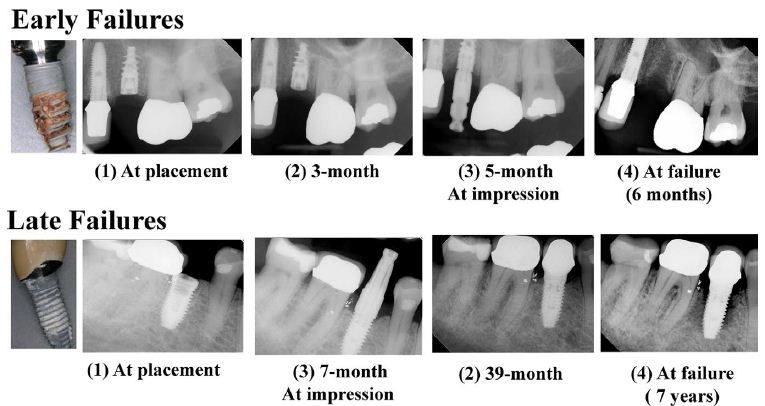

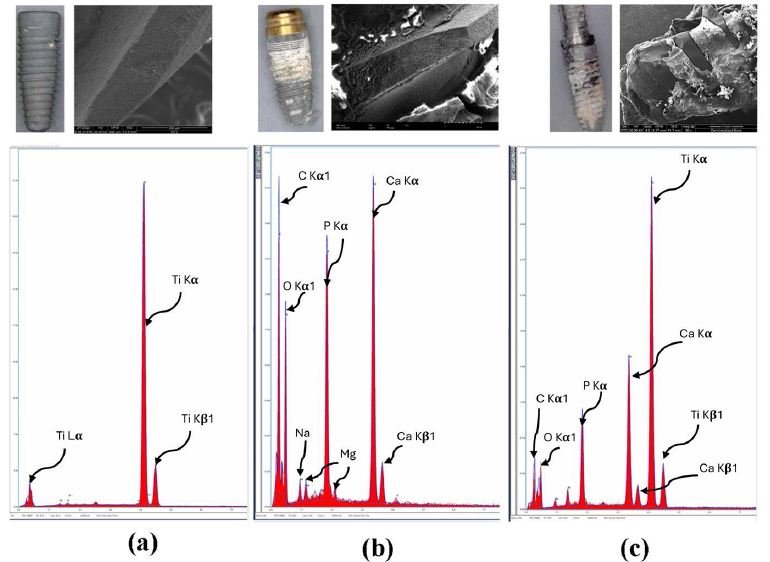

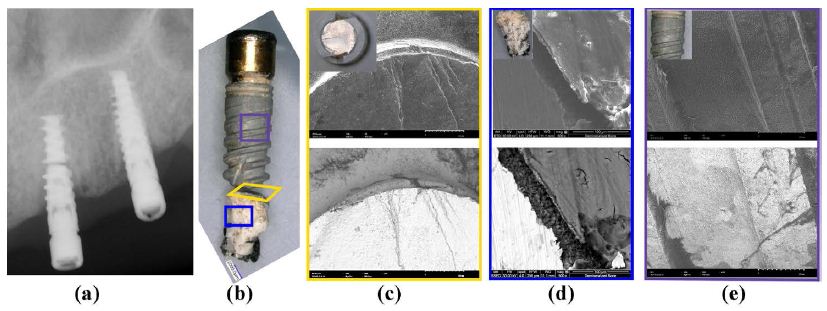

Collected implants were investigated extensively using light and electron microscopic techniques. Initially, the surface characteristics of the failed implants were assessed using the light microscope. Sample descriptors were documented as follows: 1) fracture: 4 of 53 (7.5%), 2) surface damage not attributable to surgical removal: 42 of 53 (79.2%), 3) surgical damage: 27 of 53 (50.9%), 4) presence of organic matter (soft-tissue encapsulation): 27 of 53 (50.9%), 5) sparse bone: 22 of 53 (41.5%), and 6) tartar: 14 of 53 (26.4%).

Generally, implants classified as Early (≤ 6 months; Figure 2) exhibited surfaces resembling those recently removed from packaging, with minimal damage and absence of bone integration or presence of soft tissue and tartar compared to Late failure cases (Figure 3(a) and (b)). Magnified views revealed very little attached bone tissue on the few implants where bone was present, with the majority showing no visible bone growth. Soft tissues surrounded the majority of the Early implants, indicating the absence of osseointegration.

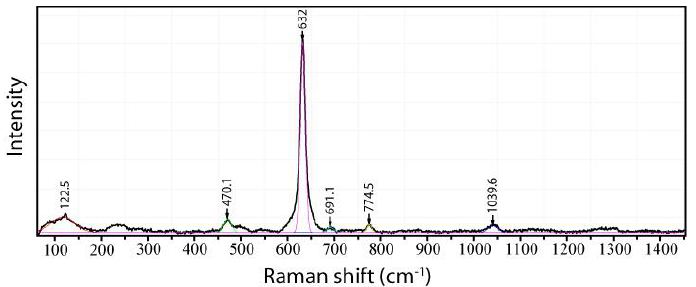

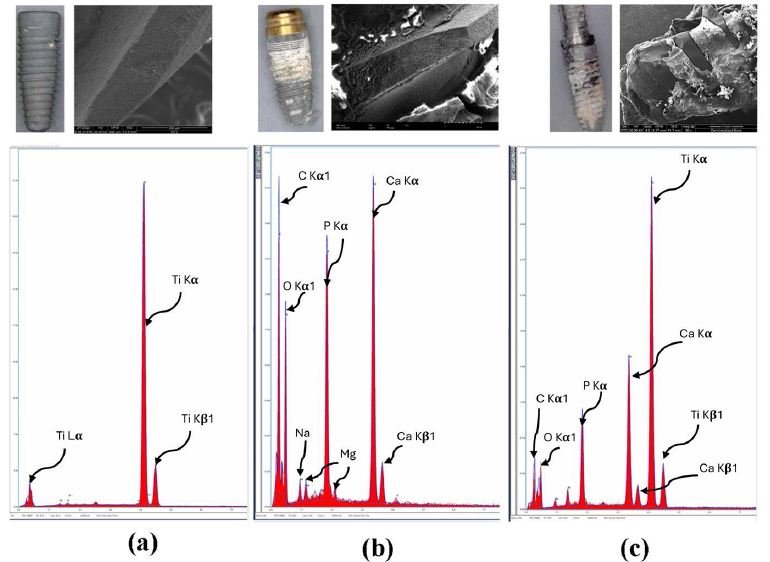

Figure 3: Energy dispersive X-ray analysis of failed dental implants. Energy-dispersive X-ray analysis: Top row – A representative set of SEM images and photographs. Bottom row – EDS spectrum analyses of the implant with no bone attachment (a) tartar (b), and bone (c).

In contrast, instances of Late implant failure revealed progressive resorption of bone radiographically (Figures 2 and 4), often accompanied by some residual bone tissue around the implant apex during explantation (Figure 3(c)).

The scanning electron micrographs of implants were consistent with the radiographic findings, clinical presentation, and notes. Figure 3 shows EDS spectra and light micrographs obtained from an implant with no direct bone contact (Figure 3(a)), an implant with tartar (Figure 3(b)), and an implant with bone present on its surface (Figure 3(c)).

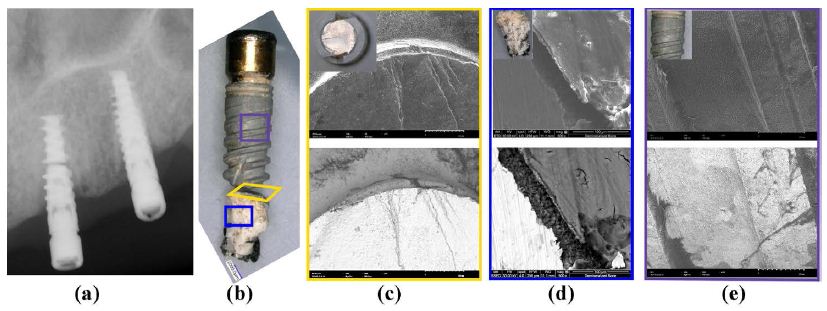

Scanning electron microscopy (SEM) analyses were conducted on several samples to understand other mechanisms of failure. In one case, the implant failure occurred due to a fracture, and its description is given below.

Case Description

The fractured implant was a 3.5x13mm Nobel implant at site #10 (maxilla), which had retained a locator overdenture for six years. Clinical notes revealed that the patient presented with severe pain and peri-implant purulence. The patient admitted to functioning on the implant without the denture in place before the fracture. The coronal 2/3 of the implant was mobile, encapsulated with soft tissue, and easily extracted with forceps. The apical 1/3 of the implant was osseointegrated and removed surgically with a high-speed handpiece. SEM photomicrographs validated the finding (Figure 5). The broken cross-sectional surface showed signs of shear fractures (Figure 5(c)). The presence of bone tissue within the apical portion was confirmed using BSE imaging (Figure 5(d)), while only soft tissue was present at the coronal portion of the implant (Figure 5(e)). EDS of the coronal (soft tissue encapsulated) aspect showed mainly Titanium without evidence of the elements of bone, while the apical portion surrounded by adhered bone tissue showed high amounts of Calcium, Titanium, and Phosphorus, with trace amounts of Sodium and Aluminum.

Figure 4: Radiographic images illustrating bone loss progression in Late Dental Implant Failures. A set of radiographic images showing the progression of bone loss in a Late Failure case, where one implant was lost (where the implant was placed in tooth #13 (Biomet 3i Nanotite 4×13)). The images demonstrate the progressive bone loss selectively with the implant placed at #13 after losing natural tooth #15. Implants at sites #12 and #14 are also impacted by #13’s peri-implant bone loss.

Figure 5: Image characterization of a Nobel 3.5x13mm implant failure. (a) A radiograph showing the fractured Nobel 3.5x13mm implant at site #10, present for 6 years. A combination of horizontal bone loss from the implant platform and a radiolucent halo with an osseointegrated apical 1/3 is noted. (b) A macroscopic view of the dental implant. (c) An axial view of the sheared implant surface under SEM. (d) The SEM image shows the presence of bone on the apical 1/3 of the osseointegrated implant remnant. (e) The SEM image of the coronal 1/3 of the implant surface, devoid of bone, where soft tissue encapsulation had occurred. Inset images in (c), (d) and (e) are photographic images of the respective implant surfaces. The top row of (c), (d), and (e) shows the surface in secondary electron mode, and the bottom row shows it in backscattering mode.

Statistical Analyses

Among the 45 Utah veterans with at least one DIF between 2019 and 2021, 31 experienced Late DIFs (occurring more than six months after implant placement), while 14 experienced Early DIFs (within six months of implant placement). Table 2 outlines the demographic and patient characteristics, failure details, and comorbid conditions for both Late and Early DIF cases. The Late DIF group exhibited a mean follow-up time of 6.6 years (median: 5.8; IQR: 2.0-9.8 years), whereas the Early DIF group had a mean follow-up time of 0.3 years (median: 0.3; IQR: 0.1-0.3 years). The average age at implant placement was 60.5 and 65.0 years, while the mean age at implant removal was 67.1 and 65.3 years for the Late and Early DIF cohorts, respectively.

Table 2: Veteran dental implant failure characteristics

| | Total N=45 | Failure after 6 months N=31 | Failure before 6 months N=14 | p-value |
|---|---|---|---|---|
| Sex (Male) | 43 (95.6%) | 30 (96.8%) | 13 (92.9%) | 0.53 |
| Implant Characteristics: | | | | |
| Follow-up time: | | | | |
| Average (Years (SD)) | 4.6 (5.3) | 6.6 (5.3) | 0.3 (0.2) | <0.001 |
| Median (Years (IQR)) | 2.4 (0.4-7.8) | 5.8 (2.0-9.8) | 0.3 (0.1-0.3) | <0.001 |
| Age at implant removal: | | | | |
| Average (Age (SD)) | 66.5 (9.8) | 67.1 (8.9) | 65.3 (11.9) | 0.58 |
| Age >70 years (count (%)) | 24 (53.3%) | 17 (54.8%) | 7 (50.0%) | 0.76 |
| Age at implant placement: | | | | |
| Average (Age (SD)) | 61.9 (10.5) | 60.5 (9.6) | 65.0 (11.9) | 0.18 |
| Age >70 years (count (%)) | 13 (28.9%) | 6 (19.4%) | 7 (50.0%) | 0.036 |
| Failure Characteristics: | | | | |
| Failure type | | | | 0.001 |
| Previously osseointegrated implant | 31 (68.9%) | 26 (83.9%) | 5 (35.7%) | |
| Never integrated to begin with | 14 (31.1%) | 5 (16.1%) | 9 (64.3%) | |
| Type of tooth replacement | | | | 0.18 |
| Complete dentures | 16 (35.6%) | 13 (41.9%) | 3 (21.4%) | |
| Partially edentulous | 29 (64.4%) | 18 (58.1%) | 11 (78.6%) | |
| Periodontal health | | | | 0.33 |
| Peri-implantitis | 11 (24.4%) | 9 (29.0%) | 2 (14.3%) | |
| Past periodontitis diagnosis | 26 (57.8%) | 18 (58.1%) | 8 (57.1%) | |
| No existing periodontitis | 8 (17.8%) | 4 (12.9%) | 4 (28.6%) | |
| Bone loss | 35 (77.8%) | 27 (87.1%) | 8 (57.1%) | 0.025 |
| Infection | 9 (20.0%) | 4 (12.9%) | 5 (35.7%) | 0.077 |
| Plaque | 44 (97.8%) | 30 (96.8%) | 14 (100.0%) | 1.00 |
| Comorbidities: | | | | |
| Xerostomia | 42 (93.3%) | 28 (90.3%) | 14 (100.0%) | 0.54 |
| Diabetes | 19 (42.2%) | 13 (41.9%) | 6 (42.9%) | 0.32 |
| Thyroid disorder | 11 (24.4%) | 7 (22.6%) | 4 (28.6%) | 0.67 |
| Hyperlipidemia | 20 (44.4%) | 15 (48.4%) | 5 (35.7%) | 0.43 |
| PTSD | 20 (44.4%) | 15 (48.4%) | 5 (35.7%) | 0.43 |
| Labs: | | | | |
| Hemoglobin A1C | | | | 0.33 |
| NA | 20 (44.4%) | 16 (51.6%) | 4 (28.6%) | |
| 5-6.9 | 16 (35.6%) | 10 (32.3%) | 6 (42.9%) | |
| >=7 | 9 (20.0%) | 5 (16.1%) | 4 (28.6%) | |
| Social History: | | | | |
| Smoking | 22 (48.9%) | 18 (58.1%) | 4 (28.6%) | 0.067 |
| Alcohol | 14 (31.1%) | 10 (32.3%) | 4 (28.6%) | 0.80 |
| Opioid | 18 (40.0%) | 13 (41.9%) | 5 (35.7%) | 0.69 |

Patient Demographics, showing dental failure types and clinical characteristics of Utah veterans who experienced at least one dental implant failure. Early Failures = 0 to 6 months; Late Failures = >6.0 months; N/A: Not Available, i.e., no diabetes; PTSD: Post Traumatic Stress Disorder; SD: Standard Deviation; IQR: Inter-Quartile Range.

Table 2 further indicates that the study cohorts were predominantly male (96.8% and 92.9% for Late and Early DIF cohorts, respectively). In the Late DIF cohort, 83.9% of failures occurred with previously osseointegrated implants, while infections accounted for only 12.9% of cases. Conversely, in the Early DIF cohort, the majority of failures (64.3%) were due to lack of osseointegration. Among the Early DIF cases, failures were prevalent in partially edentulous patients (78.6%), with associated factors including bone loss (57.1%) and infection (35.7%). Notably, all 14 cases of Early DIF (100%) exhibited a buildup of plaque. Overall, bone loss was significantly more frequent in the Late DIF cohort (87.1%) than in the Early DIF cohort (57.1%; p=0.025).
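As an illustration of the group comparisons summarized in Table 2, the sketch below applies a Pearson chi-square test (without continuity correction) to the patient-level counts for bone loss and infection. It is an assumption that this is the variant behind those two tabulated p-values; the Methods state only that t-tests, Chi-square tests, or Fisher's exact tests were used as appropriate. The function name is hypothetical and only the Python standard library is used.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p_value) for 1 degree of freedom, with no
    continuity correction applied.
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("every row and column total must be non-zero")
    stat = n * (a * d - b * c) ** 2 / denom
    # For 1 df, P(chi2 > x) equals P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


if __name__ == "__main__":
    # Rows: Late (n=31), Early (n=14); columns: factor present, factor absent.
    for label, table in {
        "Bone loss": (27, 4, 8, 6),
        "Infection": (4, 27, 5, 9),
    }.items():
        stat, p = chi_square_2x2(*table)
        print(f"{label}: chi2={stat:.2f}, p={p:.3f}")
```

With these counts the test gives p-values close to the 0.025 and 0.077 reported in Table 2. With expected cell counts this small, Fisher's exact test is often preferred and can give noticeably different values.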

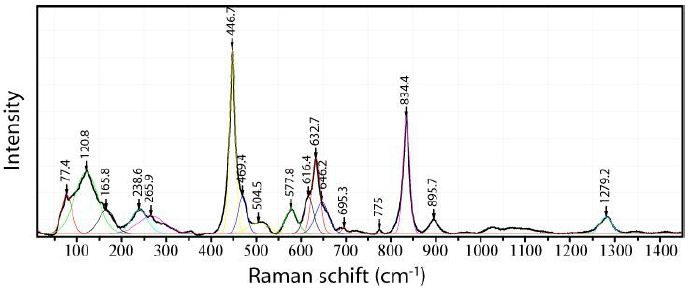

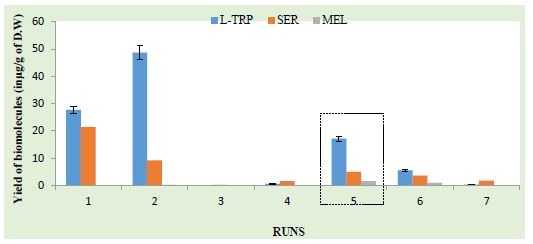

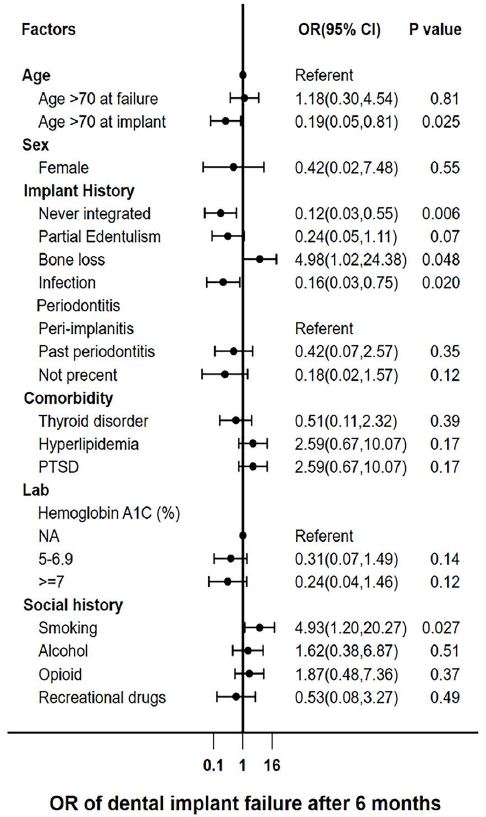

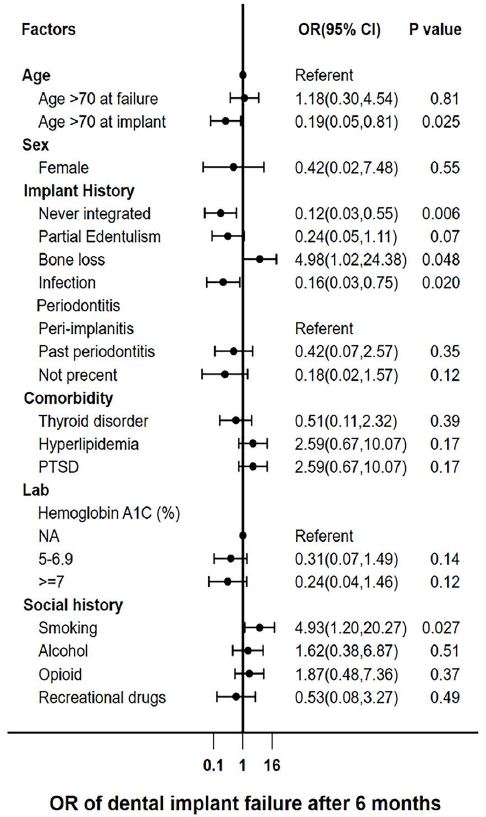

Univariable logistic models were employed to calculate the odds ratios (ORs) for Late relative to Early failure (Figure 6). Initial univariable analyses identified statistically significant associations with age over 70 years at the time of implant placement (OR 0.19, 95% CI [0.05,0.81], p=0.025), failure to osseointegrate (OR 0.12, 95% CI [0.03,0.55], p=0.006), bone loss (OR 4.98, 95% CI [1.02,24.38], p=0.048), infection (OR 0.16, 95% CI [0.03,0.75], p=0.020), and smoking (OR 4.93, 95% CI [1.20,20.27], p=0.027). Notably, both bone loss and smoking were associated with approximately 5-fold higher odds of Late rather than Early failure.

Multivariable logistic regression analyses were then conducted, adjusting for significant risk factors outlined in Figure 6. The outcomes are presented in Table 3. The findings indicate a noteworthy association between progressive bone loss and Late DIF (OR 7.15, 95% CI [1.08,47.17]; p=0.041). Notably, age >70 years at implantation (OR 0.11, 95% CI [0.02,0.65]; p=0.02) and infection (OR 0.09, 95% CI [0.01,0.78]; p=0.029) were associated with reduced odds of Late DIF relative to Early failure. Smoking lost its significance when adjusted for confounding variables.
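To make the odds ratio calculation concrete, the sketch below computes a crude (unadjusted) odds ratio with a Wald 95% confidence interval from the patient-level bone loss counts in Table 2. This is not the model used in the study: the reported ORs come from implant-level logistic regression with clustering on patient ID, so the crude estimate is expected to be similar but not identical. The function name is hypothetical.

```python
import math


def crude_odds_ratio(late_with, late_without, early_with, early_without, z=1.96):
    """Crude odds ratio for Late vs Early failure with a Wald confidence interval.

    The interval is built on the log-odds scale using the standard error
    sqrt(1/a + 1/b + 1/c + 1/d); z=1.96 gives an approximate 95% interval.
    """
    cells = (late_with, late_without, early_with, early_without)
    if any(count <= 0 for count in cells):
        raise ValueError("all four cell counts must be positive")
    odds_ratio = (late_with * early_without) / (late_without * early_with)
    se_log_or = math.sqrt(sum(1.0 / count for count in cells))
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


if __name__ == "__main__":
    # Bone loss (Table 2): Late 27 of 31, Early 8 of 14.
    or_, lo, hi = crude_odds_ratio(27, 4, 8, 6)
    print(f"Crude OR {or_:.2f} (95% CI {lo:.2f}, {hi:.2f})")
```

For bone loss the crude estimate is 27×6 / (4×8) ≈ 5.06, in the same range as the clustered univariable estimate of 4.98. The wide interval reflects the small cell counts.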

Figure 6: Odds Ratios for the univariable logistic regression model for Veteran dental implant failures. A forest plot showing Odds Ratios (OR) for the Univariable logistic model. PTSD – Post Traumatic Stress Disorder.

Table 3: Dental Implant Failures – Multivariate model

| Failure after 6 months | OR (95% CI) | P-value |
|---|---|---|
| Age at implantation (>70 years) | 0.11 (0.02,0.65) | 0.015 |
| Infection | 0.09 (0.01,0.78) | 0.029 |
| Progressive bone loss | 7.15 (1.08,47.17) | 0.041 |
| Smoking | 1.65 (0.30,9.03) | 0.057 |

Adjusted odds ratios (OR) for Late DIF among Utah veterans.
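Because Table 3 uses Late DIF as the outcome and Early DIF as the referent, an OR below 1 indicates a factor that is more strongly associated with Early failure. The sketch below re-expresses such an estimate with Early failure as the outcome by taking reciprocals. The function name is hypothetical, and values derived from the rounded published figures are approximate.

```python
def invert_odds_ratio(odds_ratio, ci_low, ci_high):
    """Swap outcome and referent group: OR -> 1/OR, with the CI bounds reversed."""
    if min(odds_ratio, ci_low, ci_high) <= 0:
        raise ValueError("odds ratios and confidence limits must be positive")
    return 1.0 / odds_ratio, 1.0 / ci_high, 1.0 / ci_low


if __name__ == "__main__":
    # Values taken from Table 3 (Late vs Early).
    for label, estimate in {
        "Infection": (0.09, 0.01, 0.78),
        "Age >70 years at implantation": (0.11, 0.02, 0.65),
    }.items():
        or_, lo, hi = invert_odds_ratio(*estimate)
        print(f"{label}: Early vs Late OR {or_:.1f} (95% CI {lo:.1f}, {hi:.1f})")
```

Read this way, infection corresponds to roughly 11-fold higher odds of Early rather than Late failure, and age >70 years at implantation to roughly 9-fold higher odds, each with a very wide interval.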

Discussion

This study posed the hypothesis that Early and Late DIFs may stem from different underlying causes. Our multivariate statistical analysis supported this hypothesis, demonstrating a strong association between progressive bone loss and Late DIFs (OR 7.15, 95% CI 1.08,47.17; p=0.041) compared to the referent group, Early DIFs. Conversely, our data supported that advancing age (p=0.015) and infection (p=0.029) were relative risk factors for Early failures. Although smoking is a known risk factor for DIFs, showing significance for Late failure in the univariate model, its significance (p=0.057) diminished when adjusted for other risk factors in the multivariate model. Despite the limited sample size (n=45), our study provided valuable insights into the distinct processes underlying both Early and Late implant failures, thereby justifying the rationale for conducting a further chart review study for validation, which is currently underway.

Radiographically, the pattern of bone loss observed in Early DIF cases often manifested as radiolucent peri-implant lines or halo patterns, whereas Late DIF cases commonly exhibited higher percentages of bone loss in vertical/cupping and horizontal patterns (Figures 1, 2, and 4). A common feature of both Early and Late DIF was the lack of bone on the implant surface microscopically (Figure 3(a)). With few exceptions, radiographic bone loss in Early DIFs was minimal and located near the alveolar crest (Figure 2). Some samples showed a radiolucent line/halo (Figure 1(c)), while only one in the Early group showed catastrophic bone loss affecting adjacent teeth. Functional osseointegration likely never occurred in the Early group [12,13].

Though the radiographic approximation of bone and implant is not regarded as absolute confirmation of osseointegration, the peri-implant radiolucencies, confirmed with clinical history, of these cases were confirmation of the absence of bone-to-implant contact and DIF [14]. All 14 (100%) Early failure cases had a high plaque score, indicating an active microbiome and poor compliance with home care. Additionally, EDS data confirmed the presence of the elemental composition for tartar (calculus) on several samples [15]. The overgrowth of pathogenic bacteria and eventual seeding of the device with virulent organisms is a likely explanation for the association of Early DIFs with infection. Yaghmoor et al. have shown that preoperative antimicrobial rinses reduce the oral cavity’s bacterial load before implant placement, thereby reducing post-operative complications [16].

Furthermore, Kaminski et al. suggested that old age and systemic disease were factors that reduced the bacterial load required to cause severe maxillofacial infections [17]. Such factors may explain why SL VA data shows the elderly are significantly more likely to experience Early DIF. This observation is consistent with the diminished ability to heal and combat infection that occurs with advanced age. The elderly are prone to developing multimorbidity and are colonized with increased anaerobic bacteria and fungi [17]. In short, older age predisposes patients to infection because of exposure to more pathogenic microbes and declining health [17].

An additional factor associated with Early DIF is surgical insufficiency [18]. Although not analyzed in this study, operator-dependent factors and surgical concerns that could have contributed to peri-implant crestal bone loss include incorrect 3-D placement (i.e., angulation), insufficient bone or soft tissue, torque-induced bone compression, and inadequate osteotomy preparation, to name a few. Unsurprisingly, experienced surgeons (≥50 implants placed per year) have fewer failures than those with less experience (<50 implants placed per year) or trainees [19]. Such considerations highlight the value of appropriate surgical training and mentorship [20].

Patients in the Late DIF group were generally younger (average 60.5 years) at the time of placement, and progressive bone loss was evident years later (Figures 2 and 4). Since acute infection (purulence, pain, swelling) was noted in only 12.9% of the Late DIFs in this series, it is important to consider what other factors could have contributed to progressive osteolysis and eventual peri-implant bone loss [21]. Albrektsson’s standard of 1-1.5 mm of bone loss in the first year and 0.2 mm annually highlights the difficulty of maintaining an implant long-term. An expectation of bone loss exists within the definition of a clinically successful implant.

Based on clinical history, most Late failures evaluated presented with signs consistent with Renvert’s definition of peri-implant disease, which is defined as bleeding on probing in addition to radiographic bone loss of 0.5mm to 5mm following initial healing [22]. Evidence suggests progressive bone loss is related to low-grade microbial insult, subsequent inflammatory response, genetics, and systemic health. Clinical, SEM and light microscopic data demonstrated that tartar was present in only 14 (26.4%) Late DIF cases. Patient compliance with hygiene practices, general health recommendations (e.g. smoking cessation), and regular dental cleanings have a significant impact on the patient’s microbiome and peri-implant health [23,24]. Sufficient healthy, keratinized soft tissue is as important as osseointegration for long-term implant success [25].

Implant longevity is further affected by occlusal forces. Although mechanics played an obvious role in the failure of those implants that were lost due to fracture (Figure 5), they may also contribute to failure more insidiously [26]. Figure 4 shows a series of radiographs in which implants at sites 12, 13, and 14 were stable for many years. The natural tooth at site #15 was lost, and significant bone loss was observed on adjacent implants shortly thereafter. Though several factors could have influenced such bone loss, it is possible the change in occlusion forces after the loss of natural tooth #15 resulted in mechanical overload for which the alveolar bone near implant #13 could not compensate [27]. Harold M. Frost updated Wolff’s law—the Utah paradigm of skeletal physiology—and described the biomechanical relationship between the stress placed on the functional unit of bone and the health of the load-bearing tissue [28]. As with the atrophy and hypertrophy witnessed in the disuse and use of muscle, bone requires appropriate mechanical loading to maintain its volume. When viewed through the lens of Frost’s “Utah paradigm,” the alteration of occlusal forces transferred to the bone with a dental implant versus the teeth and periodontal ligament could explain the significant alveolar bone loss that occurs over time [29]. Naghavi et al. described stress shielding as the main cause of aseptic loosening and bone loss in long-term orthopedic implants [30]. Another reason for progressive bone loss could be linked to osteolysis if wear debris is created as the result of micro-motion [30]. Thread design, platform switching, sequence and time of loading, and implant surface (e.g., machined collars, roughened surfaces) have been shown to play a role in stresses transferred to bone and impact osseointegration [31-34]. These factors need further investigation and could contribute to long-term implant success.

Another significant finding within the univariable analysis of Late failure cases was the association with smoking. However, when adjusted, it lost significance in the multivariable model. It is worth noting that both are failed groups. Smoking is a well-known risk factor for all DIFs and is supported by the existing literature [35]. The meta-analysis by Mustapha et al. suggests exposure to the toxins in cigarette smoke negatively impacts long-term dental implant survival [36]. Mustapha further stated that smoking inhibits osteogenesis and angiogenesis, diminishes bone mineralization and trabeculation, reduces intestinal calcium absorption, and increases free radical damage. Exposure to smoke is further associated with higher bleeding index, mucosal inflammation, and deeper peri-implant probing depths when compared to non-smokers [36].

Serious health conditions were present in 39 of the 45 patients, and 37 of those patients (75.5%) had been diagnosed with ≥4 serious health conditions, while the same number (75.5%) were also taking ≥4 prescription medications. Both groups shared challenges with substance abuse, polypharmacy, chronic opioid use, mental health, and multiple co-morbid conditions (e.g., coronary artery disease, kidney disease, diabetes, depression, PTSD). Only 12 (24.5%) of the 45 reported no use of alcohol, tobacco, opioids, or illicit drugs. Patients who reported substance use generally used more than one substance (alcohol 27/45, tobacco 16/45, opioids 20/45, illicit drugs 6/45). Further investigation of the impact of these environmental and health factors on dental implant success is warranted and supported by the literature [37-39].

Conclusion

In summary, our findings indicate that Early and Late DIFs are associated with distinct risk factors: namely, a lack of osseointegration in Early failures and progressive bone resorption in Late failures. Given the short mean time to failure for Early DIF (0.3 years; IQR: 0.1 – 0.3 years) compared with Late DIF (5.8 years; IQR: 2.0 – 9.8 years), it appears that Early failures may be preventable through interventions aimed at enhancing healing and osseointegration, such as surgeon training and stringent patient selection criteria. Additionally, while factors that influence Early failure, such as adherence to drilling protocols, tissue management, and antimicrobial rinses, can be effectively managed by experienced providers, trainees may need extensive guidance.

The adjusted multivariable model revealed a roughly seven-fold increase in the relative odds of progressive bone loss in Late failures, highlighting the importance of managing risk factors related to progressive bone loss to improve implant longevity. While many underlying causes of progressive bone loss remain elusive and warrant further research, the authors speculate that mismatches between bone resorption and deposition, compounded by local peri-implant tissue infection or inflammation, may play a pivotal role in failures. Age-related changes, medication use, and poor oral health are potential contributors to implant failure that lie beyond the control of the provider. Nonetheless, proactive management of these factors could potentially mitigate their impact on overall implant outcomes.
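
As a consistency check on the roughly seven-fold estimate, the short Python sketch below back-calculates the log-odds coefficient, its standard error, and a two-sided p-value from the odds ratio and confidence interval reported in the Abstract (OR 7.15, 95% CI [1.08, 47.17]). It assumes a Wald-type interval that is symmetric on the log-odds scale, which the paper does not state explicitly. The function name `wald_summary` is illustrative and is not part of the study's analysis code.

```python
import math


def wald_summary(odds_ratio, ci_low, ci_high, z_crit=1.96):
    """Back-calculate log-odds statistics from an OR and its 95% CI.

    Assumption (not stated in the paper): the interval is a Wald interval,
    i.e. exp(beta +/- z_crit * se) on the log-odds scale.
    """
    beta = math.log(odds_ratio)  # log-odds coefficient
    # Width of the interval on the log scale equals 2 * z_crit * se.
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = beta / se  # Wald z statistic
    # Two-sided tail probability of a standard normal variable.
    p = math.erfc(abs(z) / math.sqrt(2))
    return beta, se, z, p


# Values reported in the Abstract for Late failure and progressive bone loss.
beta, se, z, p = wald_summary(7.15, 1.08, 47.17)
print(f"beta={beta:.3f}, se={se:.3f}, z={z:.2f}, p={p:.3f}")
```

Under that assumption, the recovered p-value should fall close to the reported p = 0.041. The wide interval corresponds to a large standard error on the log-odds scale, which is consistent with the small sample size noted as a limitation.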

The major limitation of this study was the small sample size. Many known confounders were not controlled (e.g., patient hygiene, general health, surgeon experience). Moreover, females were underrepresented within this veteran cohort, so this analysis may not have captured risk factors related to sex differences.

Further research is needed to fully elucidate the complex mechanisms underlying implant failure and develop more effective strategies for its prevention and management.

Conflict of Interest Statement

The authors declare no conflict of interest.

Author Contribution Statement

All authors have made significant contributions to this article. The study was conceived collaboratively by Alec Griffin and Sujee Jeyapalina; the acquisition of data was a team effort, with contributions from Alec Griffin, Sujee Jeyapalina, and Guo Wei. Guo Wei performed the data and statistical analysis. Layne Brown aided in sample collection. Data interpretation, drafting, critical revisions, and final approval of the version to be published were carried out collectively by Alec Griffin, Layne Brown, Guo Wei, Aaron Miller, Jill Shea, Mark Durham, and Sujee Jeyapalina.

Acknowledgments

We would like to thank Pooya Elahitaleghani, PhD for his contribution in generating the scanning electron microscope images, along with the providers and staff at the VA SLC Dental Clinic for their help in sample collection for this study. No funding was received for this study. We would like to thank Nobel Biocare and Zimmer Biomet for supplying several new dental implants for comparison.

Summary Box

What is known:

- Current literature estimates dental implant failure rates of 5-10% within the first decade after implantation among the general population.

- Late dental implant failures initially integrate but fail over time, while early dental implant failures occur due to failure to integrate.

What the study adds:

- Late vs early dental implant failures are associated with distinct risk factors. Specifically, late failures result from progressive bone resorption and early failures from a lack of bone-to-implant integration.

References

- Manor Y, Oubaid S, Mardinger O, Chaushu G, Nissan J (2009) Characteristics of early versus late implant failure: a retrospective study. J Oral Maxillofac Surg. 67: 2649-52. [crossref]

- Miller AJ, Brown LC, Wei G, et al. (2024) Dental implant failures in Utah and US veteran cohorts. Clin Implant Dent Relat Res. [crossref]

- Raikar S, Talukdar P, Kumari S, Panda SK, Oommen VM, et al. (2017) Factors Affecting the Survival Rate of Dental Implants: A Retrospective Study. J Int Soc Prev Community Dent. 7: 351-355. [crossref]

- Setzer FC, Kim S (2014) Comparison of long-term survival of implants and endodontically treated teeth. J Dent Res. 93: 19-26. [crossref]

- Albrektsson T, Zarb G, Worthington P, Eriksson AR (1986) The long-term efficacy of currently used dental implants: a review and proposed criteria of success. Int J Oral Maxillofac Implants. 1: 11-25. [crossref]

- Linkevičius T (2019) Zero bone loss concepts. Quintessence Publishing Co, Inc, online.

- Misch CE, Perel ML, Wang HL, et al. (2008) Implant success, survival, and failure: the International Congress of Oral Implantologists (ICOI) Pisa Consensus Conference. Implant Dent. 17: 5-15. [crossref]

- Mohajerani H, Roozbayani R, Taherian S, Tabrizi R (2017) The Risk Factors in Early Failure of Dental Implants: a Retrospective Study. J Dent (Shiraz). 18: 298-303. [crossref]

- Staedt H, Rossa M, Lehmann KM, Al-Nawas B, Kammerer PW, et al. (2020) Potential risk factors for early and late dental implant failure: a retrospective clinical study on 9080 implants. Int J Implant Dent. 6: 81. [crossref]

- Pan W, Wang Q, Chen Q (2019) The cytokine network involved in the host immune response to periodontitis. Int J Oral Sci. 11: 30. [crossref]

- Hashim D, Cionca N (2020) A Comprehensive Review of Peri-implantitis Risk Factors. Current Oral Health Reports. 7: 262-273. doi: 10.1007/s40496-020-00274-2

- Jayesh RS, Dhinakarsamy V (2015) Osseointegration. J Pharm Bioallied Sci. 7: S226-9.

- Parithimarkalaignan S, Padmanabhan TV (2013) Osseointegration: an update. J Indian Prosthodont Soc. 13: 2-6. [crossref]

- Geraets W, Zhang L, Liu Y, Wismeijer D (2014) Annual bone loss and success rates of dental implants based on radiographic measurements. Dentomaxillofac Radiol. 43: 20140007. [crossref]

- Valm AM (2019) The Structure of Dental Plaque Microbial Communities in the Transition from Health to Dental Caries and Periodontal Disease. J Mol Biol. 431: 2957-2969. [crossref]

- Yaghmoor W, Ruiz-Torruella M, Ogata Y, et al. (2024) Effect of preoperative chlorhexidine, essential oil, and cetylpyridinium chloride mouthwashes on bacterial contamination during dental implant surgery: A randomized controlled clinical trial. Saudi Dent J. 36: 492-497.

- Kaminski B, Blochowiak K, Kolomanski K, Sikora M, Karwan S, et al. (2022) Oral and Maxillofacial Infections-A Bacterial and Clinical Cross-Section. J Clin Med. 11: 10. [crossref]

- Thiebot NH, Blanchet F, Dame M, Tawfik S, Mbapou et al. (2022) Implant failure rate and the prevalence of associated risk factors: a 6-year retrospective observational survey. Journal of Oral Medicine and Oral Surgery. 28: 2

- Solderer A, Al-Jazrawi A, Sahrmann P, Jung R, Attin T, et al. (2019) Removal of failed dental implants revisited: Questions and answers. Clin Exp Dent Res. 5: 712-724. [crossref]

- Entezami P, Franzblau LE, Chung KC (2012) Mentorship in surgical training: a systematic review. Hand (N Y). 7: 30-6. [crossref]

- Rokn AR, Sajedinejad N, Yousefyfakhr H, Badri S (2013) An unusual bone loss around implants. J Dent (Tehran) 10: 388-92.

- Renvert S, Persson GR, Pirih FQ, Camargo PM (2018) Peri-implant health, peri-implant mucositis, and peri-implantitis: Case definitions and diagnostic considerations. J Periodontol. 89 Suppl 1: S304-S312.

- Scarano A, Khater AGA, Gehrke SA, et al. (2023) Current Status of Peri-Implant Diseases: A Clinical Review for Evidence-Based Decision Making. J Funct Biomater. 14: 4. [crossref]

- Mombelli A, Decaillet F (2011) The characteristics of biofilms in peri-implant disease. J Clin Periodontol. 38: 203-13. [crossref]

- Linkevicius T, Apse P, Grybauskas S, Puisys A (2009) The influence of soft tissue thickness on crestal bone changes around implants: a 1-year prospective controlled clinical trial. Int J Oral Maxillofac Implants. 24: 712-9. [crossref]

- Alzahrani KM (2020) Implant Bio-mechanics for Successful Implant Therapy: A Systematic Review. J Int Soc Prev Community Dent. 10: 700-714. [crossref]

- Ferreira PW, Nogueira PJ, Nobre MAA, Guedes CM, Salvado F (2022) Impact of Mechanical Complications on Success of Dental Implant Treatments: A Case-Control Study. Eur J Dent. 16: 179-187. doi: 10.1055/s-0041-1732802 [crossref]

- Frost HM (2001) From Wolff’s law to the Utah paradigm: insights about bone physiology and its clinical applications. Anat Rec. 262: 398-419. [crossref]

- Millis DL, Levine D (2014) Canine rehabilitation and physical therapy. Second edition. Elsevier. xvi, 760 pages.

- Naghavi SA, Lin C, Sun C, et al. (2022) Stress Shielding and Bone Resorption of Press-Fit Polyether-Ether-Ketone (PEEK) Hip Prosthesis: A Sawbone Model Study. Polymers (Basel). 14: 21. [crossref]

- Manikyamba Y, Sajjan S, Raju R, Rao B, Nair KC (2017) Implant thread designs: An overview. Trends in Prosthodontics and Dental Implantology (TPDI). 8: 1&2.

- Wiskott HW, Belser UC (1999) Lack of integration of smooth titanium surfaces: a working hypothesis based on strains generated in the surrounding bone. Clin Oral Implants Res. 10: 429-44. [crossref]

- Barfeie A, Wilson J, Rees J (2015) Implant surface characteristics and their effect on osseointegration. Br Dent J. 218: E9. [crossref]

- Penarrocha-Diago MA, Flichy-Fernandez AJ, Alonso-Gonzalez R, Penarrocha-Oltra D, Balaguer-Martinez J, et al. (2013) Influence of implant neck design and implant-abutment connection type on peri-implant health. Radiological study. Clin Oral Implants Res. 24: 1192-200. [crossref]

- Kasat V, Ladda R (2012) Smoking and dental implants. J Int Soc Prev Community Dent. 2: 38-41. [crossref]

- Mustapha Z, Chrcanovic BR (2021) Smoking and Dental Implants: A Systematic Review and Meta-Analysis. Medicina (Kaunas) 58: 1. [crossref]

- Parihar AS, Madhuri S, Devanna R, Sharma G, Singh R, et al. (2020) Assessment of failure rate of dental implants in medically compromised patients. J Family Med Prim Care. 9: 883-885. [crossref]

- Chappuis V, Avila-Ortiz G, Araujo MG, Monje A (2018) Medication-related dental implant failure: Systematic review and meta-analysis. Clin Oral Implants Res. 29: 55-68. [crossref]

- Nassar P, Ouanounou A (2020) Cocaine and methamphetamine: Pharmacology and dental implications. Can J Dent Hyg. 54: 75-82.