Abstract

This paper addresses the emerging opportunity to learn how to ask better questions, and to think critically, using an AI-based tool, Idea Coach. The tool allows the user to define the topic, as well as specify the nature of the question, through an easy-to-use interface (www.BimiLeap.com). The tool permits the user to change the topic slightly and discover the changes in the questions which then emerge. Idea Coach provides sets of 15 topic questions per iteration, summarizes the themes inherent in the questions, and suggests innovations based on the questions. The paper illustrates the output of the Idea Coach for four similar phrases describing food: Healthful food; Healthy Food; Good for Health; Health Food, respectively. The output, produced in a matter of minutes, provides the user with a Socratic-type tutor to teach concepts and drive research efforts.

Introduction: Thinking Critically and the Importance of Asking Good Questions

A look through the literature of critical thinking reveals an increasing recognition of its importance, as well as alternative ways of how to achieve it [1,2]. It should not come as a surprise that educators are concerned about the seeming diminution of critical thinking [3,4]. Some of that diminution can be traced to the sheer attractiveness of the small screen, the personal phone or laptop, which can provide hours of entertainment. Some of the problem may be due to the effort to have people score well on standardized tests, a problem that the late Professor Banesh Hoffmann of Queens College recognized six decades ago in his pathbreaking book, The Tyranny of Testing, first published in the early 1960’s [5].

That was then, the past. Given today’s technology, the ability to tap into AI (artificial intelligence), the availability of information at one’s fingertips, and the ability to scan hordes of documents on the internet, what are the next steps? And can the next steps be created so that they serve the purposes of serious inquiry, e.g., social policy on the one hand, science on the other, designed for students as well as for senior users? When the next steps can be used by students, they end up generating a qualitative improvement in education.

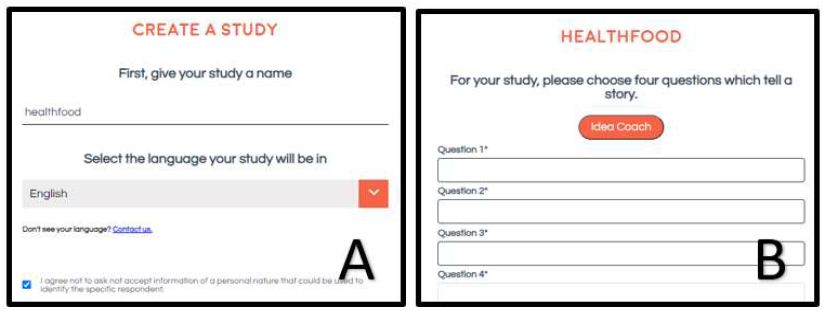

Previous papers in this ‘series’, papers appearing in various journals, have presented a systematized approach to ‘understanding’ how people think. The approach, originally called IdeaMap, then RDE (Rule Developing Experimentation), and now finally Mind Genomics, focused on creating a framework which required users to create four questions, each with four answers [6]. The set-up was to have the user create a study name, come up with the four ‘questions which tell a story’, and then for each question come up with four stand-alone answers, viz., phrases. The actual process was then to mix these stand-alone answers (called elements), present the combinations of answers (vignettes), instruct the respondent (survey participant) to rate the vignettes on a defined rating scale, one vignette at a time, and then analyze the data to link the elements to the ratings. Figure 1 shows the process.

Figure 1: The first steps in the Mind Genomics process. Panel A shows the creation of a study, including the name. Panel B shows the request for four questions which ‘tell a story.’
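The mixing of elements into vignettes, and the linking of elements to ratings, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function names, the rule of at most one answer per question with two to four elements per vignette, and the use of a simple difference of mean ratings in place of whatever statistical model the platform actually applies.

```python
import random
from statistics import mean


def make_elements(answers_by_question):
    """Flatten {question: [answers]} into coded elements such as 'A1'..'D4'."""
    elements = {}
    for q_index, answers in enumerate(answers_by_question.values()):
        letter = chr(ord("A") + q_index)
        for a_index, answer in enumerate(answers, start=1):
            elements[f"{letter}{a_index}"] = answer
    return elements


def make_vignette(elements, rng, min_size=2, max_size=4):
    """Combine elements, at most one per question (assumed rule, not from the paper)."""
    letters = sorted({code[0] for code in elements})
    size = rng.randint(min_size, min(max_size, len(letters)))
    vignette = []
    for letter in rng.sample(letters, size):
        options = [code for code in elements if code[0] == letter]
        vignette.append(rng.choice(options))
    return sorted(vignette)


def element_lift(vignettes, ratings):
    """Mean rating when an element is present minus mean rating when it is absent."""
    codes = sorted({code for vignette in vignettes for code in vignette})
    lift = {}
    for code in codes:
        present = [r for v, r in zip(vignettes, ratings) if code in v]
        absent = [r for v, r in zip(vignettes, ratings) if code not in v]
        if present and absent:
            lift[code] = mean(present) - mean(absent)
    return lift
```

A positive value from `element_lift` suggests that vignettes containing the element tended to be rated higher. This is only a rough stand-in for the element-to-rating analysis described in the text.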

This exercise, introduced thirty years ago in the early version called IdeaMap®, ended up revealing the difficulties experienced with asking good questions. The users of IdeaMap® comprised professionals at market research companies scattered around the world. These users were familiar with surveys and had no problems asking questions, but needed ‘coaching’ on creating questions which ‘told a story’.

As IdeaMap® grew, it became increasingly obvious that many users wanted to create a version of surveys. Users were comfortable with surveys. The requirement for a survey was to identify the different key areas of a topic and instruct the survey-taker to rate each topic using a set of questions prepared by the user. Expertise was demonstrated in the topics that the user selected, the instructions to the survey-taker, and occasionally in the analysis. The user who discovered a new subtopic, e.g., one corresponding to a trend, could make an impression simply by surveying that new topic. Others prided themselves on the ability to run surveys which were demonstrably lower in bias or even bias-free, or at least pontificated on the need to reduce bias. Still others were able to show different types of scales, and oftentimes novel types of analyses of the results [7]. What was missing, however, was a deeper way to think about the problem, one which provided a new level of understanding.

The Contribution, or Rather the ‘Nudge’ Generated by the User Experience in Mind Genomics

The first task of the researcher after setting up the study is to create the four questions (Figure 1). It is at this step that many researchers become dismayed, distressed, and demotivated. Our education teaches us to answer questions. Standardized scores are based on performance, viz., right versus wrong. There is the implicit bias that progress is measured by the number of right answers. The motto ‘no child left behind’ often points to the implicit success of children on these standardized tests. There is no similar statement such as ‘all children will think critically.’ And, most likely, were that to be a motto, it would be laughed at, and perhaps prosecuted because it points to the inequality of point. We don’t think of teaching children to think critically as being a major criterion for advancing them in their education.

The introduction of Mind Genomics into the world of research and then into the world of education by working with young children revealed the very simplicity of teaching critical thinking, albeit in a way which was experiential and adult-oriented [6]. Early work with very bright students showed that a few of them could understand how to provide ideas for Mind Genomics, and with coaching could even develop new ideas such as the reasons for WWI or what it was like to be a teenager in the days of ancient Greece. These efforts, difficult as they were, revealed that with coaching and with a motivated young person one could get the person to think in terms of a sequence of topics which related a story.

It was clear from a variety of studies that there was a connection between the ability to use the Mind Genomics platform and the ability to think. Those who were able to come up with a set of questions and then four answers to each question seemed to be quite smart. There were also students who were known to be ‘smart’ in their everyday work, but who were experiencing one or another difficulty while trying to come up with ideas. These frustrated respondents did not push forward with the study. Indeed, many of the putative users of Mind Genomics gave up in frustration, simply abandoning the process. Often they requested that the Mind Genomics process should provide them with the four questions. The answers were never an issue with these individuals, only the questions.

The response to the request for questions ended up being filled by the widespread introduction of affordable and usable AI, in the form of ChatGPT [8]. The inspiration came from the realization that, were the questions to be presented to ChatGPT in a standardized form, with the user able to add individuating verbiage, it might well be possible to create a ‘tutor’ which could help the user. And so was born Idea Coach, in the early months of 2023, shortly after the widely heralded introduction of ChatGPT to what turned out to be a wildly receptive audience of users.

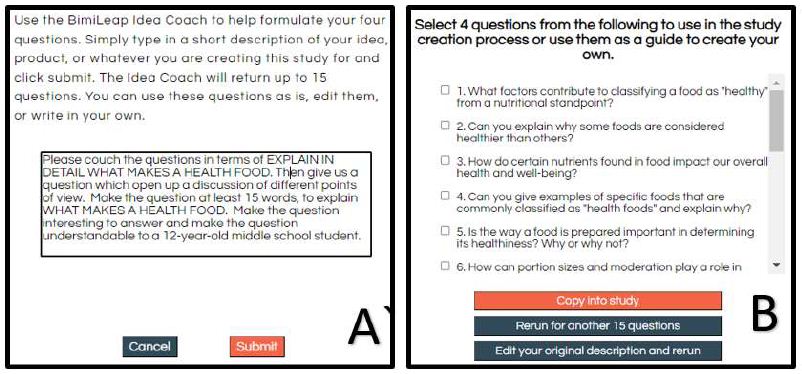

The early approach of Idea Coach was to allow the user to type in the request for questions, at which point the Idea Coach would return with 30 questions. The sheer volume of putative questions was soon overwhelming, an embarrassment of riches. It was impossible for the user to read the questions and make a selection. Eventually the system was fixed to generate 15 questions rather than 30, to record the questions for later presentation to the user, to allow the user to select questions and rerun the effort, or even to edit the questions. Figure 2 shows an example of the request to the Idea Coach, and the return of a set of questions, along with the chance to select 1-4 questions, or to rerun, or to edit the request and rerun.

Figure 2: Screen shots showing the location where the user types in the ‘squib’, viz., the prompt (Panel A), and some questions which emerge from an iteration using that prompt.
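The request-and-return cycle described above can be sketched in a few lines. The sketch is hypothetical throughout: the wording of the standardized request, the function names, and the assumption that the AI replies with a numbered list are all illustrative, and no actual call to an AI service is shown.

```python
import re


def build_prompt(squib, n_questions=15):
    """Wrap the user's squib in a fixed request (template wording is hypothetical)."""
    squib = " ".join(squib.split())  # collapse stray whitespace and line breaks
    return (
        f"Topic: {squib}\n"
        f"Please provide {n_questions} distinct questions about this topic, "
        f"numbered 1 to {n_questions}, one per line."
    )


def parse_questions(reply):
    """Extract the text of lines shaped like '3. question' or '3) question'."""
    questions = []
    for line in reply.splitlines():
        match = re.match(r"^\s*\d+[.)]\s+(.*\S)\s*$", line)
        if match:
            questions.append(match.group(1))
    return questions


def select_questions(questions, picks):
    """Return the one to four questions the user picked, by 1-based position."""
    if not 1 <= len(picks) <= 4:
        raise ValueError("select between one and four questions")
    return [questions[i - 1] for i in picks]
```

Rerunning an iteration would amount to sending the same prompt again, and editing the squib would amount to calling `build_prompt` with the revised text.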

The ultimate use of the Idea Coach turned out to be a massive simplification in the use of the Mind Genomics program, BimiLeap (Big Mind Learning App), along with the welcome acceptance by school-age students who found it easy, and ‘fun’ [9-11]. The effort to create the Idea Coach, along with mentoring the young students, made it possible for them to do studies, at first guided, and then later on their own. Later on, the Idea Coach would end up providing answers to the questions, with the text provided to the AI in the form of the actual question.

Moving Beyond the Research Process into What Idea Coach Actually Can Contribute

The initial experiences with Idea Coach were confined to setting up the raw material for the Mind Genomics process, namely the specification of the four questions, and then for each question the specification of the four answers. The earliest inkling of the power of Idea Coach to help critical thinking emerged from meetings with two young researchers, both of school age. It was during the effort to set up studies that they asked to run the Idea Coach several times. It was watching their faces which revealed the emerging opportunity. Rather than focusing on the ‘task’, these young school children seemed to enjoy reading the answers, at least for two, sometimes three iterations. They would read the answers and then press re-run, just to see what changed and what new ideas emerged. It was then that the notion of using Idea Coach as a Socratic tutor emerged, a tutor which would create a book of questions about a topic.

Not every user was interested in using the Idea Coach to provide sets of questions for a topic, but there were some. Those who were interested ended up going through the question development process about two or three times, and then moved on, either to set up the study, or in cases of demonstration to other topics outside of the actual experience.

Over time, the Idea Coach was expanded twice, first to give answers as well as to suggest questions, and then to provide an Excel book of all efforts to create questions, and to create answers, each effort generating a separate tab in the Excel book. After the questions and answers had been registered in the study, and even before the user continued with the remaining parts of the set-up (viz., self-profiling classification questions, respondent orientation, scale for the evaluation), the Idea Coach produced a complete ‘idea book.’ The idea book comprised one page for each iteration, whether question or answer, and then a series of AI-generated summarizations, listed below.

- Actual set of 15 questions

- Key Ideas

- Themes

- Perspectives

- What is missing

- Alternative Viewpoints

- Interested Audiences

- Opposing Audiences

- Innovations

The objective of the summarization was to make Idea Coach into a real Socratic tutor which asked questions, but also a provider of different points of view extractable from the set of 15 questions or 15 answers on a single Excel tab. That is, the Idea Coach evolved into a teaching tool, the basic goal to help the user come up with questions, but the unintended consequence being the creation of a system to educate the user on a topic in a way that could not be easily done otherwise.
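The organization of the idea book, one page per iteration with the summarizations attached, can be pictured as a simple data structure. The sketch below is an assumption for illustration only: the class names, the tab-naming scheme, and the empty placeholders for the summaries are hypothetical, and the sketch does not write an actual Excel file.

```python
from dataclasses import dataclass, field

# Summarization sections named in the list above.
SUMMARY_SECTIONS = [
    "Key Ideas", "Themes", "Perspectives", "What is missing",
    "Alternative Viewpoints", "Interested Audiences",
    "Opposing Audiences", "Innovations",
]


@dataclass
class IdeaBookPage:
    kind: str        # "questions" or "answers"
    squib: str       # the prompt text that produced this iteration
    items: list      # the 15 questions or 15 answers returned
    summaries: dict = field(default_factory=dict)


@dataclass
class IdeaBook:
    pages: list = field(default_factory=list)

    def add_iteration(self, kind, squib, items):
        """Record one iteration as its own page, with empty summary slots."""
        if kind not in ("questions", "answers"):
            raise ValueError("kind must be 'questions' or 'answers'")
        summaries = {name: "" for name in SUMMARY_SECTIONS}
        page = IdeaBookPage(kind, squib, list(items), summaries)
        self.pages.append(page)
        return page

    def tab_names(self):
        """Hypothetical tab labels, one per iteration, in order of creation."""
        return [f"{i:02d}_{p.kind}" for i, p in enumerate(self.pages, start=1)]
```

Keeping each iteration on its own page mirrors the one-tab-per-effort layout described in the text, so that repeated or overlapping questions across iterations remain visible to the user.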

The ‘time dimension’ of the process is worth noting before the paper shows the key results for the overarching topic of ‘health + food’. The creation of the squib to develop the questions requires about 2-3 minutes, once the user understands what to do. Each return of the 15 questions requires about 10-15 seconds. The editing of the squib to create a new question requires about a minute. Finally, the results are returned after the user completes the selection of four questions and the selection of four answers for each. A reasonably sized Excel-based idea book with about 30 total pages (questions, answers, AI summarization) thus emerges in finished form within 25-30 minutes. It is important to note that some of the questions will repeat, and there will be overlaps from iteration to iteration. Even so, the Idea Coach, beginning as it did to ameliorate the problem of frustration and lack of knowledge, ended up being a unique teaching guide, truly a Socrates with a PhD-level degree. The correctness of the information emerging is not relevant. What is relevant is the highlighting of ideas and themes for the user to explore.

How Expressions of the Idea of ‘Health’ Generate Different Key Ideas and Suggested Innovations

Food and health are becoming inseparable, joined together at many levels. It is not the case that food is the same as health, except for some individuals who conflate the two. Yet it is obvious that there exists a real-world, albeit complex, connection between what we eat and how healthy we are. These connections manifest themselves in different ways, whether simply the co-variation of food and health [9], the decisions we make about food choice [12,13], our immediate thoughts about what makes a food healthy or healthful [14], and last but not least, how we respond behaviorally and attitudinally to claims made by advertisers and information provided by manufacturers [15,16].

The notion of critical thinking emerged as a way to investigate the differences in the way people use common terms to describe food and health. After many discussions about the topic, it became increasingly obvious that people bandied about terms conjoining health and food in many ways. The discussions failed to reveal systematic differences. The question then emerged as to whether critical thinking powered by AI could generate clear patterns of difference in language when different expressions about food and health were used as the starting points. In simple terms, the question became ‘do we see differences when we talk about a healthful food versus a health food?’

What Makes a Food HEALTHFUL?

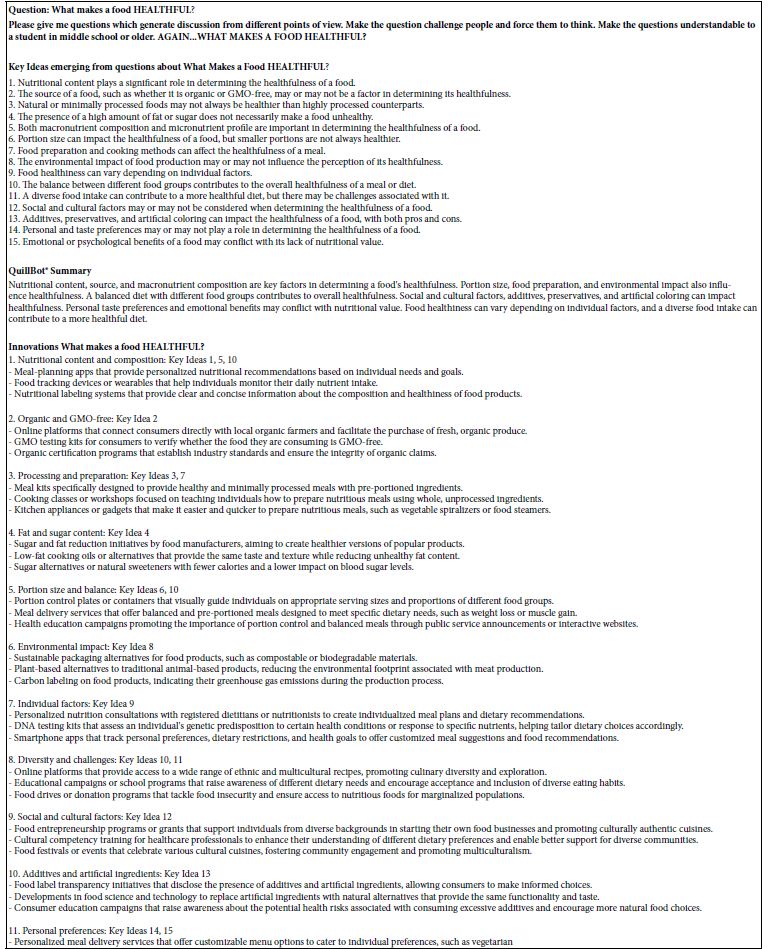

The first phrase investigated about foods and health is ‘What makes a food HEALTHFUL?’ The focus is on the word ‘healthful’ to express the main idea. Table 1 shows the question as presented in the squib, the 15 key ideas which emerge, an AI summarization of the key ideas by the new AI program, QuillBot [8,17], and finally suggested innovations based on the ideas. The bottom line for HEALTHFUL is that the output ends up providing a short but focused study guide to the topic created by the interests of the user, open to being enhanced by the user at will, and in reality, in minutes.

Table 1: AI results regarding the phrase ‘What makes a food healthful?’ The table is taken directly from the outputs of Idea Coach (key ideas, innovations) and from Quillbot®.

What Makes a Food HEALTHY?

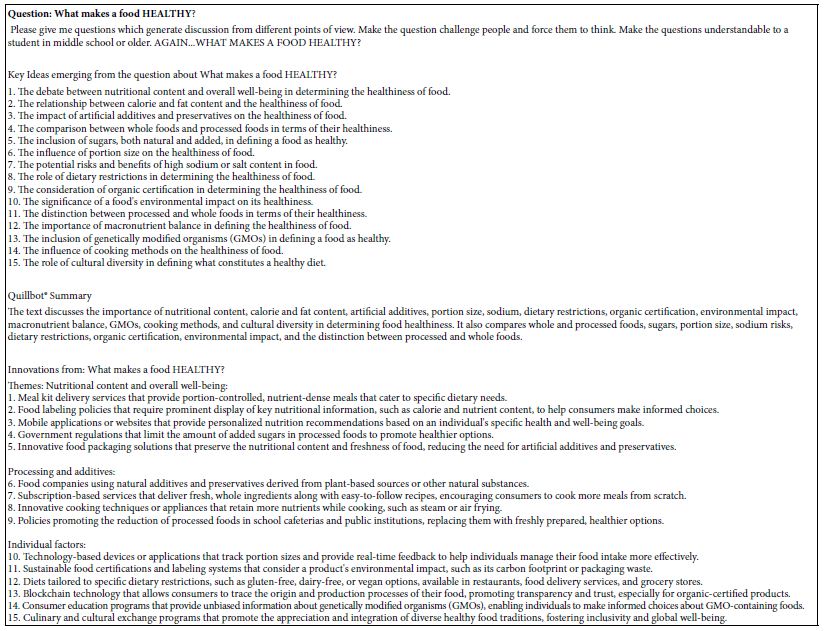

The second phrase investigated about foods and health is ‘What makes a food HEALTHY?’ The terms ‘healthy’ and ‘healthful’ are used interchangeably in modern usage, although there is a subtle but profound difference. The word ‘healthful’ refers to the effect that the food has on a third party, such as a person. The word ‘healthy’ refers to the food itself, as if the food were the third party. It is precisely this type of distinction which is part of the world of critical thinking, although the issue might go further to deal with the different implications of these two words.

The reality of the differences between healthful and healthy is suggested by Table 2, but not strongly. Table 2 again suggests a many-dimensional world of ideas surrounding the word ‘healthy’ when combined with the food. There is once again the reference to the food itself, as well as to the person. The key difference seems to be ‘morphological’, viz., the format of the output of AI. In Table 1 the key ideas were so numerous that the key ideas themselves generated different aspects to each idea. In contrast, Table 2 shows a far sparser result.

One clear opportunity for teaching critical thinking now emerges. That opportunity is to discuss the foregoing observation about the different morphologies of the answers, the reasons which might underlie those differences, and the type of ideas and innovations which emerge.

Table 2: AI results regarding the phrase ‘What makes a food healthy?’ The table is taken directly from the outputs of Idea Coach (key ideas, innovations) and from Quillbot®.

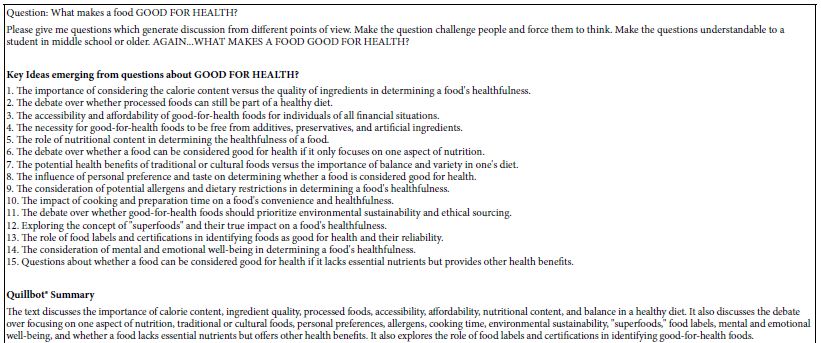

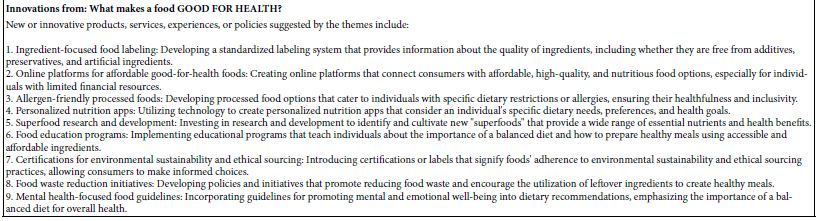

Table 3: AI results regarding the phrase ‘What makes a food good for health?’ The table is taken directly from the outputs of Idea Coach (key ideas, innovations) and from Quillbot®.

What Makes a HEALTH FOOD?

The fourth and final phrase investigated is ‘Health Food’. Table 4 shows the results emerging from the AI analysis. Once again AI returns with relatively simple ideas.

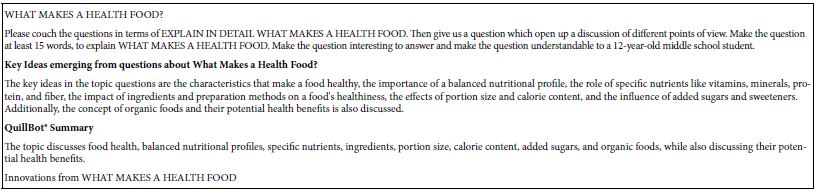

Table 4: AI results regarding the phrase ‘What makes a health food?’ The table is taken directly from the outputs of Idea Coach (key ideas, innovations) and from Quillbot®.

1. Meal delivery services that focus on providing healthy, balanced meals with optimal nutritional profiles.

2. Cooking classes or workshops that teach individuals how to cook using healthy ingredients and techniques.

3. Apps or websites that provide information on the nutritional content and ingredients of commonly consumed foods and beverages.

4. Nutritional labeling on restaurant menus to make it easier for individuals to make healthier choices when dining out.

5. Community gardens or urban farming initiatives that promote access to fresh, organic produce in urban areas.

6. Policies and regulations that require food manufacturers to disclose the amount of added sugars in their products.

7. Nutrient-dense food products or snacks that provide essential vitamins, minerals, and protein in a convenient and portable form.

8. Schools implementing nutrition education programs that teach children about the importance of healthy eating and the impact of food choices on their overall health.

9. Digital health platforms or apps that offer personalized nutrition plans based on an individual’s specific nutrient needs and goals.

10. Food labeling systems that use color-coded labels or symbols to indicate the nutritional quality of a product, making it easier for consumers to make healthier choices.

Discussion and Conclusions

The objective of this study is to explore the different ways of learning how to ask questions. A great deal of today’s research follows the path of the so-called ‘hypothetico-deductive’ system. The researcher begins with a hypothesis and runs an experiment to confirm or disconfirm that hypothesis, viz., to falsify if possible. The focus is often on the deep thinking to link the hypothesis to the experiment, then to analyze the results in a way which provides a valid answer [18]. The vast majority of papers in the literature begin with this approach, with the actual science focusing on the ability to test the hypothesis, and maybe add that hypothesis to our knowledge, a task often colloquially called ‘plugging holes in the literature.’

Mind Genomics, an emerging approach to the issues of everyday life, does not begin with a hypothesis, and does not follow the scientific logic of Popper and the notion of hypothesis-driven research. Instead, Mind Genomics begins as an explorer or cartographer might begin, looking for relations among variables, looking for regularities in nature, without however any underlying hypothesis about how nature ‘works’. As a consequence, the typical experiment in Mind Genomics begins with an interesting conjecture about what might be going on in the mind of a person regarding a topic. The outcome of a set of Mind Genomics experiments ends up being an aggregate of snapshots of how people think about different topics, this collection of snapshots put into a database for others to explore and summarize.

With the foregoing in mind, the topic of coming up with interesting questions becomes a key issue in Mind Genomics. If the approach is stated simply as ‘asking questions, and getting answers to these questions’, with no direct theory to guide the question, then in the absence of theory how can the system move forward? The science of Mind Genomics is limited to the questions that people can ask. How can we enable people to ask better questions, to explore different areas of a topic with their questions, and in such a way expand this science based on question and answer?

Acknowledgment

Many of the ideas presented in this paper have been taken from the pioneering work of the late Professor Anthony G. Oettinger of Harvard University, albeit after a rumination period going on to almost 60 years [19].

References

- Chin C, Brown DE (2002) Student-generated questions: A meaningful aspect of learning in science. International Journal of Science Education 24: 521-549.

- Washburne JN (1929) The use of questions in social science material. Journal of Educational Psychology 20: 321-359.

- Huitt W (1998) Critical thinking: An overview. Educational Psychology Interactive 3: 34-50.

- Willingham DT (2007) Critical thinking: Why it is so hard to teach? American Federation of Teachers Summer 2007: 8-19.

- Hoffmann B, Barzun J (2003) The tyranny of testing. Courier Corporation.

- Moskowitz HR (2012) ‘Mind Genomics’: The experimental, inductive science of the ordinary, and its application to aspects of food and feeding. Physiology and Behavior 107: 606-613.

- Slattery EL, Voelker CC, Nussenbaum B, Rich JT, Paniello RC, et al. (2011) A practical guide to surveys and questionnaires. Otolaryngology–Head and Neck Surgery 144: 831-837.

- Fitria TN (2021) QuillBot as an online tool: Students’ alternative in paraphrasing and rewriting of English writing. Englisia: Journal of Language, Education, and Humanities 9: 183-196.

- Kornstein B, Rappaport SD, Moskowitz H (2023a) Communication styles regarding child obesity: Investigation of a health and communication issue by a high school student researcher using Mind Genomics and artificial intelligence. Mind Genomics Studies in Psychology Experience 3: 1-14.

- Kornstein B, Deitel Y, Rappaport SD, Kornstein H, Moskowitz H (2023b) Accelerating and expanding knowledge of the everyday through Mind Genomics: Teaching high school students about health eating and living. Acta Scientific Nutritional Health 7: 5-22.

- Mendoza CL, Mendoza CI, Rappaport S, Deitel J, Moskowitz H (2023) Empowering young people to become researchers: What do people think about the different factors involved when shopping for food? Nutrition Research and Food Science Journal 6: 1-9.

- Caplan P (2013) Food, health and identity. Routledge.

- Rao M, Afshin A, Singh G, Mozaffarian D (2013) Do healthier foods and diet patterns cost more than less healthy options? A systematic review and meta-analysis. BMJ Open 3: e004277.

- Monteiro CA (2009) Nutrition and health. The issue is not food, nor nutrients, so much as processing. Public Health Nutrition 12: 729-731.

- Bouwman LI, Molder H, Koelen MM, Van Woerkum CM (2009) I eat healthfully but I am not a freak. Consumers’ everyday life perspective on healthful eating. Appetite 53: 390-398.

- Nocella G, Kennedy O (2012) Food health claims–What consumers understand. Food Policy 37: 571-580.

- Ellerton W (2023) The human and machine: OpenAI, ChatGPT, Quillbot, Grammarly, Google, Google Docs, & humans. Visible Language 57: 38-52.

- Kalinowski ST, Pelakh A (2023) A hypothetico-deductive theory of science and learning. Journal of Research in Science Teaching.

- Bossert WJ, Oettinger AG (1973) The integration of course content, technology and institutional setting. A three-year report. 31 May 1973. Project TACT, Technological Aids to Creative Thought.