Abstract

The paper introduces a new approach for understanding aspects of life, based upon the emerging science of Mind Genomics. The approach presents an internet-based technology (www.bimileap.com), which enables the interested person to use an AI-empowered system, Idea Coach, to ask for ideas. Originally developed as a way to help novices learn structured, critical thinking, Idea Coach has been expanded, allowing the user to describe a topic, posit the existence of mind-sets, and instruct the AI to answer a set of user-defined questions about these mind-sets. The Idea Coach system can be iterated to provide new sets of answers, each iteration requiring about 15-30 seconds. The Idea Coach request to AI can be changed ‘mid-stream’ to provide different types of information. The approach is illustrated with a deep analysis by Idea Coach of what might be in the mind of a person suffering from post-traumatic stress. The position of the paper is that AI can now be used to launch critical thinking about a topic, doing so by posing ‘what if’ types of questions to promote discussion and experimentation.

Introduction

It is commonly recognized that a vast number of internet searches are done to understand situations, events, and things that affect one personally [1-3]. The foregoing sentence seems so simple, so realistic, so obvious. The point is made cogently when one experiences a life-impacting situation, e.g., a disease with which one is newly diagnosed. What was an intellectual topic before may often evolve into an obsession with knowing as much as possible about this disease or other problem [4,5], often to the point that the individual’s entire focus and conversation revolve around the different aspects of the disease, the origins, diagnoses, prognoses, and so forth [6].

One consequence of the desire to ‘know’ about the things most important to us is the use of the Internet as a source of information. When the topic is health or medicine, most websites are careful to emphasize in one way or another that the reader should consult a medical professional for guidance, and that the information presented is for popular, informal consumption. Typically, the material presented to the reader is couched in an interesting, easy-to-understand, and engaging fashion. The information is usually superficial, a level which makes sense because the typical reader wants a quick overview.

The origin of this paper was the request to provide a deeper level of information about a medical topic, that level not being one that a medical student might learn, but certainly more structured and deeper than one might receive from a cursory search through Google.

The choice of PTSD was dictated by other reasons, namely a growing interest in dealing with social issues intertwined with medical issues. One of the important ones was providing deeper information about PTSD, a psychiatric disorder of interest to local town officials coping with the effects of exposure to violence among many of the town’s poorer citizens. Could Mind Genomics, and its AI component, Idea Coach, provide the user with new types of information?

The Historical Background

The approach presented here evolved over the 30-year span from the early 1990s. At that time author Howard Moskowitz and colleague Derek Martin had been expanding the scope of concept testing by creating what was then called IdeaMap [7], later to evolve into Mind Genomics [8]. The ingoing vision was to democratize the acquisition of insights in two ways, one by a new way of thinking about insights, the other by the vision of DIY, first at one’s own computer, and then on the internet.

Up to the introduction of IdeaMap, researchers tested new ideas in one of two ways. The first was called ‘promise’ testing, or some variation thereof. The ingoing notion was that the researcher would come up with a number of different ideas, and test these among prospective consumers. The ideas could be alternative executions of a basic idea or proposition (viz., rate each of these ideas for a new medicine), or the ideas could be even more basic (viz., rate the importance of each of these benefits of a new medicine, such as speed of relief, degree of relief, safety, etc.). These ideas could be rated in a study, but the basic notion is that the researcher could take a simple situation and have people rate facets of that situation, or take a simple offering, and have people rate ways of expressing what the offering could do. The methods were easy, and the respondent in the study had no problem, but the test stimuli lacked context, depth, and the richness of everyday life. Nonetheless, the researchers working in the field followed through on these, and often were quite successful because the study with real consumers gave the developers and marketers a great deal of insight.

A second approach, also widely used and complementing the promise test, was to create an execution, a stand-alone ‘concept’ of the problem or offering. The test stimulus in this case was a concept ‘board’, usually presenting a picture, product or service name, description, and so forth. The execution of the basic idea was as important to the corporation as the basic idea itself. The ingoing assumption was that people needed to be convinced, by having something which appealed to them, presented in a way that would be more typical, more ‘ecologically valid.’ The executions, the test concepts, demanded a great deal of judgment about what to put in, what to exclude, how to talk about the product or service, along with work to create the actual concept, e.g., what visuals were needed, and so forth. The studies were called concept screens when the concepts were rough, barebones descriptions, or called concept tests when the concepts were finished, and the testing was necessary to measure the expected performance in the marketplace.

The Contribution of Mind Genomics

When Mind Genomics appeared on the scene in its evolved form, beginning in the first years of this 21st century [8], the ingoing notion was that people should be able to respond easily to combinations of messages, even when the messages were not necessarily connected, but rather thrown together. Unpublished work with respondents, beginning in 1980 with The Colgate Palmolive Company in Toronto, Canada, evaluating new ideas for Colgate Dental Cream, uncovered the surprising observation that respondents reported NO DIFFICULTIES when they were exposed to combinations of messages in this sparse framework. There were, however, ‘concerns’ from the advertising agency.

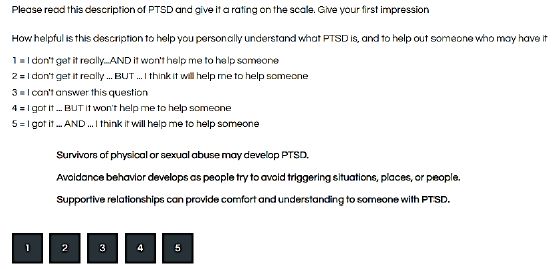

This study is about PTSD, post-traumatic stress disorder [9], but the reality is that the approach presented here could be used for virtually any topic. Figure 1 shows the structure of a concept, or ‘vignette’ in the language of Mind Genomics. The figure shows a spare structure comprising a short introductory instruction, a small collection of unconnected phrases, albeit all dealing with PTSD, and then the rating scale. After an initial shock of seeing such a spare format, most respondents adjust quickly, going through the vignettes by ‘skimming’, and then making a quick decision about what rating to assign. The reality is that in most studies with concepts the respondent skims the concept, grazing for information, rather than reading the material.

Figure 1: Example of a vignette about PTSD (post-traumatic stress disorder)

The important advance of Mind Genomics was the focus on the material, not on the execution. It was the questions and the answers that were important. In the actual execution, the Mind Genomics platform, www.bimileap.com, would combine the answers into vignettes, according to an underlying experimental design. The questions would not appear in the vignettes, only the answers. Respondents participating found this format easy, perhaps slightly boring, but not intimidating at all. The people who were intimidated turned out to be the users wanting to use Mind Genomics. These prospective users ended up having to provide questions and answers, a task that seemed to be so easy to do when Mind Genomics was first developed, but which turned out to be intimidating as real people were exposed to the task. It had been unclear until that point how difficult people found the job of thinking about a topic, coming up with questions which tell a story, and then coming up with answers to those questions.

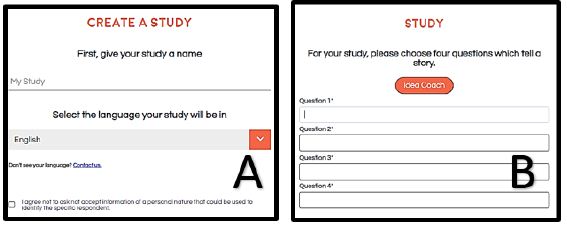

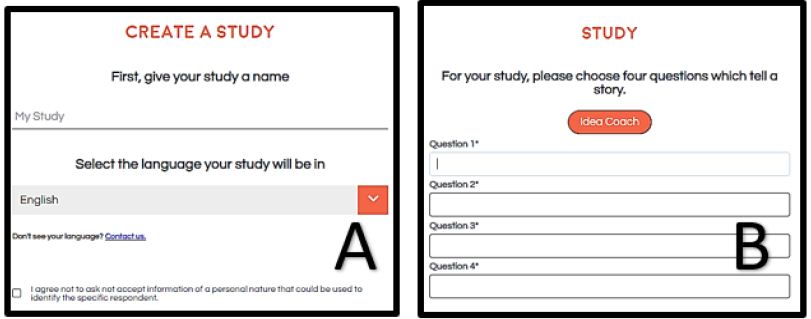

The Mind Genomics approach ended up disposing of the believed requirement that the vignettes presented to the respondent be complete vignettes. Rather, it was simpler to create a template that the user could complete. Rather than using statistical jargon, and talking about underlying experimental design, everything was put into the template, requiring the user simply to think of ideas. In the most recent format, the user would be instructed to give the project a name (Figure 2, Panel A), and then provide the template with four questions telling a story (Figure 2, Panel B), and then for each question, four answers (not shown).

Figure 2: Set up screens for a Mind Genomics study. Panel A shows the first step, to name the study. Panel B shows the instructions to provide four questions.
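As a rough illustration of how a completed template (four questions, each with four answers) might be turned into vignettes that show only the answers, the following Python sketch may help. It is not the platform's implementation: the experimental design used by www.bimileap.com is not specified here, so random selection with at most one answer per question stands in for it. The names `TEMPLATE`, `build_vignette`, `build_vignettes`, and the minimum of two elements per vignette are all assumptions made for illustration, and the question and answer texts are placeholders.

```python
import random

# Hypothetical study template: four questions, four answers each.
# The texts are placeholders, not material from the actual study.
TEMPLATE = {
    "Question A": ["A1", "A2", "A3", "A4"],
    "Question B": ["B1", "B2", "B3", "B4"],
    "Question C": ["C1", "C2", "C3", "C4"],
    "Question D": ["D1", "D2", "D3", "D4"],
}


def build_vignette(template, rng, min_elements=2):
    """Return a list of answers, at most one per question.

    Assumption: random selection stands in for the platform's
    experimental design, which is not specified in the text.
    """
    questions = list(template)
    # Decide how many questions contribute an answer to this vignette.
    k = rng.randint(min_elements, len(questions))
    chosen = rng.sample(questions, k)
    # Keep the template's question order; only answers are returned,
    # so the question text never appears in the vignette.
    return [rng.choice(template[q]) for q in questions if q in chosen]


def build_vignettes(template, n, seed=0):
    """Build n vignettes reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [build_vignette(template, rng) for _ in range(n)]


if __name__ == "__main__":
    for i, vignette in enumerate(build_vignettes(TEMPLATE, n=3), start=1):
        print(f"Vignette {i}:")
        for answer in vignette:
            print(f"  {answer}")
```

A real study would replace the random draw with a proper experimental design so that each answer appears in a balanced way across vignettes; the sketch only shows the structural idea that answers, not questions, are combined.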

Mind Genomics requires that the user think critically. Figure 2, Panel B instructs the user to create a set of four questions which ‘tell a story’. For many neophytes, individuals who wanted to experience what Mind Genomics could do for them, the task seemed overwhelming. In order to ameliorate this problem, Mind Genomics evolved to incorporate AI, through Idea Coach. Figure 3, Panel A shows the ‘squib’, which allows the user to write a request to Mind Genomics, and in turn have AI use that request to create the questions, as well as create the answers (not shown). Figure 3, Panel B shows the four questions which were chosen.

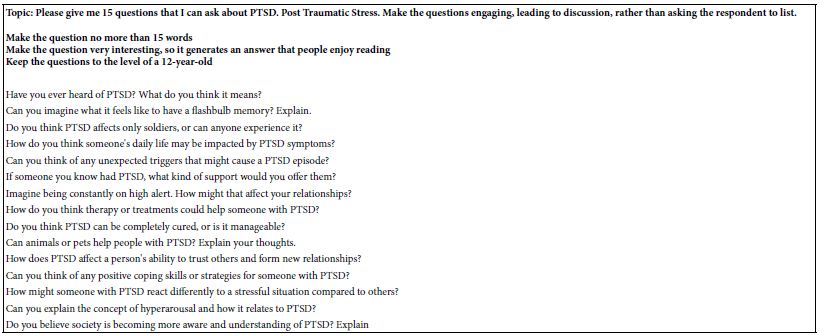

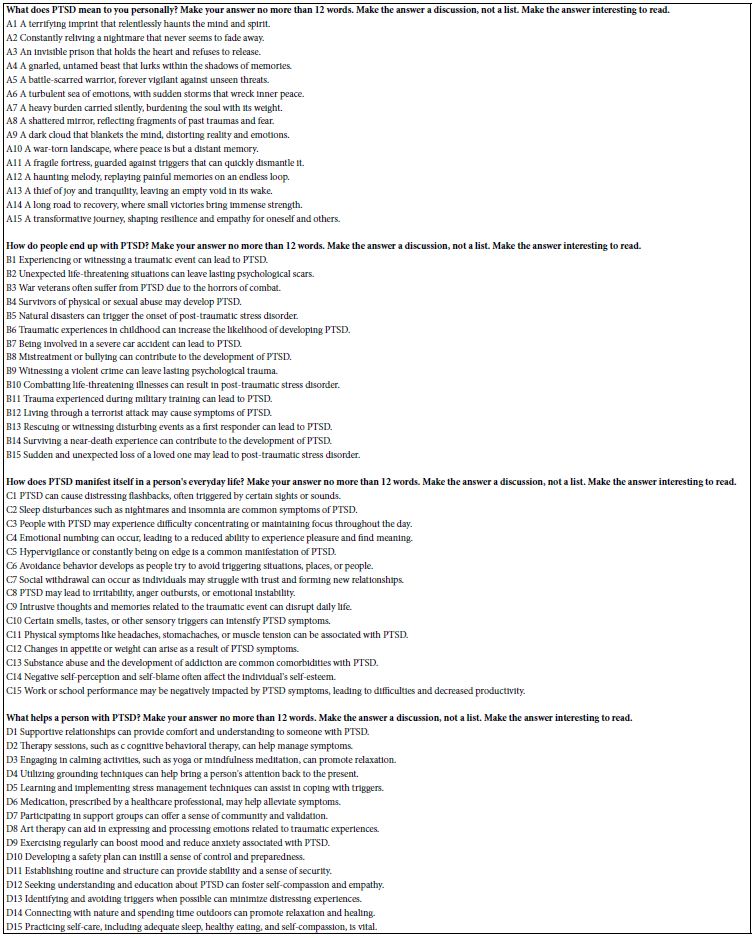

The actual output of Idea Coach appears in Tables 1 and 2, respectively. Table 1 shows the 15 questions that Idea Coach returned in response to the request. From these, the user selected four questions, shown in Figure 3, Panel B. Table 2 shows the 15 answers to each question provided by Idea Coach.

Table 1: Output of 15 questions from Idea Coach, with the output emerging immediately after the request was given to Idea Coach.

Table 2: The four sets of 15 answers, one set for each question selected (see Figure 3, Panel B)

Figure 3: Panel A shows a request to Idea Coach to provide relevant questions for the study. Panel B shows four questions which emerged from Idea Coach.

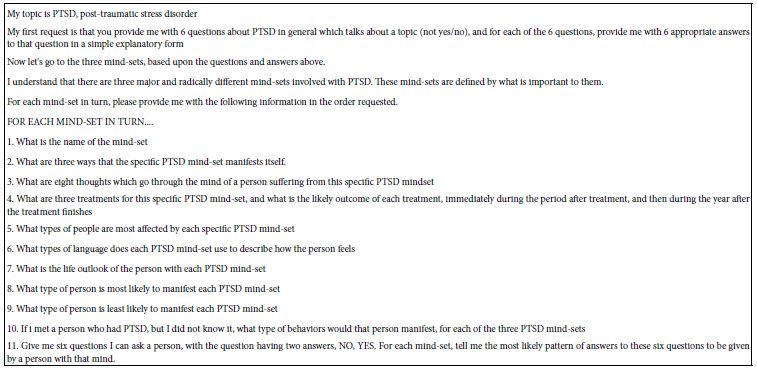

Moving Beyond the Question-and-Answer Format to the Tutoring/Coaching System

The success of Idea Coach as a way to provide questions and answers gave rise to another discovery, one which motivates this paper among others. That discovery is what emerges when the user asks more of Idea Coach than simply providing questions to address a simple squib (Table 1), or answers to a simple question (Table 2). Table 3 shows the squib for PTSD, but with a far more elaborate request. The request ‘assumes’ without specification that PTSD has three different mind-sets. The squib does not specify anything about these mind-sets, but rather requests Idea Coach to answer 11 different questions.

Table 3: A more elaborate squib to both get answers about PTSD and to teach about PTSD

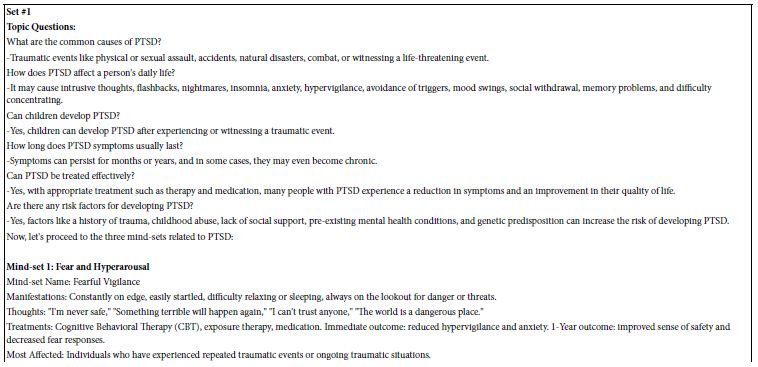

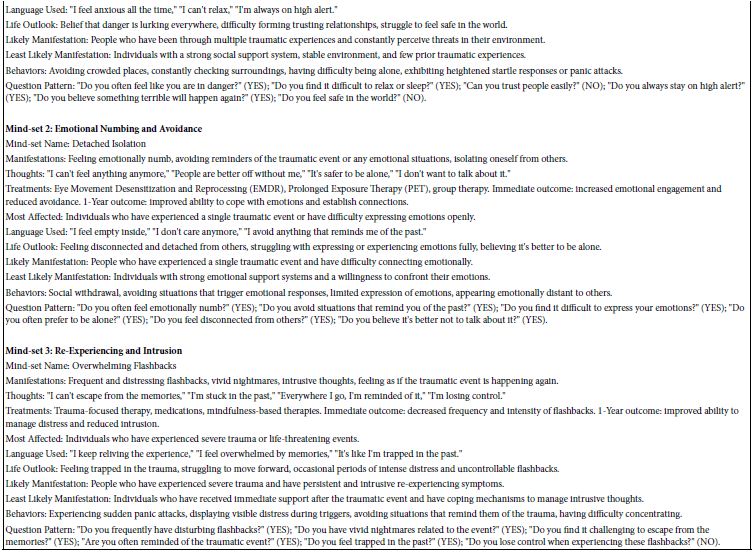

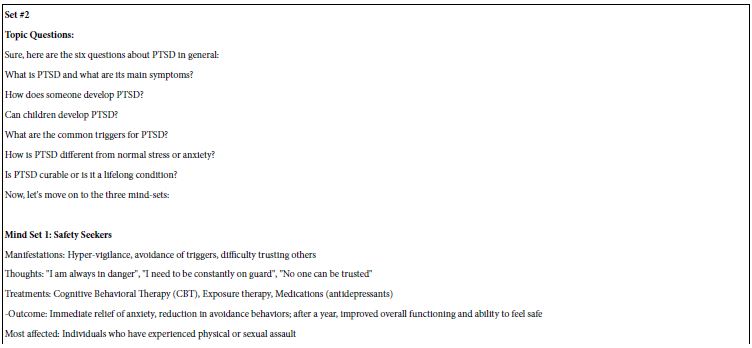

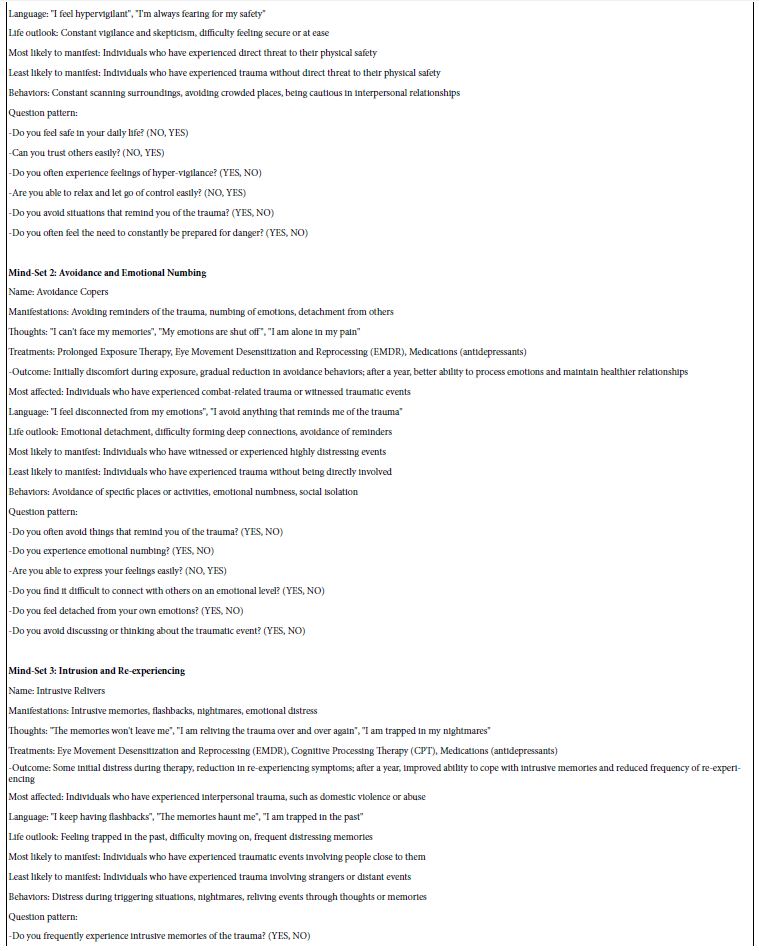

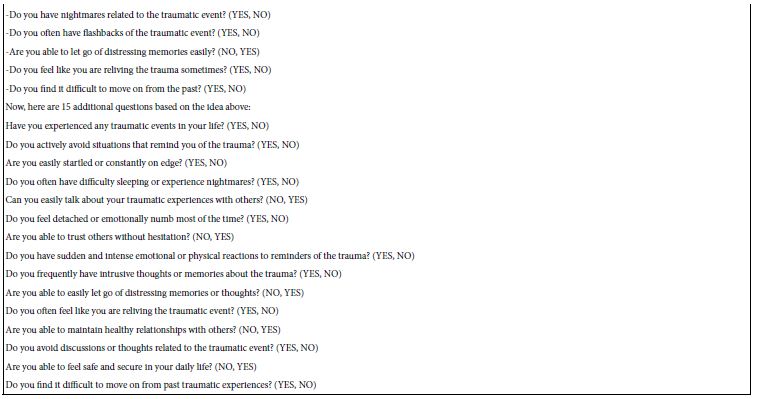

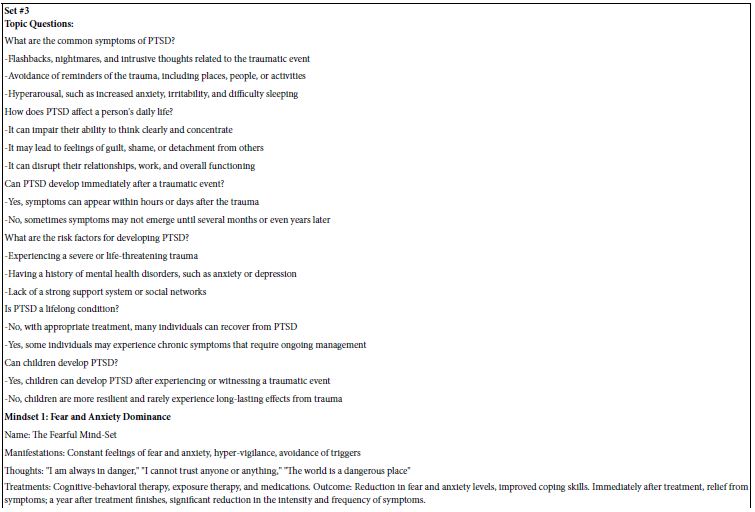

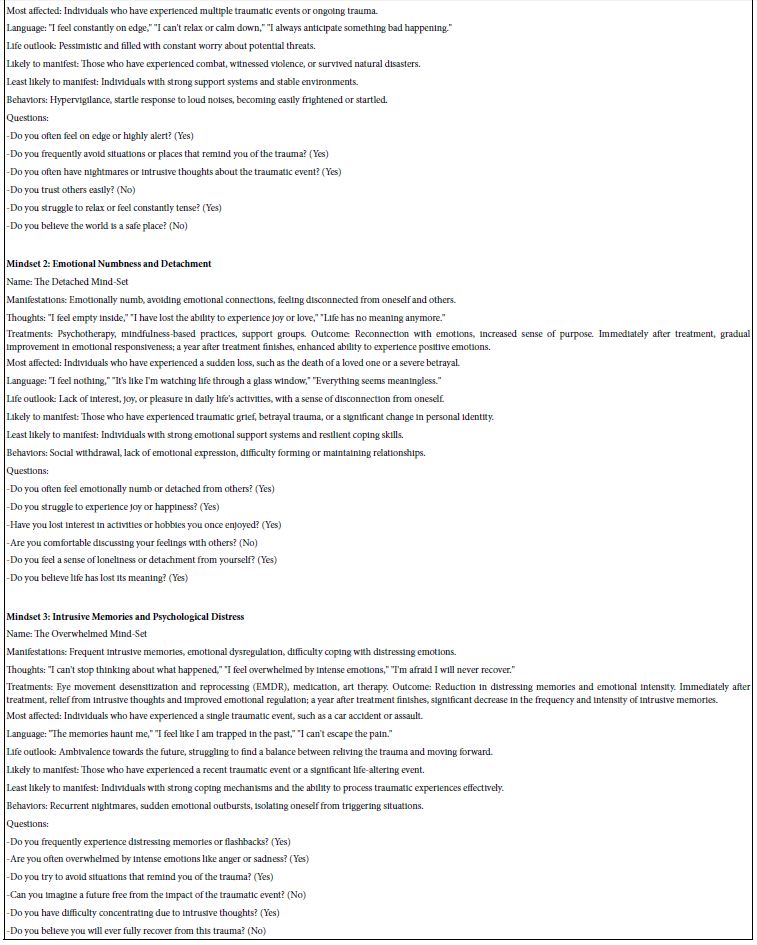

In turn, Tables 4-6 show three sequential runs of Idea Coach, each taking about 30 seconds. Idea Coach uses the same squib shown in Table 3, and returns each time with a unique set of answers, albeit answers with substantial commonality.

Table 4: The first set of answers to the squib shown in Table 3. Idea Coach attempts to provide answers to each question, viz., to each request.

Table 5: The second set of answers to the squib shown in Table 3. Idea Coach attempts to provide answers to each question, viz., to each request.

Table 6: The third set of answers to the squib shown in Table 3. Idea Coach attempts to provide answers to each question, viz., to each request.
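The pattern behind Tables 3-6, an expanded squib that posits mind-sets and lists numbered requests, submitted several times unchanged, can be sketched in Python as follows. This is only a sketch under stated assumptions: the function names `build_squib` and `run_iterations`, the wording of the squib, and the placeholder requests are hypothetical, and the `ask` argument is a stand-in that the reader would have to supply, since no programmatic interface to Idea Coach is described here.

```python
from typing import Callable, List


def build_squib(topic: str, n_mind_sets: int, requests: List[str]) -> str:
    """Compose an expanded squib: topic, assumed mind-sets, numbered requests.

    The wording is hypothetical; it is not the text of Table 3.
    """
    lines = [
        f"Topic: {topic}",
        f"Assume there are {n_mind_sets} different mind-sets for this topic.",
        "For each mind-set, please answer the following:",
    ]
    lines += [f"{i}. {req}" for i, req in enumerate(requests, start=1)]
    return "\n".join(lines)


def run_iterations(squib: str, ask: Callable[[str], str], n_runs: int = 3) -> List[str]:
    """Send the same squib n_runs times and collect each response."""
    return [ask(squib) for _ in range(n_runs)]


if __name__ == "__main__":
    # Placeholder requests; the squib in Table 3 contains 11 of them.
    requests = [f"<request {i}>" for i in range(1, 12)]
    squib = build_squib("PTSD", 3, requests)

    # Stub standing in for the AI; replace with a real call if one is available.
    counter = iter(range(1, 100))

    def fake_ask(text: str) -> str:
        return f"response {next(counter)} to a squib of {len(text.splitlines())} lines"

    for answer in run_iterations(squib, fake_ask):
        print(answer)
```

Keeping the squib fixed and varying only the run mirrors the procedure described above, where each run returns a different set of answers to the same request.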

Discussion and Conclusions

During the course of the research and writing, a task requiring less and less time and effort, AI has been both lauded and lambasted, lauded because of the possibilities it has, lambasted because it is far from being omniscient and fair [10]. The world of academics is struggling with the impact of the widely available ChatGPT system and its clones [11], worrying about cheating [12], and about the reliance of students on AI for their knowledge and even for writing the papers that they turn in for coursework [13].

The approach presented here may provide a different pattern of activities, one which incorporates early-stage learning with AI as a tutor, and then experimentation with real people, or eventually even with synthetic respondents, viz., survey takers constructed by AI. The paper began with the history of Mind Genomics, the problems with critical thinking, and the salutary effects of introducing AI as an aid to creating questions, and then providing answers to those questions. The paper ‘ended’ with the benefits emerging from a more detailed introduction to the issue, that introduction created simply by expanding the nature of the squib, the introduction to the problem. Rather than a simple instruction to produce questions and answers, the paper shows how a more detailed request to AI could produce a wealth of information.

The suggestion made here is quite simple: REVERSE THE PROCESS. That is, for a designated topic, e.g., PTSD, begin the process with a tutorial based on a detailed request, such as that shown in Table 3. Once the tutorial has finished, the Idea Coach, acting as a true coach, has done its work, producing sufficient information about PTSD. It is now entirely in the hands of the user to do the Mind Genomics experiment with real people, using the information conveyed to the user by the expanded squib. Whether the user must use their own questions and answers or can once again use Idea Coach to request questions and answers is a policy decision, one beyond the scope of this paper.

References

- Ellery PJ, Vaughn W, Ellery J, Bott J, Ritchey K, et al. (2008) Understanding internet health search patterns: An early exploration into the usefulness of Google Trends. Journal of Communication in Healthcare 1: 441-456.

- Mellon J (2014) Internet search data and issue salience: The properties of Google Trends as a measure of issue salience. Journal of Elections, Public Opinion & Parties 24: 45-72.

- Ripberger JT (2011) Capturing curiosity: Using internet search trends to measure public attentiveness. Policy Studies Journal 39: 239-259.

- Svenstrup D, Jørgensen HL, Winther O (2015) Rare disease diagnosis: a review of web search, social media and large-scale data-mining approaches. Rare Diseases 3: p.e1083145.

- Zuccon G, Koopman B, Palotti J (2015) Diagnose this if you can: On the effectiveness of search engines in finding medical self-diagnosis information. In: Advances in Information Retrieval: 37th European Conference on IR Research, ECIR 2015, Vienna, Austria, March 29-April 2, 2015. Proceedings 37 562-567. Springer International Publishing.

- Endicott NA, Endicott J (1963) Objective measures of somatic preoccupation. The Journal of Nervous and Mental Disease 137: 427-437.

- Moskowitz HR, Martin D (1993) How computer aided design and presentation of concepts speeds up the product development process. In: ESOMAR Marketing Research Congress 405.

- Moskowitz HR, Gofman A, Beckley J, Ashman H (2006) Founding a new science: Mind genomics. Journal of Sensory Studies 21: 266-307.

- Shalev AY (2001) What is posttraumatic stress disorder? Journal of Clinical Psychiatry 62: 4-10.

- Suchman L (2023) Imaginaries of omniscience: Automating intelligence in the US Department of Defense. Social Studies of Science 53: 761-786.

- Guleria A, Krishan K, Sharma V, Kanchan T (2023) ChatGPT: ethical concerns and challenges in academics and research. The Journal of Infection in Developing Countries 17: 1292-1299.

- Cotton DR, Cotton PA, Shipway JR (2023) Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International, 1-12.

- Chan CKY (2023) A comprehensive AI policy education framework for university teaching and learning. Int J Educ Technol High Educ 20: 38.