Abstract

The project objective was to explore the opportunity of Gaza as a new Singapore, using AI as the source of suggestions to spark discussion and specify next steps. The results show the ease and directness of using AI as an ‘expert’. The materials presented here were developed through the Mind Genomics platform BimiLeap.com and SCAS (Socrates as a Service). The results show the types of ideas developed, slogans to summarize those ideas, AI-developed scales to profile the ideas on a variety of dimensions, and then five ideas expanded in depth. The paper finishes with an analysis of the types of positive versus negative responses to the specific solutions recommended, allowing the user to prepare for next steps, both to secure support from interested parties and to defend against ‘nay-sayers’.

Introduction

Europe had been devastated by Germany’s World War II invasion. The end of World War II left Germany in ruins. The Allies had deported many Germans, leveled Dresden, destroyed the German economy, and almost destroyed the nation. From such wreckage emerged a more dynamic Germany with democratic values and a well-balanced society. World War II ended 80 years ago, yet its effects are still being felt today. The Israel-Hamas battle raises the issue of whether Gaza can be recreated like Germany, or perhaps more appropriately like Singapore. Can Gaza become the Singapore of the Middle East? This article explores what types of suggestions might work for Gaza to become another Singapore, with Singapore used here as a metaphor.

Businesses have already used AI to solve problems [1,2]. The idea of using AI as an advisor to help with the ‘social good’ is also not new [3-5]. What is new is the wide availability of AI tools, such as ChatGPT and other large language models [6]. Here, ChatGPT is instructed to provide replies to issues of social importance, specifically how to re-create Gaza as another Singapore. As we shall see, the nature of a problem’s interaction with AI also indicates its solvability, its acceptance by various parties, and the estimated years to completion.

The Process

First, we prompted SCAS, our AI system, to answer a basic question. The prompt appears in Table 1. SCAS was instructed to ask a question, respond in depth, surprise the audience, and end with a slogan. After that, SCAS was instructed to evaluate the suggestion and rate its response on nine dimensions. Eight of the dimensions were rated from 0 to 100; the ninth was the number of years it would take for the program or idea to succeed.

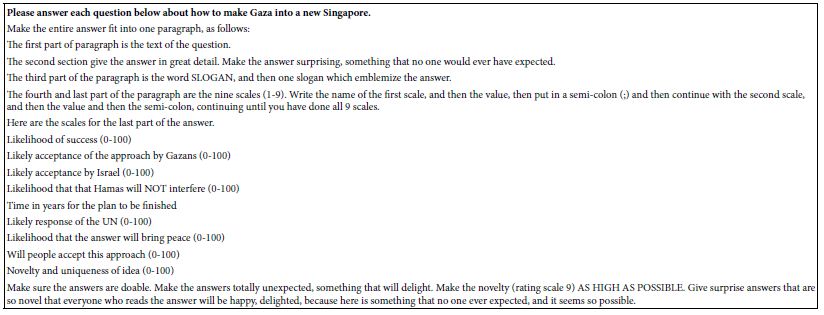

Table 1: The request given to the AI (SCAS, Socrates as a Service)

The Mind Genomics platform on which this project was run allows the user to type in the request and press a single button; within 15 seconds the set of answers appears. Although the request asks for 10 different solutions, the program usually returns far fewer. To get more ideas, we simply ran the program for several iterations, that is, we pressed the button and ran the study again. Each time the user pressed the button, the program returned a new set of ideas, with slogans and evaluations. Table 2 shows 37 suggestions emerging from nine iterations, the total time taking about 10 minutes. The benefit of running many iterations is that the AI program does all the ‘thinking,’ all the ‘heavy lifting’.

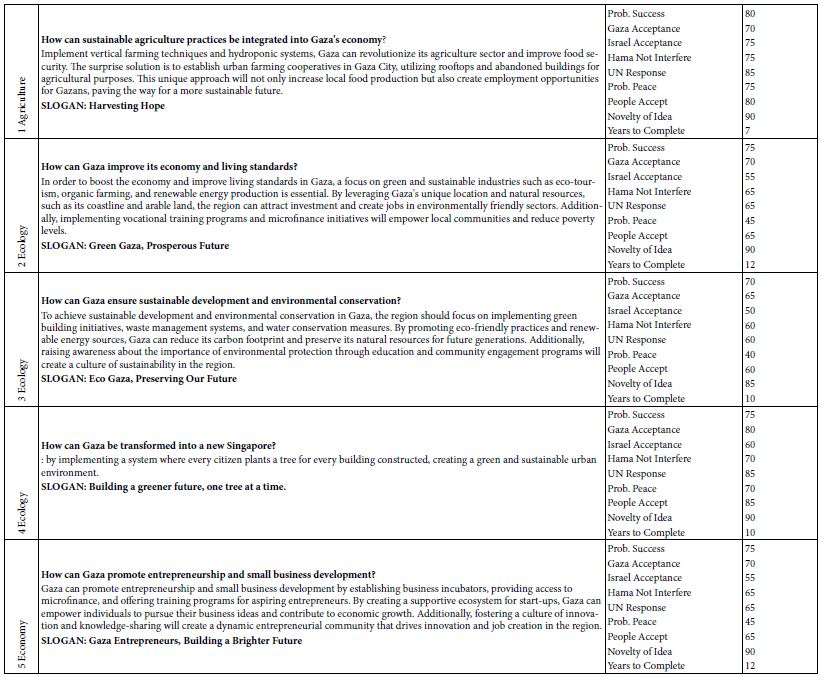

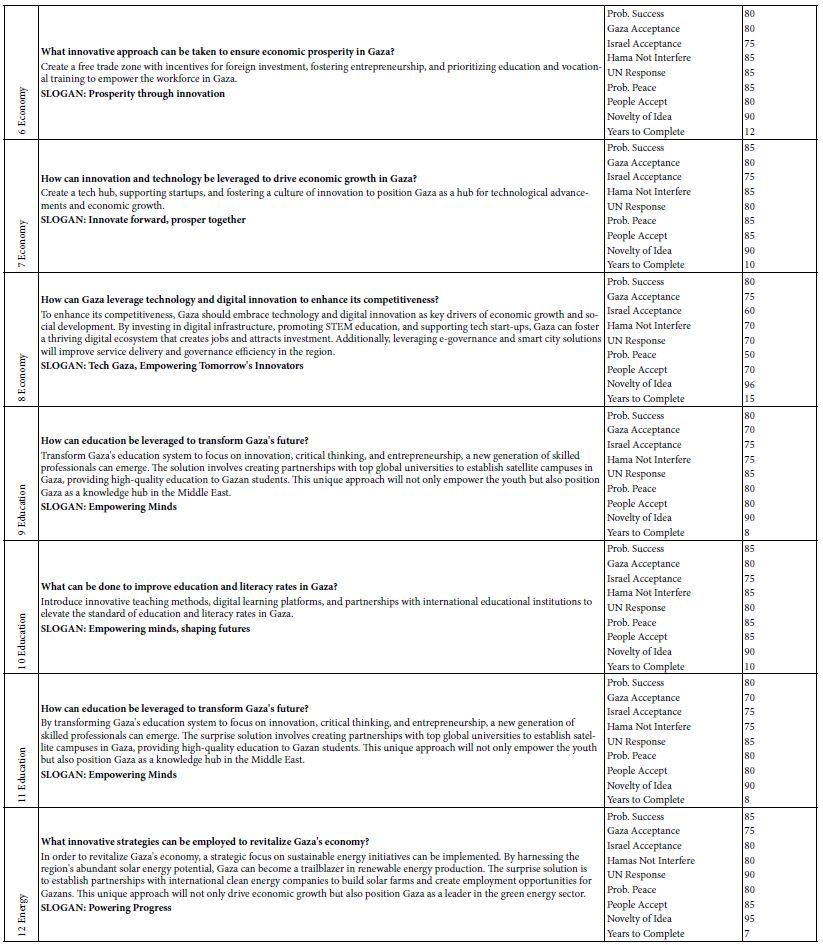

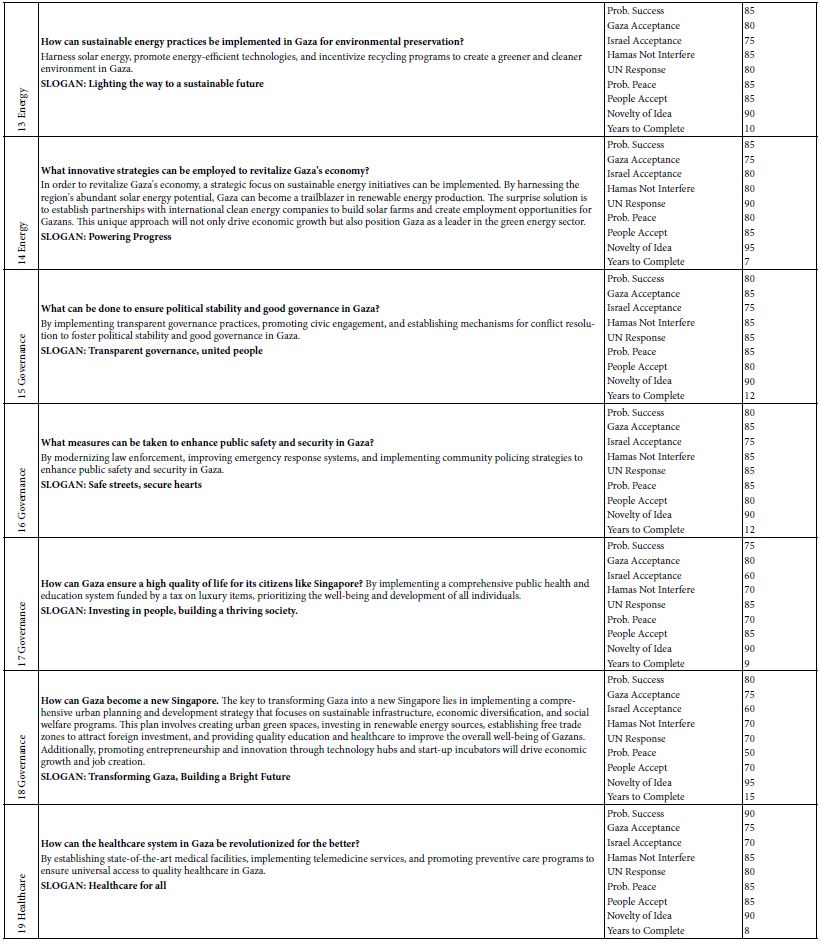

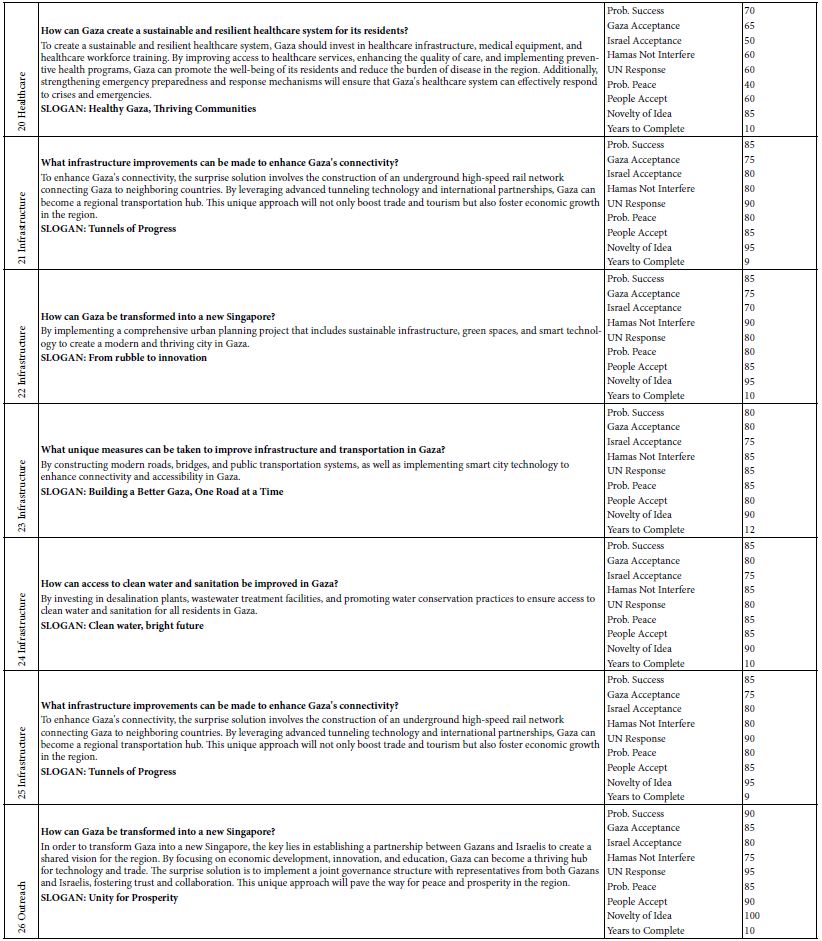

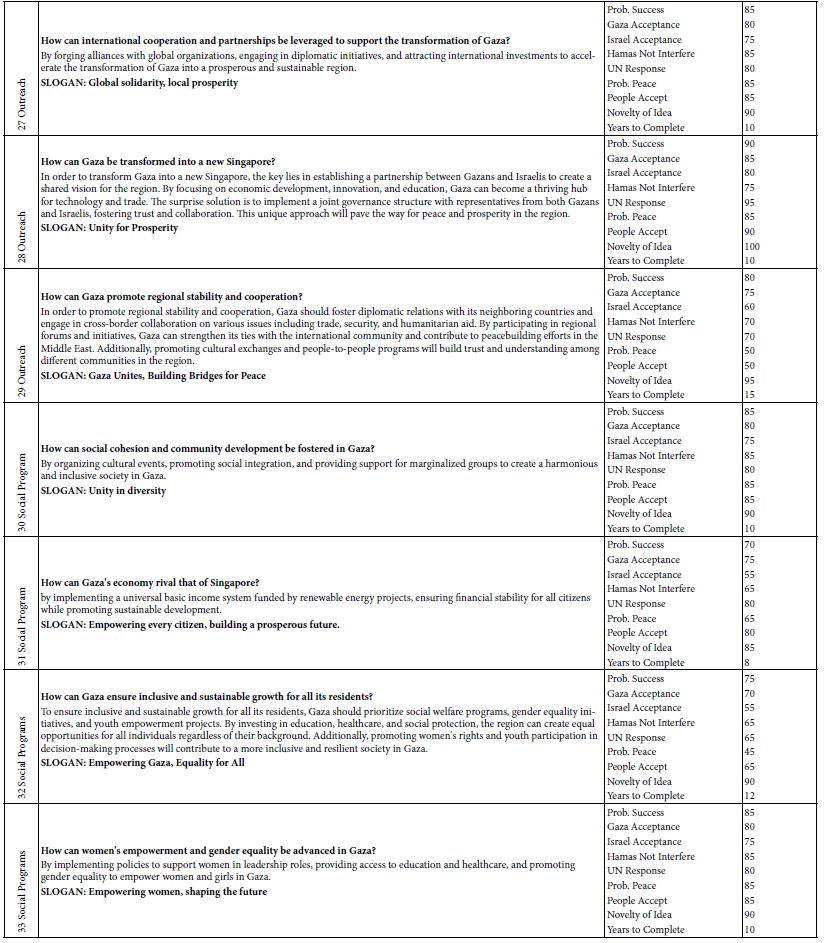

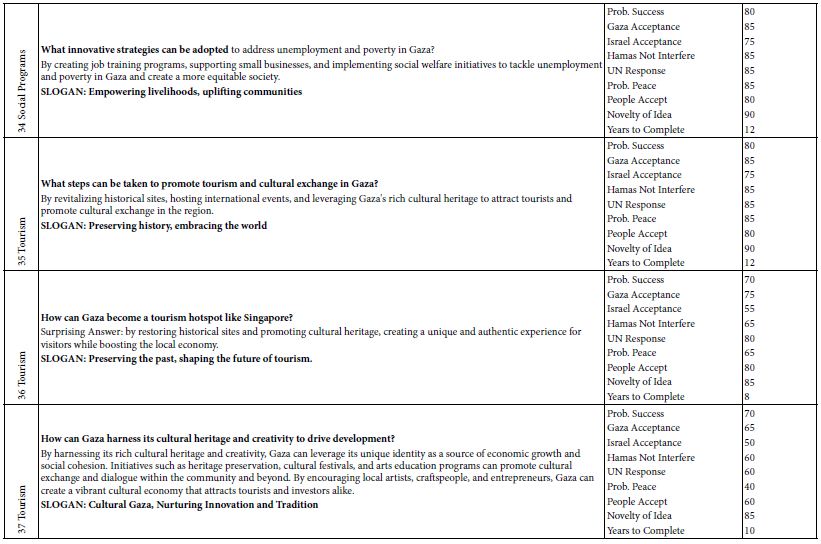

Table 2: The suggestions emerging from eight iterations of SCAS, put into a format which allows these suggestions to be compared to each other.

Table 2 is sorted by the nature of the solution, with the categories for the sorting provided by the user. Each iteration generates an assortment of different types of suggestions, viz., some appropriate for ‘ecology’, others for ‘energy’, others for ‘governance’, and so forth. Furthermore, across the many iterations, even the ‘look’ of the output changes. Some outputs comprised detailed paragraphs, other outputs comprised short paragraphs. The physical format gave the sense that the machine was being operated by a person whose attention to detail oscillated from iteration to iteration. Nonetheless, the AI did generate meaningful questions and seemingly meaningful answers to those questions, and it assigned the ratings in a way that a person might.

Because the table is sorted by the types of suggestions, the same topic (e.g., ecology) may come up in different iterations, and with different content. The AI program does not make any differentiation, but rather seems to behave in a way that we would call ‘does whatever comes into its mind’. By running 10, 20, or 30 iterations, each returning two, three, or four suggestions, the user can create a large number of alternative solutions for consideration. Some of these will, of course, be duplicates. Many will not. A number will be different responses, different points of view, or different things to do about the same problem, such as the economy.
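The pooling step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `pool_suggestions`, the `(category, text)` record layout, and the sample suggestions are hypothetical placeholders, not output from BimiLeap.com or SCAS, and "duplicate" is taken to mean the same text after lowercasing and collapsing whitespace.

```python
from collections import defaultdict


def pool_suggestions(iterations):
    """Merge suggestions from several iterations, dropping exact repeats.

    `iterations` is a list of runs; each run is a list of
    (category, text) pairs. Two suggestions are treated as duplicates
    when their text matches after lowercasing and collapsing whitespace.
    """
    seen = set()
    by_category = defaultdict(list)
    for suggestions in iterations:
        for category, text in suggestions:
            key = " ".join(text.lower().split())
            if key in seen:
                continue  # already collected in an earlier iteration
            seen.add(key)
            by_category[category].append(text)
    return dict(by_category)


# Hypothetical placeholder data for three runs (NOT the study's results).
runs = [
    [("ecology", "Restore coastal wetlands"), ("energy", "Build solar farms")],
    [("energy", "build  solar farms"), ("governance", "Open a one-stop permit office")],
    [("ecology", "Expand desalination capacity")],
]

pooled = pool_suggestions(runs)
for category, ideas in pooled.items():
    print(category, len(ideas), ideas)
```

Near-duplicates that differ in wording would survive this simple text match; sorting them into the same category, as in Table 2, still requires the user's judgment.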

Expanding the Ideas

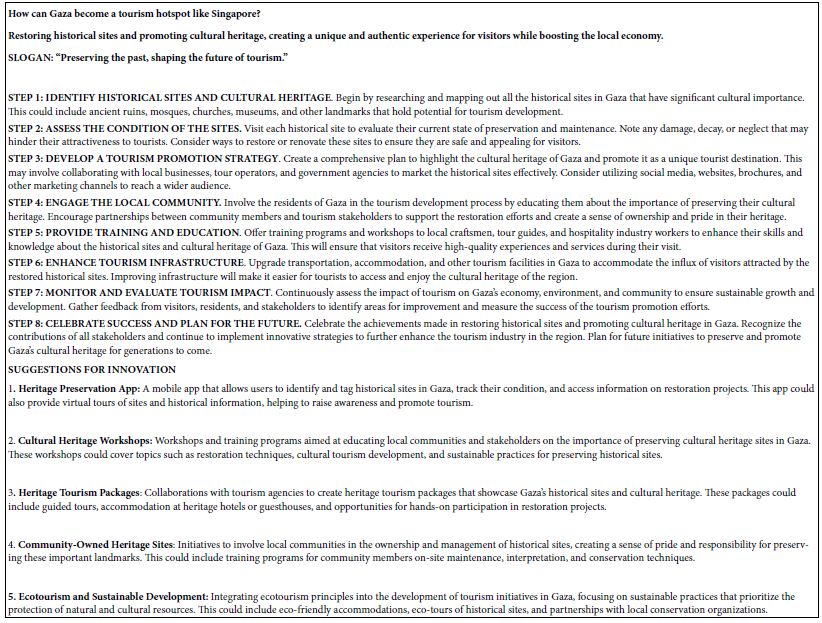

The next step was to select five of the 37 ideas and, for each of these five ideas, to instruct the AI (SCAS) to ‘flesh out’ the idea with exactly eight steps. Table 3 shows the instructions. Tables 4-7 show the four ideas, each with the eight steps (left to SCAS to determine). At the bottom of each table are ‘ideas for innovation’, which SCAS provides when it summarizes the data at the end of the study. The Mind Genomics platform ‘summarizes’ each iteration, and one of the summaries is the ideas for innovation. These appear at the bottom of each of the five sections, after the presentation of the eight steps.

Table 3: The prompts to SCAS to provide deeper information about the suggestion previously offered in an iteration


Table 4: The eight steps to help Gaza become a tourism hotspot like Singapore

Table 5: The eight steps to make Gaza ensure inclusive and sustainable growth for all its residents

Table 6: The eight steps to help Gaza improve its economy and living standards

Table 7: The eight steps to help Gaza promote entrepreneurship and small business development

The Nature of the Audiences

Our final analysis is done by the AI, with the SCAS program running in the background after the studies have been completed. The final analysis gives us a sense of who the interested audiences might be for these suggestions and where we might find opposition. Again, this material is provided by the AI itself in response to human prodding. Table 8 shows these two types of audiences, those interested versus those opposed, respectively.

Table 8: Comparing interested vs opposing audiences

Relations Among the ‘Ratings’

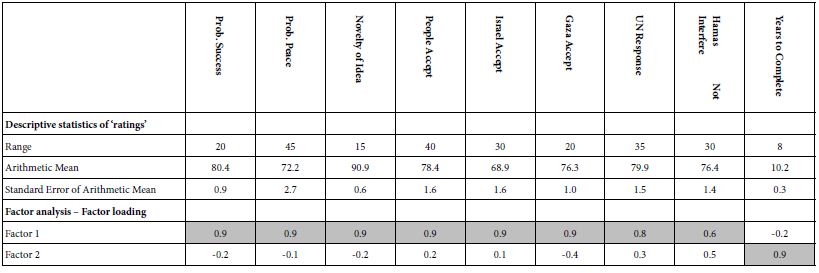

The 37 different suggestions across many topic areas provide us with an interesting set of ratings assigned by the AI. One question which emerges is whether these ratings actually mean anything. Some ratings are high, some ratings are low. There appears to be differentiation across the different rating scales and, within a rating scale, across the different suggestions developed by AI. That is, across the 37 different suggestions, each rating scale shows variability. The question is whether that is random variability, which makes no sense, or meaningful variation, the meaning of which we may not necessarily know. It is important to keep in mind that each set of ratings was generated entirely independently, so there is no way for the AI to try to be consistent. Rather, the AI is simply instructed to assign a rating. Table 9 shows the statistics for the nine scales. The top part shows the three descriptive statistics: range, arithmetic mean, and standard error of the mean. Keeping in mind that these ratings were assigned totally independently for each of the 37 proposed solutions (Table 2), it is surprising that there is such variability.
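As a minimal sketch of how the three descriptive statistics could be computed for each scale, the Python below uses only the standard library. The scale names and ratings are hypothetical placeholders rather than the study's data, and two choices are assumptions: ‘range’ is taken as maximum minus minimum, and the standard error uses the sample standard deviation (n − 1 denominator) divided by the square root of n.

```python
import math
import statistics


def describe(ratings):
    """Return range, arithmetic mean, and standard error of the mean."""
    n = len(ratings)
    sample_sd = statistics.stdev(ratings)  # sample SD, n - 1 denominator
    return {
        "range": max(ratings) - min(ratings),
        "mean": statistics.mean(ratings),
        "sem": sample_sd / math.sqrt(n),
    }


# Hypothetical placeholder ratings (NOT the values behind Table 9).
scales = {
    "scale_1": [60, 70, 80, 90],  # a 0-100 scale
    "years": [2, 4, 6, 8],        # years to completion
}

summary = {name: describe(values) for name, values in scales.items()}
for name, stats in summary.items():
    print(name, stats)
```

With the actual data, each list would hold the 37 ratings for one scale.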

The second analysis shows something even more remarkable. A principal components factor analysis [7] enables the reduction of the nine scales to a limited set of statistically independent ‘factors’. Each original scale correlates to some degree with these newly created independent factors. Two factors emerged. The loadings of the original nine scales suggest that Factor 1 comprises the eight scales covering performance as well as novelty, whereas Factor 2 is years to complete. The structure that emerges across the AI’s independent ratings of 37 suggestions is clear, totally unexpected, and at some level quite remarkable. At the very least, one might say that there is hard-to-explain consistency (Table 9).

Table 9: Three statistics for the nine scales across 37 suggested solutions
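The kind of two-factor structure described above can be illustrated with a short NumPy sketch. It is not the analysis reported in Table 9: the data are synthetic (eight scales driven by one shared latent variable plus an independent ‘years’ column, an assumption chosen to mimic the reported pattern), and the sketch extracts unrotated principal components from the correlation matrix, whereas the rotation and software used in the study are not stated.

```python
import numpy as np

rng = np.random.default_rng(0)
n_suggestions = 37

# Synthetic stand-in data: eight scales share one latent driver,
# the ninth column ("years") is generated independently.
latent = rng.normal(size=n_suggestions)
eight_scales = latent[:, None] + 0.3 * rng.normal(size=(n_suggestions, 8))
years = rng.normal(size=n_suggestions)
X = np.column_stack([eight_scales, years])  # shape (37, 9)

# Principal components of the 9 x 9 correlation matrix.
R = np.corrcoef(X, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(R)        # eigenvalues in ascending order
order = np.argsort(eigvals)[::-1]           # re-sort, largest first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Loadings: correlation of each original scale with each component.
loadings = eigvecs * np.sqrt(np.clip(eigvals, 0.0, None))

print("eigenvalues:", np.round(eigvals, 2))
print("loadings on the first two components:")
print(np.round(loadings[:, :2], 2))
```

In this synthetic case the first component carries the eight correlated scales and the second is dominated by the years column; the sign of each column of loadings is arbitrary.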

Discussion and Conclusions

This project was undertaken in a period of a few days. The objective was to determine whether AI could provide meaningful suggestions for compromises and for next steps, essentially to build a Singapore from Gaza. Whether these suggestions will actually find use is not the point. Rather, the challenge is to see whether artificial intelligence can become a partner in solving social problems where the mind of the person is what is important. We see from the program that we used, BimiLeap.com and its embedded AI, SCAS (Socrates as a Service), that AI can easily create suggestions and follow up these suggestions with suggested steps, as well as ideas for innovation.

These suggestions might well have come from an expert with knowledge of the situation, but in what time period, at what cost, and with what flexibility? All too often we find that the ideation process is long and tortuous. Our objective was not to repeat what an expert would do, but rather to see whether we could frame a problem, Gaza as a new Singapore, and create a variety of suggestions to spark discussion and next steps, all in a matter of a few hours.

The use of artificial intelligence to help spark ideas is only in its infancy. There is a good likelihood that over the years, as AI becomes ‘smarter’ and the language models become better, the suggestions provided by AI will be far more novel and far more interesting. Some of the suggestions here are interesting, although many are variations on the ‘pedestrian’. That reality is not discouraging but rather encouraging, because we have only just begun.

The clear result here is that, with the right questioning, AI can become a colleague spurring on creative thought. In the vision of the late Anthony Gervin Oettinger of Harvard University, propounded 60 years ago, we have the beginnings of what he called TACT, Technological Aids to Creative Thought [8]. Oettinger was talking about early machines like the EDSAC, and about programming the EDSAC to go shopping [9,10]. We can only imagine what happens when the capability shown in this paper falls into the hands of young students around the world, who can then become experts in an area in a matter of days. Perhaps solving problems, creative thinking, and even creating a better world will become fun rather than just a tantalizing dream from which one reluctantly must awake.

References

1. Kleinberg J, Ludwig J, Mullainathan S (2016) A guide to solving social problems with machine learning. In: Harvard Business Review.

2. Marr B (2019) Artificial Intelligence in Practice: How 50 Successful Companies Used AI and Machine Learning To Solve Problems. John Wiley & Sons.

3. Aradhana R, Rajagopal A, Nirmala V, Jebaduri IJ (2024) Innovating AI for humanitarian action in emergencies. Submission to: AAAI 2024 Workshop ASEA.

4. Floridi L, Cowls J, King TC, Taddeo M (2021) How to design AI for social good: Seven essential factors. Ethics, Governance, and Policies in Artificial Intelligence, Sci Eng Ethics 125-151.

5. Kim Y, Cha J (2019) Artificial Intelligence Technology and Social Problem Solving. In: Koch F, Yoshikawa A, Wang S, Terano T (eds) Evolutionary Computing and Artificial Intelligence. GEAR 2018. Communications in Computer and Information Science vol 999. Springer.

6. Kalyan KS (2023) A survey of GPT-3 family large language models including ChatGPT and GPT-4. Natural Language Processing Journal 100048.

7. Abdi H, Williams LJ (2010) Principal component analysis. Wiley Interdisciplinary Reviews: Computational Statistics 2: 433-459.

8. Bossert WH, Oettinger AG (1973) The Integration of Course Content, Technology and Institutional Setting. A Three-Year Report, Project TACT, Technological Aids to Creative Thought.

9. Cordeschi R (2007) AI turns fifty: revisiting its origins. Applied Artificial Intelligence 21: 259-279.

10. Oettinger AG (1952) CXXIV. Programming a digital computer to learn. The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science 43: 1243-1263.