Abstract

As preparation for a negotiation involving the merger of two corporations through direct purchase, an experiment was conducted to determine whether the negotiations could be enhanced by understanding how ‘uninvolved third parties’ felt about the different aspects to be negotiated. These aspects were topics such as dividing shares of the merged company, and policy toward retaining employees and assets. The research effort was to assess the operational viability of a process which required about an hour from beginning to end to provide that ‘third party’ view of the issues. Aspects of the merger were surfaced and combined into vignettes comprising 2-4 elements, and evaluated by an outside panel of respondents, unknown to the negotiating parties. The panel responses to the elements were deconstructed into the potential ability of each element to drive agreement (MERGE – YES) or disagreement (MERGE – NO). The process quickly revealed the elements on which there would probably be agreement, and elements over which there might be conflict. A segmentation of the test respondents showed two different mind-sets, uncovering types of sticking points for each mind-set.

Introduction

A great deal of the practice of the law involves negotiation and coming to an agreement. The negotiations may be left to the parties, to a professional negotiator/mediator, to the lawyers involved, and so forth. When there are opposing parties with different interests, how can negotiations be expedited, using knowledge, to reduce time and expense? Is there room for an application of the scientific method, which can provide a sense of what the parties can agree upon? Discussions with law professionals continue to suggest problems with ‘access to the law’ [1,2]. By access to the law is meant an easy, affordable, rapid way to get legal advice. Many lawyers are happy to give a free hour or so of consultation before they take on the case and request payment for their legal services. Despite this gesture, which is often welcomed by businesspeople making a deal as well as by parties seeking to sue another, the access to the law is not what it could be. The lawyer or the legal aid group must put time against the situation, understand it, and then decide whether there is a sufficient opportunity to monetize the time put against the effort. One unhappy consequence is that the ordinary small efforts are given short shrift. Sometimes the unhappy result is the oft-heard plaint ‘the only ones who made money from the situation were the lawyers.’ As denigrating as the statement might seem, it is hard to refute, especially when one tries to look at what the law provides for the small issues. The literature on negotiation, whether for business transactions or legal issues, continues to grow. The value of sensitivity in negotiation is obvious, and serves the negotiator well [3]. In fact, the importance of such sensitivity and its practical application are subjects taught in law schools and business schools [4-6], as well as in the world of medicine.
What then might be an appropriate technology or at least technique to introduce into the world of law to create a way to access the law? The approach might have to avoid taking up the time of a legal professional, because that defeats the purpose, especially when the case or situation is relatively minor. The equivalent would be to find an approach to access knowledge in a set of printed material, without having to involve a librarian or even a legal assistant. In other words, the approach would have to rely on automatic computing and analysis, which to some people borders on, or actually uses, ‘artificial intelligence.’ The idea of having technology assist in the negotiation process is not a new one. With the advent of computers and the recognition that there can be decision support systems of an electronic nature, interest has focused on the features of such a system [7-9].

The foregoing problem has been the focus of author HRM for 20 years, since 2001. The issues then, twenty years ago, were to understand how to evaluate the feelings of people presented with scenarios of a societal nature. The approach used by author HRM is called Mind Genomics [10]. Mind Genomics grew out of work beginning in 1980, trying to understand the patterns of preference of people towards foods, and the application of those patterns to the creation of commercial products [11]. The pioneering work, first with products, eventually migrated into a variety of areas, some dealing with food, others dealing with social issues [12], and finally with the law [13], and with bigger issues in society [13]. The original efforts migrated to consulting projects in the legal and business areas, with work in different places around the world. It was clear from the projects that people from different countries often had markedly different styles of negotiating, an observation supported by published studies [14]. What was also interesting was the range of different responses to the same offers, suggesting the need to treat aspects of the negotiation process in a way which respects and understands these profound individual differences.

Demonstrating the Opportunity Through a Short Case History

During a meeting with lawyers in the Albany region of New York State, the opportunity emerged to demonstrate the approach. The topic was a merger of two companies. The opportunity was to identify how the owners of two companies could find an area of agreement. Separately from the private meeting with their lawyers negotiating the merger, the parties agreed to discuss the issues with author HRM, in an informal manner, and strictly for purposes of science. From the short, 10-minute background discussion, it became possible to ‘create’ a matrix of different issues, elaborating on the topics raised. At this point, the participants in the merger, here presented as Charles and Rebecca, respectively, returned to the meeting, after having given HRM permission to do a small demonstration ‘experiment’ using the material surfaced in the meeting. The relevant information was disguised where necessary.

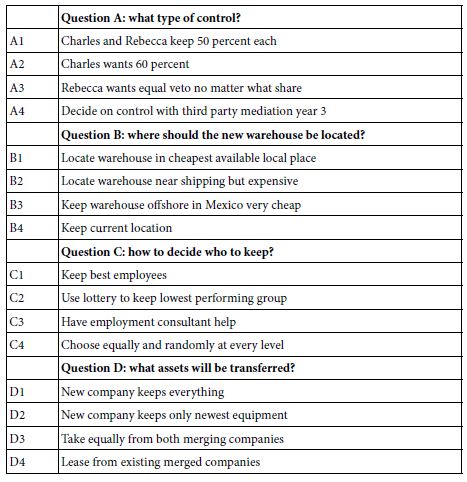

Table 1 shows the set of four questions emerging from the discussion. The questions pertain to the topics of the merger. The 16 answers or elements present alternatives raised in the discussion, as well as several added by HRM afterward, based on the discussion, but not directly raised. The Mind Genomics process provides a template by which the researcher can quickly record the topic, the four questions, and the 16 answers, as shown in Table 1.

Table 1: The four questions and the four answers to each question, for the project pertaining to the merger of two companies.

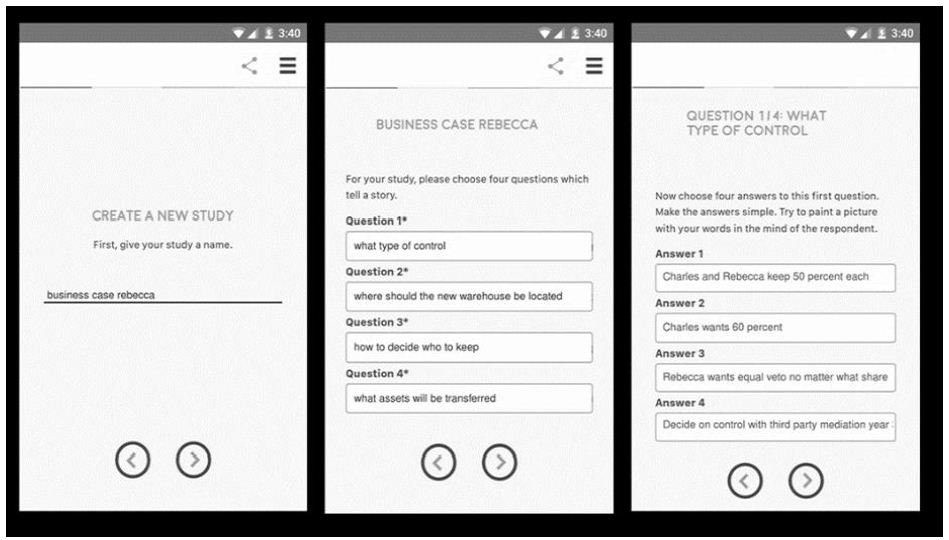

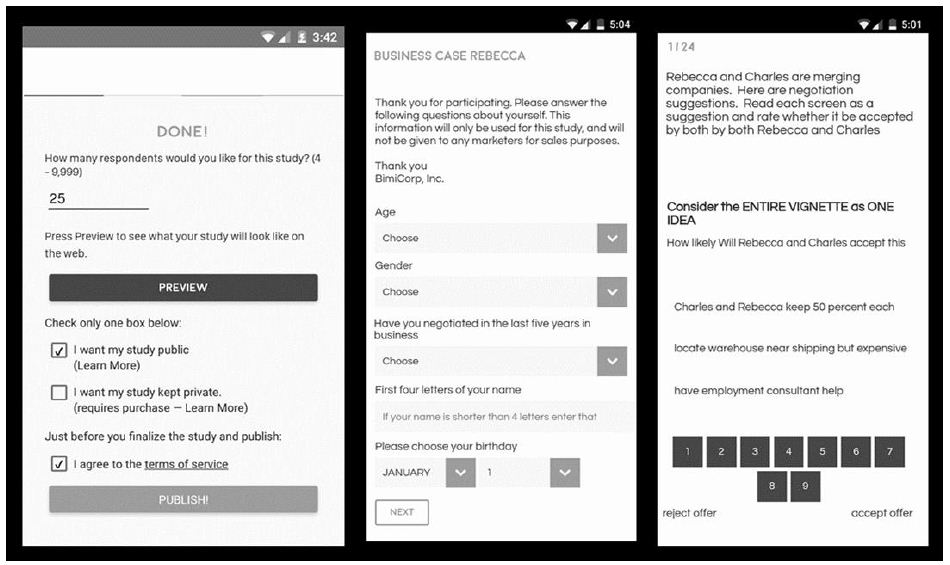

Figure 1 shows three panels, each a screenshot from the actual project. The left panel shows the selection of the project name (business case Rebecca). The middle panel shows the four questions. The right panel shows the four answers to Question 1. The Mind Genomics program follows this right-hand panel with three additional panels (not shown), allowing for the remaining three sets of four answers each. It is important to note that the project can be set up ‘live,’ viz., in real time. The www.BimiLeap.com website is set up to guide and structure the thought processes, making the approach feasible in the middle of the meeting to gain quick feedback. The website can be freely accessed and easily used. Figure 1, as well as Figures 2 and 3, show the set-up of the experiment, requiring about 15-20 minutes at most.

Figure 1: The set-up screens for the Mind Genomics project showing the selection of the name, the list of four questions, and the set of four answers to the first question.

Figure 2: Screenshots showing communication to the respondent, including the third self-classification question (left panel), the rating scale and anchors (middle panel), and the short orientation which introduces the topic to the respondent (right panel).

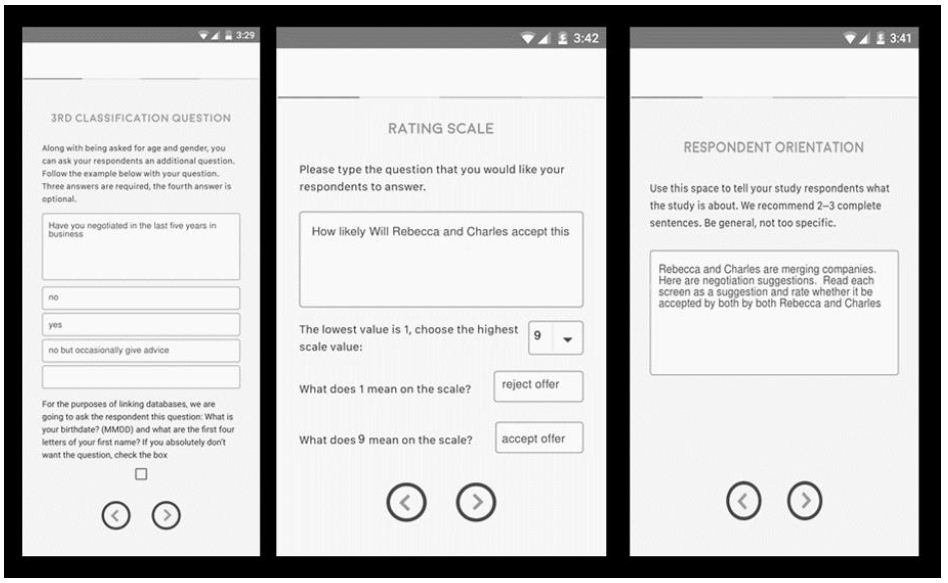

Figure 3: The final user screen (left), and the two respondent screens (middle, self-profiling classification; right, sample vignette to be rated).

The next set of screens, shown in Figure 2, instructs the user first to add a question pertaining to the respondent (left panel), then the rating scale, and finally the orientation to the study that the respondent will read. The Mind Genomics project is entirely private, so that the respondent is only identifiable by age, gender, and the answer to the third question.

Have you negotiated in the last five years in business?

1=no 2=yes 3=no but occasionally give advice 4=Not applicable

The rating scale provides the opportunity for the respondent to voice her or his opinion about the merger (whether or not the offer for merger will be rejected (rating = 1) or accepted (rating = 9)). This scale is called the Likert Scale, showing the magnitude of feeling. More recent practice has been to use a shorter 5-point scale. The scale is anchored at both ends, serving as a tool to show the respondent’s opinion AFTER the respondent has read the vignette, the test stimulus, described below.

Low Anchor: Rating question 1=reject offer

High Anchor: Rating question 9=accept offer

The orientation provides the user with a way to tell the respondent about the project. Creating the orientation is easy, but the user should be sure to provide as little specific information as possible. It will be the vignettes, small combinations of 2-4 messages which will provide the necessary ‘real’ information about the communications pertaining to the proposed merger.

Rebecca and Charles are merging companies. Here are negotiation suggestions. Read each screen as a suggestion and rate whether it be accepted by both Rebecca and Charles.

Figure 3 shows the final screen of the user’s set-up experience (left panel), and then the respondent’s experience (middle and right panels, respectively). The user is given a set of options, to declare the study a business study or an academic study, to define the number of respondents to participate, and then to define the sourcing of the respondents. The study here involved the selection of 25 respondents, a sufficient and affordable number of respondents to provide necessary information about the ‘case.’ The user specified recruiting from the preferred provider (Luc.id, Inc.), and did not choose any specifics about the respondent. The final step is either to review and edit, or to ‘launch’ the study, and pay with a credit card.

The respondents are selected by country, and by other criteria available through the Luc.id system of literally hundreds of user-specified criteria. The respondents are sent notifications, and within minutes, many of the respondents from the ‘blast email’ participate. The respondent goes through two major steps for this study. The first step is completion of a self-profiling questionnaire (middle panel), which asks for gender, age, and response to the classification question shown in Figure 2 (left panel). The second step is the evaluation of 24 vignettes, set up like the vignette shown in Figure 3 (right panel).

The right panel of Figure 3 shows all of the information that the respondent needs to decide, but using information presented in an unusual way. Every one of the 24 vignettes that the respondent will see is set up the same way:

a. Orientation about the topic

b. Reminder to consider the entire vignette (viz., all the elements) as one idea

c. The rating question/scale

d. Three elements put together seemingly ‘at random,’ left justified

e. The response scale and the anchors

The vignette itself comprises 2-4 elements. The combination may look random, but it is set up according to a strict structure called an experimental design [15]. Each of the 24 screens has a defined number of elements, and a defined listing of the specific elements to be incorporated. As a consequence, each of the 16 elements appears exactly five times, and is absent from 19 vignettes. Each question or grouping of four elements is allowed to contribute either one or no elements, but never two or more elements. This design feature means that for bookkeeping purposes, one should put into the same question two, three or four elements which are mutually contradictory. Finally, the 16 elements are statistically independent of each other, allowing for regression analysis. The novel part of the design is that, by the correct permutation, each respondent can evaluate the same ‘structure’ of vignettes, but the combinations are different. One can liken this metaphorically to the MRI, magnetic resonance imaging, which takes many pictures of the same tissue, all from different angles, and during the processing phase recombines them to arrive at a single in-depth image. With 25 respondents, each viewing 24 DIFFERENT combinations, we end with 600 pictures of the topic, and the associated rating of each vignette or ‘picture.’

The study was launched approximately 20 minutes from the start, although the novice may require at first 30-40 minutes to set up, and then launch. The actual data collection and basic, automated analysis required 30 minutes. The results were ready for discussion approximately 60 minutes from the start, and available in printed form (easy-to-read Excel booklet). The speed and cost of the process are worth emphasizing before we look at the data. If nothing else, the process actually helped the merger negotiation by surfacing issues and the responses to the issues.
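The counting rules of the design described above (24 vignettes, 2-4 elements each, at most one element per question, every element appearing five times) can be illustrated with a short Python sketch. The toy design below is an assumption made purely for illustration: it satisfies the counts but is not the actual Mind Genomics design, and it makes no attempt to achieve the statistical independence of the elements. The function names are hypothetical. The `permute` step relabels the answers within each question, which is one simple way to give each respondent different combinations with the same underlying structure.

```python
import random

N_QUESTIONS, N_ANSWERS, N_VIGNETTES = 4, 4, 24

def toy_design():
    """24 vignettes; each holds one slot per question: an answer index 0-3, or None."""
    design = [[None] * N_QUESTIONS for _ in range(N_VIGNETTES)]
    for q in range(N_QUESTIONS):
        absent = set(range(4 * q, 4 * q + 4))  # question q is left out of 4 vignettes
        present = [v for v in range(N_VIGNETTES) if v not in absent]
        for i, v in enumerate(present):
            design[v][q] = i % N_ANSWERS  # 20 slots, so each answer is used 5 times
    return design

def satisfies_counts(design):
    """True if every vignette has 2-4 elements and every element appears 5 times."""
    counts = {}
    for row in design:
        size = sum(slot is not None for slot in row)
        if not 2 <= size <= 4:
            return False
        for q, a in enumerate(row):
            if a is not None:
                counts[(q, a)] = counts.get((q, a), 0) + 1
    return (len(counts) == N_QUESTIONS * N_ANSWERS
            and all(n == 5 for n in counts.values()))

def permute(design, rng):
    """Relabel the answers within each question; the structure is unchanged."""
    out = [row[:] for row in design]
    for q in range(N_QUESTIONS):
        relabel = list(range(N_ANSWERS))
        rng.shuffle(relabel)
        for row in out:
            if row[q] is not None:
                row[q] = relabel[row[q]]
    return out

base = toy_design()
respondent_designs = [permute(base, random.Random(seed)) for seed in range(25)]
total_vignettes = sum(len(d) for d in respondent_designs)  # 25 respondents x 24
```

Because each slot holds at most one answer per question, the ‘one or no elements per question’ rule is enforced by the data structure itself rather than by a separate check.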

The First Experience with the Data – Average Ratings by Total and by Key Subgroups

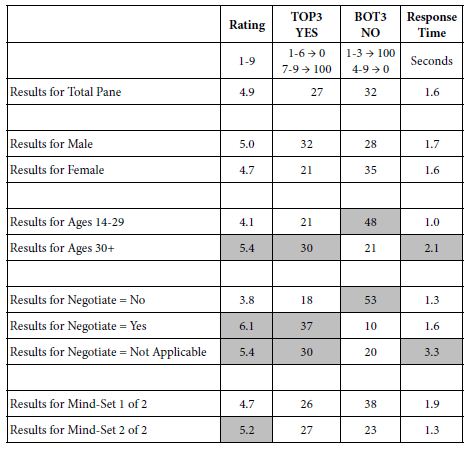

Mind Genomics experiments generate a great deal of information, much of it usable. Our first analysis looks at the averages. We will look at complementary groups, shown in Table 2. The averages are computed on four measures:

a. Rating = average of the 1-9 rating (1=merger offer not accepted .. 9=merger offer accepted)

b. TOP3 = a new binary variable, showing either strong acceptance (ratings 7-9) or all else (1-6)

c. BOT3 = a new binary variable, showing either strong rejection (ratings 1-3) or all else (4-9)

d. Response time in seconds = The Mind Genomics program measured the time from the presentation of the test vignette to the time when the respondent rated the vignette.

Table 2: Average values for ratings, binary variables and response times for Total Panel and key subgroups.

The authors’ experiences in a variety of studies suggest most response times of approximately 1.5-4.5 seconds for a vignette. Typically, response times of 8 or more seconds suggest that the respondent was multi-tasking. These longer response times (about 1/5 of the data) were simply eliminated from all analyses, but the remainder of the data from the respondent was kept.
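The four measures can be sketched in a few lines of Python. This is a minimal illustration, not the program's own code. Two points are assumptions: the binary variables are coded 0/100 so that their averages read as percentages, and an observation with a response time of 8 seconds or more is dropped entirely (one reading of the exclusion rule above). The sample data and function names are hypothetical.

```python
def recode(rating):
    """Return (TOP3, BOT3) for a 1-9 rating, coded 0/100 (an assumed scaling)."""
    return (100 if rating >= 7 else 0, 100 if rating <= 3 else 0)

def summarize(observations, max_seconds=8.0):
    """observations: list of (rating, response_time_in_seconds) pairs."""
    # Drop observations whose response time is 8 seconds or more
    kept = [(r, t) for r, t in observations if t < max_seconds]
    n = len(kept)
    return {
        "rating": sum(r for r, _ in kept) / n,
        "top3": sum(recode(r)[0] for r, _ in kept) / n,
        "bot3": sum(recode(r)[1] for r, _ in kept) / n,
        "response_time": sum(t for _, t in kept) / n,
        "n_kept": n,
    }

# Hypothetical ratings and response times for five vignettes
sample = [(8, 1.2), (2, 0.9), (5, 2.4), (9, 12.0), (3, 1.5)]
summary = summarize(sample)
```

In this sample the 12-second observation is excluded, so the averages are based on the remaining four vignettes.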

Based upon Table 2 we see a simple story emerging when we look at the data from the total panel.

a. An average rating of 4.9 on the anchored 9-point scale, suggesting neither MERGER-YES (higher averages) nor MERGER-NO (lower averages). This may be due to most of the ratings clustering in the middle, or to the decisions being about equally divided between TOP3 (YES to the merger) and BOT3 (NO to the merger). Table 2 shows that the responses are divided about equally among YES (27%), NO (32%) and the rest MAYBE (100% – 27% – 32% = 41%).

b. The response time is short, about 1.6 seconds. The information in this merger is not difficult to comprehend and does not require much thinking. The information appears to be more emotionally driven than fact driven.

The self-profiling questionnaire allows us to identify respondents by gender, by age group, and by involvement in negotiations. Further analysis to uncover mind-sets (groups of people who think alike) reveals two mind-sets. These two mind-sets will be further explicated below. For the current analyses, it suffices to measure the average ratings for each of these defined groups. Table 2 suggests some differences, such as the fact that the younger respondents (ages 14-29) are far more negative about the prospects for the merger (BOT3 = 48, almost beginning with a negative attitude), and read the vignettes on average twice as quickly as do the older respondents (1.0 vs 2.1 seconds). For those with experience in negotiating, the average is overwhelmingly positive, and the time to read the vignettes is shorter. The two mind-sets differ from each other and are explicated below in depth.

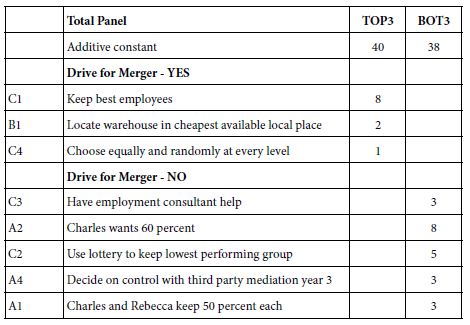

Table 3: How the elements drive a third-party group (respondents) to feel whether there will be a merger (TOP3) or there won’t be a merger (BOT3).

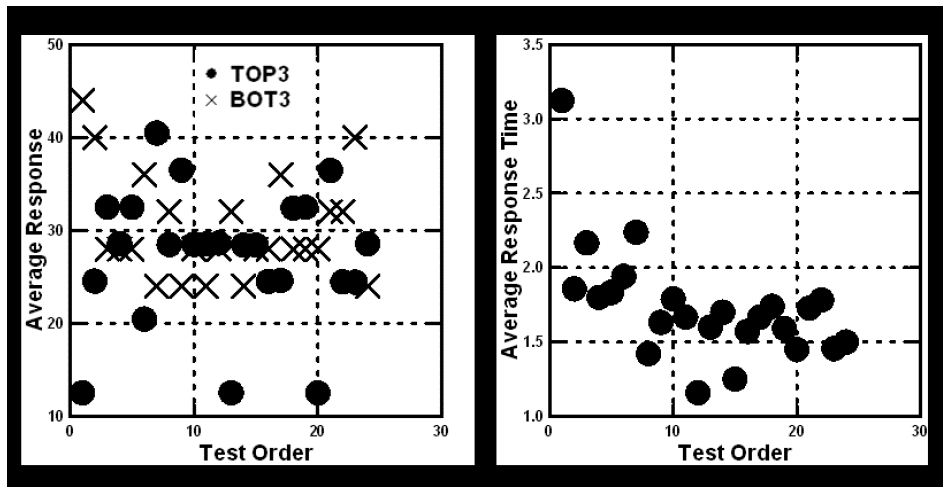

Beyond Averages to the Stability/Instability of the Averages across the 24 Vignettes

A continuing issue in attitude research concerns how stable the responses are over time, especially when the respondent is evaluating many test stimuli. Practitioners have discovered the so-called ‘tried first bias’ [16], which means that the stimulus evaluated first may score aberrantly higher or lower than it would score when tried in the middle of a set of similar stimuli. This bias, sufficient to affect the validity of the data, has led to different ‘best practices’ such as testing only one stimulus per person (so-called pure monadic), or evaluating many products and rotating the order of the products to minimize the ‘tried first bias.’ The Mind Genomics system ensures that the respondents each evaluate a different set of vignettes, so that no particular vignette is systematically evaluated first. Yet, there is always the possibility that whichever vignette is evaluated first is rated with bias, even though we cannot measure the effect of that bias due to the different combinations. Figure 4 shows two panels. The left panel shows the average TOP3 (merger = YES), and average BOT3 (merger = NO). The right panel shows the average response time. The graph shows the change in the averages across the 24 positions. Figure 4 does not suggest a systematic bias in the ratings for TOP3 or BOT3, although one might make a case for the variation in averages being greatest at the start of the evaluation. The effect of repeated evaluations is far clearer when the dependent variable is average response time. Over time the response times become shorter, presumably because at some point the respondent both knows what to do and responds more quickly when recognizing those elements which are important. It might be an interesting study to compare different sets of messages around the same topic of mergers, to see whether the pattern of decrease of response time with experience is affected by the type of message.

Figure 4: The change in the average responses (TOP3 – merge; BOT3 – no merge; Response time) as a function of position in the 24 vignettes evaluated by the respondent.
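The position analysis summarized in Figure 4 amounts to averaging a measure across respondents at each of the 24 test positions. A minimal Python sketch of that grouping follows. The records are hypothetical and the function name is an assumption, not part of the Mind Genomics program.

```python
from collections import defaultdict

def mean_by_position(records):
    """records: iterable of (position, value); returns {position: mean value}."""
    sums = defaultdict(lambda: [0.0, 0])  # position -> [running total, count]
    for position, value in records:
        sums[position][0] += value
        sums[position][1] += 1
    return {pos: total / n for pos, (total, n) in sorted(sums.items())}

# Hypothetical response times (seconds) from two respondents at positions 1 and 2
records = [(1, 3.0), (1, 2.0), (2, 1.5), (2, 1.0)]
averages = mean_by_position(records)
```

The same function can be applied to TOP3 or BOT3 values in place of response times.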

Linking Elements to Response to Determine ‘What Messages’ Work

The most important aspect of the Mind Genomics effort is the ability to link together the elements and the responses, and by so doing discover what elements might be driving the response. The benefit of the Mind Genomics design is the cognitive richness of the test stimuli. Up to now we have simply looked at the pattern and surmised what might be happening. We had to be content with discovering that there are regularities in the data, such as the drop in the response time with increasing experience, or the difference in the average rating by key subgroup. For practical applications, such as study of the efficacy of messages, we must move beyond general patterns of responses, and into the specific elements themselves. The strategy of combining the messages by underlying experimental design ensures that the combinations have some semblance of reality, and that the respondent cannot ‘game the system.’ The elements are combined in a way which precludes the respondent from changing the criterion of judgment. Such change of criterion may occur when the messages, the elements, are presented one at a time. The respondent might well adopt one criterion when the issue is division of ownership, and another criterion when the issue is which employees and assets to retain. By combining the elements into vignettes, Mind Genomics makes it virtually impossible for the respondent to adjust the judgment criterion. As explicated above, each respondent evaluated a unique set of 24 vignettes, with the elements statistically independent of each other [17]. The underlying experimental design makes it feasible to use OLS (ordinary least-squares) regression to relate the presence/absence of the 16 elements to the newly created binary dependent variables, TOP3 and BOT3, respectively, as well as to Response Time. The equation deconstructs the newly created binary variables into the part-worth contribution of each element, as well as a baseline value, the additive constant.

The equation is written as: Dependent Variable = k0 + k1(A1) + k2(A2) + ... + k16(D4)

The additive constant, also called the intercept, shows the expected value of the dependent variable (e.g., TOP3) when all 16 elements are absent. Of course, the experimental design ensures that every vignette comprises a minimum of two and a maximum of four elements, at most one element from each question. Thus, the additive constant is strictly theoretical, but does provide a sense of the baseline. Table 3 shows that the additive constant for TOP3 is 40, and the additive constant for BOT3 is 38. We conclude from this that the basic likelihood is about equal for votes for (TOP3) versus against (BOT3) the merger. It is in the coefficients where matters become interesting and informative. A positive coefficient means that including the element in a vignette will increase the vote, either for the merger (TOP3) or against the merger (BOT3). A negative or a 0 coefficient means that including the element in a vignette will not increase the vote, either for the merger or against the merger. In the interest of making the study simple to report, and patterns easy to spot, it has become customary in Mind Genomics studies to report only the positive coefficients, and to highlight the strong positive coefficients, viz., those around 8 or higher. The negative and 0 coefficients do not tell us much. For TOP3 they tell us the strength of failure to push for TOP3. When we are really interested in the elements which actively drive away agreement (Merger – NO), we are better served by looking at the coefficients for BOT3. Positive elements for BOT3 are those which actively drive away agreement. Table 3 presents the positive coefficients for TOP3 and for BOT3, respectively. When an element fails to have a positive coefficient for either TOP3 or BOT3 the element does not appear. In this way it becomes easier to see the patterns. The data suggest that there is an equal proclivity for Merger and No Merger.
The elements which push for a merger are those about the way the merger will combine the companies. The elements which push away from a merger are those about ownership. It becomes clear that control is a major issue, as perceived by an outside group of people evaluating the different propositions for merger. The data do not mean that these are the actual issues that will be discussed, but rather that they are perceived to be potentially contentious.
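The regression step can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not the authors' analysis code. The vignettes are drawn at random rather than from the permuted experimental design. The response is a synthetic, noise-free value built from a hypothetical constant of 40 and three hypothetical part-worths, so that the fit can be seen to recover them. The helper names are invented, and in practice a statistics package would replace the hand-written solver.

```python
import random

QUESTIONS = "ABCD"
ELEMENTS = [q + str(a) for q in QUESTIONS for a in range(1, 5)]  # A1 .. D4

def random_vignette(rng):
    """At most one element per question; keep only vignettes with 2-4 elements."""
    while True:
        chosen = [q + str(rng.randint(1, 4)) for q in QUESTIONS if rng.random() > 1 / 6]
        if len(chosen) >= 2:
            return chosen

def dummy_row(vignette):
    """Intercept term followed by 16 presence/absence (1/0) indicators."""
    return [1.0] + [1.0 if e in vignette else 0.0 for e in ELEMENTS]

def ols(rows, y):
    """Solve the normal equations (X'X)k = X'y by Gauss-Jordan elimination."""
    p = len(rows[0])
    # Augmented matrix [X'X | X'y]
    m = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(m[k][col]))  # partial pivoting
        m[col], m[pivot] = m[pivot], m[col]
        for k in range(p):
            if k != col:
                f = m[k][col] / m[col][col]
                m[k] = [a - f * b for a, b in zip(m[k], m[col])]
    return [m[i][p] / m[i][i] for i in range(p)]

rng = random.Random(0)
true_k = {"A1": 8.0, "B2": -5.0, "D4": 12.0}  # hypothetical part-worths
vignettes = [random_vignette(rng) for _ in range(600)]
rows = [dummy_row(v) for v in vignettes]
y = [40.0 + sum(true_k.get(e, 0.0) for e in v) for v in vignettes]  # synthetic response

estimates = ols(rows, y)
constant = estimates[0]
coefficients = dict(zip(ELEMENTS, estimates[1:]))
strong = {e: k for e, k in coefficients.items() if k >= 8}  # 'around 8 or higher'
```

The fit is only possible because some vignettes omit a question. If every vignette contained exactly one element from each question, the four indicators of a question would always sum to 1, and the additive constant could not be separated from the element coefficients.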

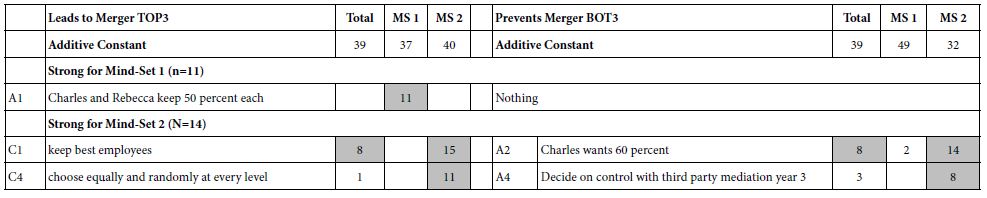

Mind-Sets and Negotiations

If we were to stop at the results in Table 3, the effort to understand the ‘sticking points’ of the merger would have emerged, in a matter of 30 minutes, from a small group of 25 respondents acting as ‘consultants,’ albeit unknowingly since their job was to evaluate the likely outcome of a set of discussion points. We could stop here and have our job more or less complete. Yet, there is more to be learned. That ‘more’ is the discovery of different ways of looking at the same information and arriving at different decisions. These different ways are called mind-sets. Mind-sets emerging from segmentation have been a hallmark of marketing for decades [18], and are now entering, or even better carving out, new areas of the practice of business and law. Even with as few as 25 respondents it is possible to discover meaningful mind-sets. The researcher creates individual-level models, two per respondent, one for TOP3 vs elements, and the other for BOT3 vs elements. These individual-level models do not have an additive constant. The database comprises 25 rows (one per respondent), with 32 columns (16 for TOP3; 16 for BOT3). The numbers in the body of the data matrix are coefficients. The researcher clusters the respondents into two groups, based upon the pattern of the coefficients. Within each cluster or mind-set are respondents whose patterns of 32 coefficients are similar to each other, and dissimilar to the patterns of the 32 coefficients generated by respondents in the other cluster or mind-set [19]. After all is done, Table 4 reveals two clear mind-sets. Both mind-sets are about equal in their desire for a merger, with additive constants of 37 and 40. It is the elements which are important. Mind-Set 1 wants equity in the division. Nothing really turns off Mind-Set 1, viz., there is nothing driving BOT3. In contrast, Mind-Set 2 wants a rational merger, and is turned off by either unequal division of stock, or loss of control.
The important thing about Table 4 is a sense of the fine-grained needs of the different mind-sets, setting the agenda about what to discuss, and what to ‘take off the table.’
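The clustering step can be sketched as a plain k-means routine in Python. Several choices here are assumptions for illustration only: the distance is Euclidean, the starting centroids are simply the first two respondents, and each respondent has four made-up coefficients rather than the 32 described above. The study's own clustering settings are not stated in this section.

```python
def kmeans(points, k=2, iterations=20):
    """Assign each point (a list of coefficients) to one of k clusters."""
    centroids = [list(p) for p in points[:k]]  # naive start: the first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid by squared Euclidean distance
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update step: each centroid becomes the mean of its members
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

# Hypothetical coefficient rows, one per respondent
respondents = [
    [10, 8, -2, 0],
    [-3, 0, 9, 11],
    [12, 7, 0, -1],
    [0, -2, 10, 9],
]
mind_sets = kmeans(respondents, k=2)
```

With these made-up rows, the first and third respondents fall into one cluster and the second and fourth into the other, mirroring the idea of two mind-sets with different patterns of coefficients.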

Table 4: Strong performing elements for the two emergent mind-sets, created from the combination of the 16 TOP3 coefficients and the 16 BOT3 coefficients.

A Shortened Process, Which is Both Inclusive (Participatory) and Objective (Data Centric)

The analysis above suggests a rich database can be developed quickly, viz., in less than 60 minutes, from start to analysis. The nature of the Mind Genomics approach forces the user into a disciplined presentation of the ‘case.’ Some may consider the speed and the concomitant ‘structuralizing’ of the process as a negative, viz., that those involved may be forced to study the topic without having a chance to think deeply about the topic. This criticism is absolutely correct. The spirit of the Mind Genomics process is founded on the alluring combination of structure, speed, and depth. The very design, as a computer-based app, with almost automatic front-to-back effort, and an automated basic analysis, prevents deep thinking, at least at the time of the evaluation. The focus is on pulling out the salient ideas, putting them into a template, involving a third party as judges in a way which prevents judgment biases, and then returning with structured data. What might be the way such a system could be used in the world of the everyday? The first use is a subtle one. The structure forces the people involved to think about alternatives or options facing them. The user must contribute the question and the four elements for each question. The thinking, therefore, is not to support one’s position, but rather to focus on the different aspects of the topic. Ongoing work with Mind Genomics suggests that simply requiring the participants to offer ideas in a structured manner improves their thinking. There is a second benefit as well. That benefit is the ability to identify what specifics work, and whether there exist hitherto unknown or only suspected mind-sets of individuals having different points of view [20]. Such information is important to the people involved in the case because it demonstrates the very real possibility that there are different ways to approach the same topic. The disagreements between people become more explainable.
Even more promising, however, is the possibility of finding ideas which are very acceptable to one mind-set and to another, or at least ideas which are acceptable to one mind-set, and do not turn off the other. There is a third benefit, perhaps the most important. That benefit is improved access to the law, something being regularly recognized as a major need [21-25]. With an opposing party threatening to sue, or at least to damage by driving up legal fees, there is a need for rapid, inexpensive DIY (do it yourself) methods. It is quite possible that Mind Genomics might be one of those methods, a simple DIY system, executed collaboratively by the different groups involved in the negotiation, leading to speedier, more fruitful and less expensive negotiations, filled with mutual understanding, and ultimately far more productive.

References

- Frandino J (2018) (Personal communication).

- Moskowitz H, Wren J, Papajorgji P (2020) Mind Genomics and the Law. LAP Lambert Academic Publishing.

- Arunachalam V, Dilla WN (1995) Judgment accuracy and outcomes in negotiation: A causal modeling analysis of decision-aiding effects. Organizational Behavior and Human Decision Processes 61: 289-304.

- Chetkow-Yanoov B (1996) Conflict-resolution skills can be taught. Peabody Journal of Education. 71: 12-28.

- Gettinger J, Koeszegi ST, Schoop M (2012) Shall we dance?—The effect of information presentations on negotiation processes and outcomes. Decision Support Systems 53: 161-174.

- Nadai E, Maeder C (2008) Negotiations at all points? Interaction and organization. In Forum Qualitative Research (online), 9: 1-19.

- Julian V, Sanchez-Anguix V, Heras S, Carrascosa C (2020) Agreement Technologies for Conflict Resolution. In Natural Language Processing: Concepts, Methodologies, Tools, and Applications pg: 464-484. IGI Global.

- Kersten GE, Lo G (2003) Aspire: an integrated negotiation support system and software agents for e-business negotiation. International Journal of Internet and Enterprise Management. 1: 293-315.

- Neijens P, Swaab R, Postmes T (2004) Negotiation support systems: communication and information as antecedents of negotiation settlement. International Negotiation 9: 59-78.

- Moskowitz HR, Gofman A, Beckley J, Ashman H (2006) Founding a new science: Mind genomics. Journal of Sensory Studies 21: 266-307.

- Gladwell M (2004) Choice, happiness and spaghetti sauce.

- Moskowitz HR, Gofman A (2007) Selling Blue Elephants: How to Make Great Products that People Want Before They Even Know They Want them. Pearson Education.

- Kover A, Moskowitz H, Papajorgji P (2020) Applying Mind Genomics to Social Sciences, Book submitted, In Review.

- Gabrielidis C, Stephan WG, Ybarra O, Dos Santos Pearson VM, Villareal L (1997) Preferred styles of conflict resolution: Mexico and the United States. Journal of Cross-Cultural Psychology 28: 661-677.

- Ryan TP, Morgan JP (2007) Modern experimental design. Journal of Statistical Theory and Practice 1: 501-506.

- Malhotra N (2008) Completion time and response order effects in web surveys. Public Opinion Quarterly 72: 914-934.

- Gofman A, Moskowitz H (2010) Isomorphic permuted experimental designs and their application in conjoint analysis. Journal of Sensory Studies 25: 127-145.

- Wedel M, Kamakura WA (2012) Market segmentation: Conceptual and methodological foundations (Vol. 8). Springer Science & Business Media.

- Likas A, Vlassis N, Verbeek JJ (2003) The global k-means clustering algorithm. Pattern Recognition 36: 451-461.

- Sternberg RJ, Soriano LJ (1984). Styles of conflict resolution. Journal of Personality and Social Psychology 47: 115-126.

- Howard D (2001) The Law School Consortium Project: Law Schools Supporting Graduates to Increase Access to Justice for Low and Moderate-Income Individuals and Communities. Fordham Urban Law Journal 29: 1245.

- Nader L (1980) No Access to Law: Alternatives to the American Judicial System (pp. 64-67). New York: Academic Press.

- Trebilcock M, Duggan A, Sossin L (2018) Middle Income Access to Justice. University of Toronto Press.

- Ware SJ (2012) Is adjudication a public good: Overcrowded courts and the private sector alternative of arbitration. Cardozo Journal of Conflict Resolution 14.

- Wren J (2021) (Personal communication).