Abstract

Background: As a result of the COVID-19 pandemic, face masks are routinely worn around the world. Masks could hamper the recognition of (basic) emotions and lead to misunderstandings. People with chronic pain typically struggle with verbal and non-verbal communication, and their behavior is frequently interpreted differently by those around them. This paper examines differences in emotion recognition (accuracy and speed) between individuals with chronic pain and asymptomatic subjects.

Methods: Four validated measures (Central Sensitization Inventory (CSI), Graded Chronic Pain Scale (GCPS), Brief Pain Inventory (BPI), and Toronto Alexithymia Scale (TAS-20)) were used to differentiate between the asymptomatic control group (CG) and the chronic pain group (PG). In addition, a computerized emotion recognition test (ERT) comprising 42 morphed images of six basic emotions, presented with and without lower face covering, was used to measure recognition accuracy and response time.

Results: Of the 170 participants recruited and analyzed, 98 had chronic pain (PG). There was a statistically significant difference (p<0.01) between the CG and the PG in the recognition of all basic emotions, with and without lower face covering. In both groups, incorrect answers were most frequent for fear (57.1% to 66.3%) and disgust (67.1% to 69.1%). In the PG, sadness and disgust were especially hard to identify when the lower face was concealed (p<0.0001). There was no statistically significant difference in response time between the CG and the PG with or without facial covering (p=0.34, p=0.4).

Conclusion: People with chronic pain have more difficulty with emotion recognition, with and without lower face covering, but do not differ in response time. In the PG, recognition of emotions, especially sadness and disgust, is significantly diminished when the lower face is covered; disgust was frequently confused with anger, and fear with astonishment.

Significance: As shown in this paper, tabulating the emotions presented by a standardized ERT against the emotions perceived by the participants (a confusion matrix) may provide a clear overview of correct and incorrect answers, both within and between the two groups.

Introduction

During facial emotion recognition, a reflexive ocular scan of the face occurs, allowing the emotion to be interpreted by detecting the activity of the underlying muscles [1,2]. In this way, observers direct their attention to the components that are critical for distinguishing facial emotions [3]. According to Guo [4], the eye region, the nose, and the mouth are frequently inspected to differentiate emotional expressions. Other studies indicate that each basic emotion is associated with a set of facial features; for example, fear is associated primarily with changes at the eye level, which facilitate its identification. Joy, by comparison, is associated primarily with movement of the lips, although the brows, cheeks, and lower eyelid tension also contribute to its detection [5]. The lower face appears to be strongly associated with feelings of satisfaction and attractiveness [6]. For instance, facial regions such as the nasolabial fold, the oral commissure, and the upper lip vermilion are reported to be critical for identifying moods such as satisfaction [7]. According to some studies, the left side of the face exhibits pleasant but contrived facial expressions, whereas the right side exhibits genuine feelings [8]. The lower half of the face conveys pleasant and joyful emotions, while the upper half conveys surprise and anxiety. Spontaneous facial expressions are more prevalent along the upper-lower facial axis than along the left-right axis. This may account for the individual search patterns used in emotion identification, which are not fixed but vary with the task, the location of the face, and face coverage [9].

The COVID-19 pandemic has led to the widespread use of face masks around the world. While face masks help prevent infection, there are concerns about their influence on facial recognition, expression, and hence social communication [10]. According to the most widely accepted theory of face perception, emotional expression recognition and facial identity are distinct perceptual processes encoded by distinct psychological [11] and neural mechanisms [12,13]. With respect to emotion identification and expression, multiple studies have examined the quantity and kind of social information provided by various parts of the face and concluded that the mouth plays a critical role in understanding emotions, particularly basic emotions such as happiness and disgust [14,15]. Indeed, the mouth is a vital component of human face recognition: it is almost symmetrical and typically visible from any angle, which makes it the ideal feature to focus on whenever a face may be viewed from different angles. According to a recent study, mouth-based emotion identification does not differ from full-face emotion recognition and greatly supports the recognition of subtle emotions in general [16]. It therefore seems likely that covering the lower half of the face with a face mask impairs emotion perception. Although the loss of facial signals can be compensated for by more expressive gestures and by cognitive and coping strategies, covering the lower half of the face may cause greater difficulty for individuals with impaired compensatory abilities, such as people with deafness, congenital prosopagnosia, or autism.

Little is known about how individuals with chronic pain cope with emotion recognition when faces are covered by masks. Chronic pain is defined as pain that persists or recurs for more than three months [17,18]. It is estimated to affect 20% of the population and imposes a massive cost on both individuals and the healthcare system (Goldberg et al. 2011). Current models of chronic pain describe a complex interaction of sensory, environmental, psychological, and pain regulation risk factors that contribute to an individual's vulnerability to pain [19] and may lead to the maintenance of chronic pain (Koechlin et al. 2017). Chronic pain may also be associated with alexithymia, which may be one characteristic of emotional dysregulation in chronic pain [20] and is characterized by (i) difficulty identifying emotions, (ii) difficulty describing emotions, and (iii) externally oriented thinking [21]. Alexithymia has been identified in a variety of chronic pain conditions, including low back pain (LBP), chronic facial pain and temporomandibular disorders (TMD), fibromyalgia, chronic migraine, irritable bowel syndrome, and complex regional pain syndrome (CRPS), among others [22]. Alexithymia is often associated with depression or depressive feelings (Saariaho et al. 2017). It is hypothesized that long-term peripheral nociception alters brainstem and central nervous system circuits, resulting in the spread of pain and in perceptual abnormalities of the body schema [23]. This distortion of somatorepresentation may perturb the somatosensory-motor system [24] and is strongly associated with an inability to recognize refined (facial) motor patterns. For instance, in experimental research on chronic low back pain (CLBP) [25], TMD, and chronic facial pain, the accuracy and speed of basic emotion identification are reduced, and emotions with asymmetrical expressions, such as disgust and fear, are confused with other emotions [26].
These findings raise the question of whether wearing a surgical mask, or otherwise covering the lower face, affects the accuracy and speed of basic emotion recognition more in people with chronic pain than in people without pain.

On this basis, the primary aim of our study was to examine whether emotion recognition (accuracy and time) differs between asymptomatic subjects and people with chronic pain when the lower face is covered, as when wearing a surgical mask. We hypothesized that people with chronic pain would perform worse at emotion recognition than asymptomatic subjects in all conditions.

Materials and Methods

Participants and Sample Size

A sample size of 160 participants was calculated a priori by power analysis [27] for a repeated measures analysis of variance (ANOVA) with six groups (emotions) and four measurements (mask vs. no mask in the control and pain groups), to detect a medium effect size of f=0.25 with α=0.05 and power (1-β)=0.80. Because more participants were recruited than required, a post hoc power calculation was performed, which showed that a power of 0.88 was reached.
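The calculation above was made with G*Power [27]. To make the logic visible, the sketch below estimates F-test power by Monte Carlo simulation instead of G*Power's exact noncentral-F computation. The mapping to the study design is an assumption: the helper `lambda_rm_between` (a hypothetical name) uses a repeated-measures "between factors" noncentrality λ = f²·u·N with u = m/(1+(m−1)ρ), and ρ = 0.5 is an assumed correlation among repeated measures that the text does not report. The sketch is therefore not expected to reproduce 160 or 0.88 exactly.

```python
import random


def lambda_rm_between(f, n_total, m, rho):
    """Assumed noncentrality for a repeated-measures ANOVA, 'between factors' form.

    lambda = f**2 * u * N, with u = m / (1 + (m - 1) * rho).
    """
    u = m / (1 + (m - 1) * rho)
    return f * f * u * n_total


def f_test_power(lam, df1, df2, alpha=0.05, draws=100_000, seed=1):
    """Monte Carlo power of an F test with noncentrality lam (lam = 0 gives ~alpha)."""
    rng = random.Random(seed)

    def chi2(k):
        # A chi-square(k) variate is Gamma(shape=k/2, scale=2).
        return rng.gammavariate(k / 2, 2.0)

    def f_draw(nc):
        # Noncentral chi-square(df1, nc) = (Z + sqrt(nc))**2 + chi-square(df1 - 1).
        num = (rng.gauss(0.0, 1.0) + nc ** 0.5) ** 2
        if df1 > 1:
            num += chi2(df1 - 1)
        return (num / df1) / (chi2(df2) / df2)

    # Critical value: empirical (1 - alpha) quantile of the central F distribution.
    null = sorted(f_draw(0.0) for _ in range(draws))
    f_crit = null[int((1 - alpha) * draws)]
    # Power: share of noncentral F draws that exceed the critical value.
    return sum(f_draw(lam) > f_crit for _ in range(draws)) / draws
```

With six groups, one might call `f_test_power(lambda_rm_between(0.25, 160, 4, 0.5), 5, 154)`, taking df1 = k − 1 and df2 = N − k as further assumptions. Increasing the number of draws reduces simulation noise.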

Participants were included if they were aged 18 to 60 years, understood the task, and were able to recognize and click on the specified emotions on a laptop using a computer mouse. Individuals were excluded if they could not write or speak German or had an impairment of the hand or of vision.

Measuring Instruments

The measurements were divided into two sections. The first section collected demographic information and five questionnaires on participants' current health status. The second section was a computer task consisting of two sets of 42 pictures depicting basic emotions, without and with the lower face covered.

Questionnaires

Central Sensitization Inventory (CSI)

The CSI is a screening instrument that helps identify symptoms related to central sensitization or indicates the presence of a dysfunction related to central sensitization [28]. Part A consists of 25 multiple-choice items, each scored from zero to four (never, rarely, occasionally, often, always), giving a total score from 0 to 100. In this study, the total score of part A (central sensitization characteristics) was computed. Part B was not used; it only provides information on existing diagnoses in the domain of central sensitization. A score of 40 or more points has been reported to be indicative of central sensitization.
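The part A scoring described above reduces to a validated sum and a cut-off. The following is a minimal sketch assuming responses are already coded as integers 0 to 4; the function and constant names are illustrative, not part of any CSI software.

```python
CSI_PART_A_ITEMS = 25
CSI_CUTOFF = 40  # 40 or more points reported as indicative of central sensitization


def score_csi_part_a(responses):
    """Sum CSI part A (items coded 0 = never ... 4 = always); return (total, flag)."""
    if len(responses) != CSI_PART_A_ITEMS:
        raise ValueError(f"expected {CSI_PART_A_ITEMS} items, got {len(responses)}")
    if any(r not in range(5) for r in responses):
        raise ValueError("CSI items must be coded 0 to 4")
    total = sum(responses)  # possible range 0 to 100
    return total, total >= CSI_CUTOFF
```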

The Graded Chronic Pain Scale (GCPS) is a validated, standardized self-assessment tool used in clinical pain research and quality management. It provides a hierarchical classification into four grades (I to IV): grades I and II are regarded as slight limitation (functional chronic pain) and grades III and IV as strong limitation (dysfunctional chronic pain) [29]. In our study we used the validated German version and included volunteers classified as grade II, III, or IV in the pain group [30].

The Brief Pain Inventory (BPI) was developed as a quick and simple method for determining the level of pain and the extent to which pain interferes with the lives of patients [31]. It asks four questions on pain intensity and seven on pain interference, as well as four on the present pain experience, the location of pain, the medication or therapy used to relieve the pain, and the extent of the treatment outcome. Pain intensity (no pain to the most severe pain imaginable) and pain interference (no interference to complete interference) are rated on a scale of 0 to 10. The four pain severity responses are summed and divided by four, and the seven interference responses are summed and divided by seven, so each score ranges from 0 to 10 (11 possible points). If a respondent scores more than five points or answers more than four questions with pain, the test is deemed positive for pain-related disability. We used the validated German version of the BPI.
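The two composite scores and the positivity rule above can be sketched as follows. Two points are not specified in the text and are assumptions here, flagged in the code as well: a "question with pain" is counted as any rating above 0, and the five-point threshold is checked against either composite score.

```python
def score_bpi(intensity, interference):
    """Score the BPI following the rules described in the text.

    intensity: the four pain intensity ratings (0-10)
    interference: the seven pain interference ratings (0-10)
    Returns (severity, interference_score, positive_for_disability).
    """
    if len(intensity) != 4 or len(interference) != 7:
        raise ValueError("expected 4 intensity and 7 interference ratings")
    ratings = list(intensity) + list(interference)
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("BPI ratings must lie between 0 and 10")
    severity = sum(intensity) / 4                # mean of the intensity items
    interference_score = sum(interference) / 7   # mean of the interference items
    # Assumptions: a "question with pain" is any rating above 0, and the
    # five-point threshold applies to either composite score.
    questions_with_pain = sum(1 for r in ratings if r > 0)
    positive = max(severity, interference_score) > 5 or questions_with_pain > 4
    return severity, interference_score, positive
```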

Beck Depression Inventory (BDI). The BDI consists of 21 items that assess the frequency and severity of depressive symptoms. Each item is scored from zero to three points, so the maximum score is 63 points (severe depression); mild depression is indicated from 14 points. The BDI shows high reliability (Cronbach's alpha 0.92) and acceptable validity (0.73 to 0.96) for discriminating between depressed and non-depressed participants.

Toronto Alexithymia Scale (TAS-20). A subjective self-report questionnaire that allows reliable identification of alexithymic characteristics (i.e., difficulty identifying emotions, difficulty describing emotions, and an externally oriented thinking style) [32,33]. The 20 items are rated on a 5-point Likert scale, with answers ranging from "strongly agree" to "strongly disagree", and are summed to a maximum of 100 points. Scores below 51 indicate no alexithymia, scores of 51 to 60 indicate a borderline range in which alexithymia may be present, and scores of 61 or more indicate alexithymia [34].
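The three score bands above can be expressed as a small classifier. This is a minimal sketch that assumes item responses are coded 1 to 5 and that any reverse-keyed items have already been recoded, since the text does not describe item recoding.

```python
def classify_tas20(responses):
    """Sum 20 TAS-20 items (coded 1-5) and apply the cut-offs given in the text.

    Assumes reverse-keyed items have already been recoded.
    """
    if len(responses) != 20:
        raise ValueError("expected 20 items")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("items must be coded 1 to 5")
    total = sum(responses)  # possible range 20 to 100
    if total <= 50:
        band = "no alexithymia"
    elif total <= 60:
        band = "possible alexithymia"
    else:
        band = "alexithymia"
    return total, band
```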

Emotion Recognition Task (ERT)

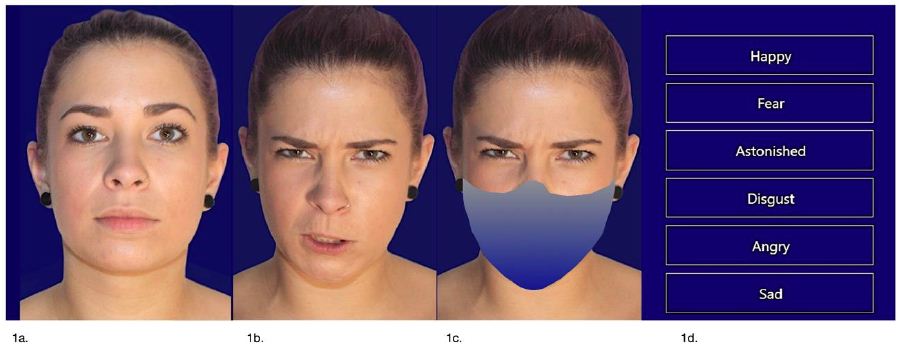

The CRAFTA Facial Recognition Test and Training software (www.myfacetraining.com) was used for this task, which was divided into two sections. The first section was the recognition of basic emotions (happiness, sadness, disgust, anger, fear, and surprise) without lower face covering. Participants chose the appropriate expression for each image by clicking on it with the mouse on the PC screen (maximum response time 5 s). Accuracy and response time were measured for a standardized sequence of 42 pictures. After this first task, participants rested for one minute and then completed the same standardized test with the lower face covered (i.e., a lower face mask) (section 2) (Figure 1).

Figure 1: Emotion Recognition Computer Task (ERT). Example of a morph from a neutral face (1a) to a basic emotion (in this case disgust), with and without lower face covering in the shape of a mask (1b and 1c). (1d) shows the choices the participant can click on. The computer program records the response time and the correct and incorrect choices for the 42 pictures.

Procedure

The study was run from November 2020 until June 2021, during the COVID-19 pandemic, when general legal obligations to wear masks were already in force in Germany. Volunteers were recruited randomly in mid-west Germany through physiotherapy clinics, (sports) organizations, and local universities, and via posters and advertisements. The procedure was carried out by three assessors (physical therapists) with more than five years of experience, who were calibrated through specific training. Written informed consent was obtained from each participant before the experimental session, and all data were collected anonymously. Participants were first informed about the aim of the study and then asked to sign a consent form and complete the questionnaires, followed by the emotion recognition task (ERT). Before the ERT, a one-minute explanation and a trial with 5 random images were mandatory. The ERT without and with lower face covering was then performed. A fourth (blinded) assessor anonymously acquired the data, classified the participants into a control group (CG) and a chronic pain group (PG), and carried out the data analysis.

Statistical Analysis

Data were entered into SPSS 26, and the emotions perceived by the CG and the PG with and without lower face covering were tabulated in a confusion matrix. The Chi² test was used to calculate statistical significance. Pearson's Chi-square test was used to determine differences between conditions for nominal data (e.g., gender). Mann-Whitney U tests were performed for ordinal and continuous data (e.g., age, BMI, questionnaire scores, response time), as the data were not normally distributed. The significance level was set at 5%.
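SPSS performs these tests internally; the sketch below only illustrates what they compute, using the standard library. It assumes large-sample approximations: the Mann-Whitney U test uses the normal approximation with a tie correction, and the Pearson Chi-square test is shown for a 2x2 table (for example correct vs. incorrect answers for one emotion in CG vs. PG), where df = 1 gives an exact closed-form p-value. SPSS output may differ slightly, for example when it reports exact p-values.

```python
import math


def _ranks(values):
    """1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected)."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = _ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie = sum(t ** 3 - t for t in counts.values())  # tie correction term
    var = n1 * n2 / 12 * ((n + 1) - tie / (n * (n - 1)))
    if var <= 0:
        raise ValueError("all observations are tied")
    z = (u1 - n1 * n2 / 2) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return u1, z, p


def chi_square_2x2(a, b, c, d):
    """Pearson Chi-square (no continuity correction) for [[a, b], [c, d]], df = 1."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denom
    p = math.erfc(math.sqrt(chi2 / 2))  # P(chi2_1 > x) = P(|Z| > sqrt(x))
    return chi2, p
```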

Results

Participants

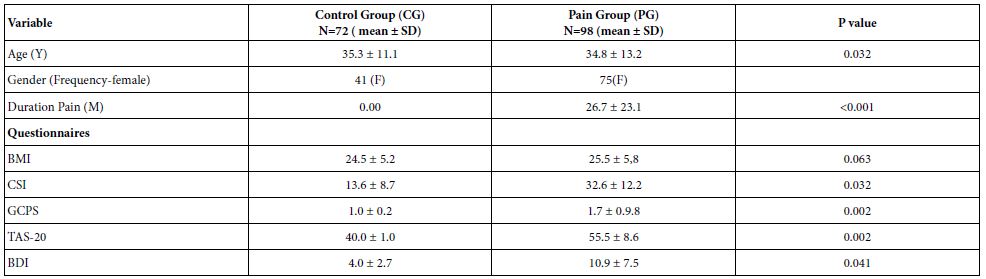

In total, 170 subjects were recruited and analyzed and divided into two groups. The control group (CG) did not report pain in their questionnaire responses. The second group (PG) included all subjects who were classified as having chronic pain on the basis of their questionnaire responses; medication use was not recorded. Table 1 summarizes the demographic data and questionnaire scores for both groups.

Table 1: Demographic data and mean questionnaire scores by group (control group (CG) and chronic pain group (PG)). Y = years, M = months, F = female.

Based on the questionnaire responses, participants were divided into two groups:

Asymptomatic Subjects (control group). Subjects whose answers identified them as not having pain (CSI < 40 points, GCPS grade I, BPI score below 5 points with fewer than 4 questions answered with pain, TAS-20 < 51 points, BDI < 14 points).

Subjects with chronic pain (pain group). Subjects who reported pain in their responses to all questionnaires (CSI > 40 points, GCPS grade II to IV, BPI score of 5 points or more with more than four questions answered with pain, TAS-20 > 51 points, BDI > 14 points) and whose pain had lasted longer than 3 months.
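Applied literally, the two sets of criteria above form a simple decision rule. The sketch below encodes them as stated; the container and field names are hypothetical. Because the criteria use strict inequalities on both sides (for example CSI < 40 for the CG and CSI > 40 for the PG), some profiles, such as a CSI of exactly 40, fall into neither group; the text does not say how such cases were handled, so the sketch labels them "unclassified".

```python
from dataclasses import dataclass


@dataclass
class QuestionnaireScores:
    """Hypothetical container; field names are illustrative, not from the study data."""
    csi: float               # CSI part A total (0-100)
    gcps_grade: int          # GCPS grade 1-4 (I-IV)
    bpi: float               # BPI score (0-10)
    bpi_pain_questions: int  # number of BPI questions answered with pain
    tas20: float             # TAS-20 total (20-100)
    bdi: float               # BDI total (0-63)
    pain_months: float       # pain duration in months (0 if no pain)


def assign_group(s: QuestionnaireScores) -> str:
    """Apply the stated CG/PG criteria literally; anything else is 'unclassified'."""
    is_control = (
        s.csi < 40
        and s.gcps_grade == 1
        and s.bpi < 5
        and s.bpi_pain_questions < 4
        and s.tas20 < 51
        and s.bdi < 14
    )
    is_pain = (
        s.csi > 40
        and 2 <= s.gcps_grade <= 4
        and s.bpi >= 5
        and s.bpi_pain_questions > 4
        and s.tas20 > 51
        and s.bdi > 14
        and s.pain_months > 3
    )
    if is_control:
        return "CG"
    if is_pain:
        return "PG"
    # e.g. CSI exactly 40 or mixed profiles: handling is not specified in the text
    return "unclassified"
```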

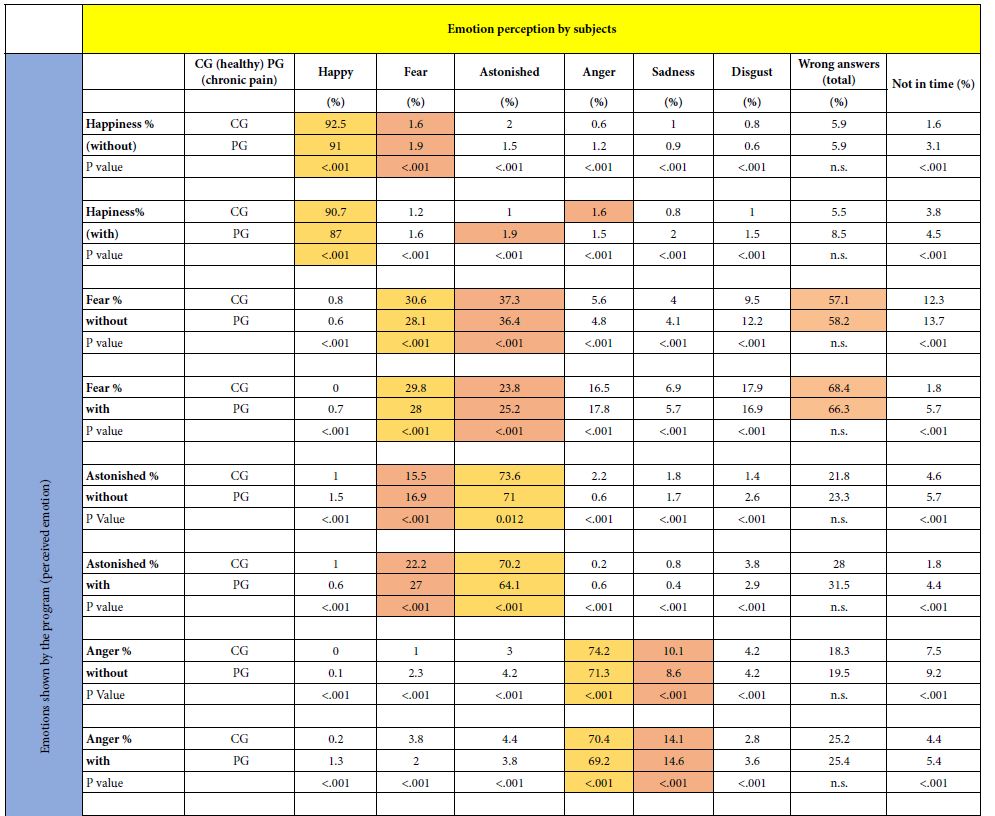

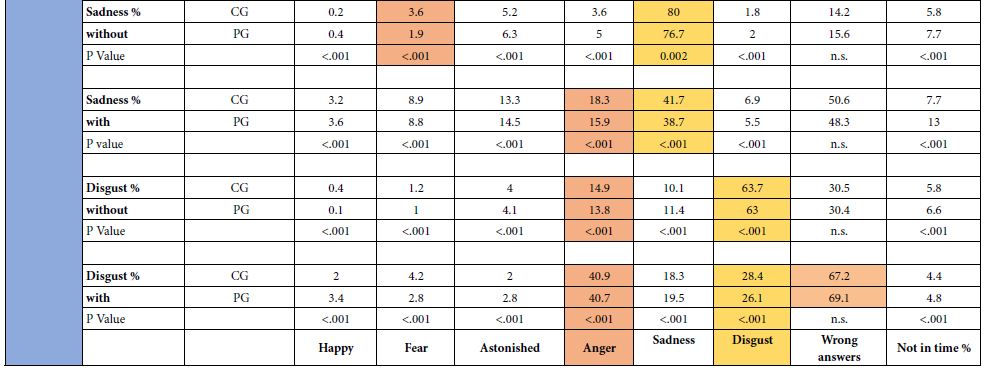

Accuracy of Emotion Recognition and Confusion

With this analysis we explored whether subjects with chronic pain were less accurate than asymptomatic subjects at distinguishing basic emotions, and which emotions they confused. We examined the data in a modified confusion matrix as recently described by Carbon et al. (Table 2), in which the emotions displayed by the program (expressed) are compared with the emotions chosen by the participants (perceived). Orange cells mark the participants' highest percentages, red cells the most frequent confusion for an emotion, and green cells the emotion most often chosen incorrectly.

Table 2: Confusion matrix of expressed and perceived emotions for the control group (CG) and the pain group (PG) with and without mask. Orange segments mark the highest scores; red segments indicate the most frequent confusion for an emotion.
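A row-normalized confusion matrix of the kind shown in Table 2 can be built from (expressed, perceived) answer pairs, with the most frequent confusion in each row read off as the largest off-diagonal cell. The following is a minimal sketch; the label list uses "surprise" for the emotion called "astonished" in the results, and, as an assumption, trials without a valid answer (for example after the 5 s limit) are left out of the row totals.

```python
from collections import Counter

EMOTIONS = ["happiness", "sadness", "disgust", "anger", "fear", "surprise"]


def confusion_matrix(trials, labels=EMOTIONS):
    """Row-normalized confusion matrix in percent.

    trials: iterable of (expressed, perceived) pairs, one per ERT picture.
    Rows are expressed emotions, columns perceived emotions. Pairs whose
    perceived label is not in `labels` (e.g. None for no answer) are ignored.
    """
    counts = Counter(trials)
    matrix = {}
    for expressed in labels:
        row_total = sum(counts[(expressed, p)] for p in labels)
        matrix[expressed] = {
            p: (100 * counts[(expressed, p)] / row_total if row_total else 0.0)
            for p in labels
        }
    return matrix


def most_frequent_confusion(row, expressed):
    """Most often chosen wrong answer for one expressed emotion."""
    wrong = {p: v for p, v in row.items() if p != expressed}
    return max(wrong, key=wrong.get)
```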

Without Lower Face Covering. In both groups, happiness was recognized best (CG: 92.5%, PG: 91%) and was most often confused with fear (CG: 1.6%, PG: 1.9%). Fear was recognized least in both groups (CG: 30.6%, PG: 28.1%) and was most often mistaken for astonishment (CG 37.8%, PG 36.4%). Fear also had the highest number of incorrect answers (CG 57.1%, PG 58.2%; highlighted in green in Table 2).

With Lower Face Covering. Happiness was recognized best in both groups (CG 90.7%, PG 87%). A small number of participants confused happiness with anger in the CG (1.6%) and with astonishment in the PG (1.9%). More strikingly, disgust was recognized much less often in both groups (CG 28.4%, PG 26.1%) and was mostly confused with anger (CG 40.9%, PG 40.7%).

Incorrectly Chosen Emotion. Our results suggest that, with and without lower face covering, "fear" is the emotion most often chosen incorrectly (without: CG 57.1%, PG 58.2%; with: CG 68.4%, PG 66.3%). "Disgust" was also clearly misjudged in both groups with lower face covering (CG 67.2%, PG 69.1%), but less so without it (CG 30.5%, PG 30.4%). These values are highlighted in green in Table 2.

Confused Emotions. In both groups, "disgust" was confused with "anger" in more than 40% of cases when the lower face was covered (CG 40.9%, PG 40.7%), clearly more than without covering (CG 14.9%, PG 13.8%). "Fear" was also clearly confused with "astonished" in both groups, both without (CG 37.3%, PG 36.4%) and with lower face covering (CG 23.8%, PG 25.2%).

Differences With and Without Face Covering in Both Groups and Answering Time

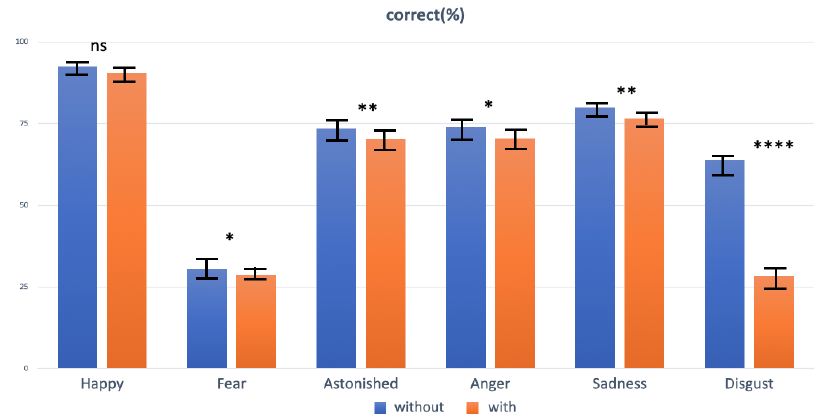

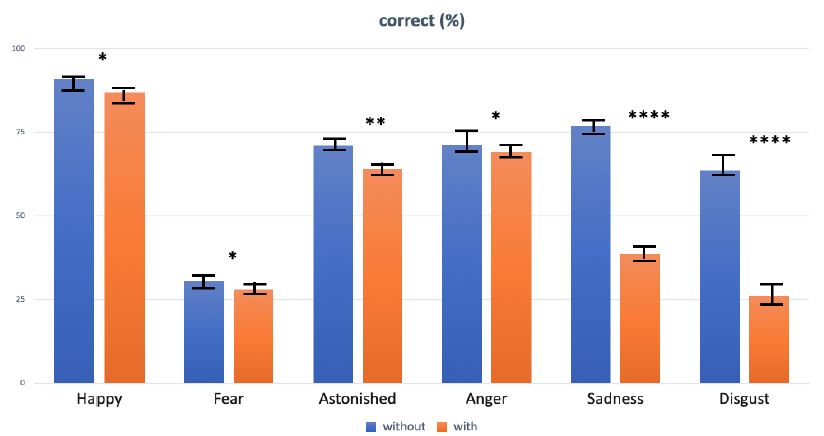

Figures 2 and 3 give an overview of the mean percentage of correctly recognized basic emotions with (red) and without covered lower face for the control group (CG) and the chronic pain group (PG). Between the CG and the PG, with and without face covering, there was a clear significant difference (p<0.001) for all basic emotions except happiness. In the PG, recognition of "sadness" and "disgust" differed very significantly between the conditions with and without lower face covering (Figure 3).

Average answer time. The average response time of the CG was 3.1 s (±0.8) both with and without face covering (p=0.2), and that of the PG was 3.2 s (±0.9) (p=0.3). There was no significant difference in response time between the CG and the PG with or without face covering (p=0.34, p=0.4).

Figure 2: Mean percentage of correctly recognized basic emotions without and with (red) lower face covering in the control group (CG) (n=72). Asterisks indicate statistical differences between the covered and uncovered conditions based on paired t-tests: *p < 0.05, **p < 0.01, ****p < 0.0001; ns, not significant.

Figure 3: Mean percentage of correctly recognized basic emotions without and with (red) lower face covering in the chronic pain group (PG) (n=98). Asterisks indicate statistical differences between the covered and uncovered conditions based on paired t-tests: *p < 0.05, **p < 0.01, ****p < 0.0001.

Discussion

In the present study we tested the impact of covering the lower face with imitated face masks on basic emotion recognition in a computer task. Participants with and without chronic pain were shown faces in a neutral state that morphed into one of six basic emotions (angry, disgusted, fearful, happy, astonished, and sad) in a standardized computer test. The variables tested were accuracy and time. Control group (CG): the results of our CG do not completely match those of Carbon (2020). Without a mask, fear was recognized 92.5% of the time in the Carbon study, but only 30.6% of the time in this study. Happy (Carbon 98.8% vs. 92.5%) and angry (Carbon 83.7% vs. 74.2%) were also recognized at different rates. With a mask, happy was recognized correctly 90.7% of the time in this work but only 74.2% of the time in the Carbon study. In the Carbon study, fear was still correctly recognized 93.5% of the time with a face mask, compared with only 29.8% in our study. Sadness, too, was recognized correctly 62.6% of the time in the Carbon study but 41.7% in ours. Recognition of disgust and anger in our CG was almost the same as in the Carbon study. Thus, the two studies show the same overall trend in accuracy across emotions, but not for all outcomes.

In both groups, sadness and disgust were significantly less recognizable with a covered lower face. A feature of these two emotions is pronounced asymmetric expression changes in the lower face [35]. "Astonished" was not tested in the Carbon study; in our study, the difference with and without lower face covering was also significant for astonished in both groups. In contrast to the Carbon study, the difference in happiness recognition with and without face covering was not significant in either group in our study (CG p=0.053, PG p=0.041).

The differences in results may be explained by differences in test set-up. Carbon's study used only photographs of the basic emotions, without the neutral state, from people of different ages and genders with and without an artificial mask, and had no time limit. In our study we used a computer program that morphed faces into basic emotions with and without a covered lower face, combined with a dual task: recognizing the emotion and doing so as quickly as possible. In our study, recognition of the neutral state was not measured.

Control Group (CG) versus Pain Group (PG): the results of the CG and the PG indicate that accuracy in emotion recognition was strongly reduced in both groups when the lower face was covered. This seems compatible with parts of the literature using other types of covering, for instance rigidly covering the mouth area with cardboard, the bubbles technique, or, much closer to the present study, block-based partial square face covering [36] or a shawl or cap. For fearful faces, as shown previously in the literature, the upper face, especially the uncovered eye region, was most relevant for judging someone's emotional state. In our chronic pain sample there was a significant difference from the control group in nearly all emotions except happiness (Figure 2), as well as a significant difference between the conditions with and without lower face covering (Figure 3). This observation suggests that people with chronic pain may have impairments in facial emotional communication, in both perception and expression, when faces are covered, for example by a surgical mask, especially for "sadness" and "disgust" [37-43].

Strength and Limitations

To the authors' knowledge, this is the first observational study of facial emotion recognition with the lower face covered in people with chronic pain. A strength is that the PG sample is a clearly defined primary or secondary chronic pain group, identified by questionnaires as suggested by the International Association for the Study of Pain (IASP) [37]. Medication use and medical diagnoses were therefore not recorded, which may increase the variability of the results within the PG, for example in the speed of recognition. The authors are aware of this, but because the literature increasingly regards chronic pain as a disease in itself, individual pain regions, medical diagnoses, and medication use were not considered. A limitation is that the lower face was not covered by a real mask but by a mask-shaped substitute made of plastic material, which may influence accuracy and time. On the other hand, the test was conducted in the same way in both groups.

Conclusion

- People in a chronic pain state are worse at emotion recognition, with and without lower face covering, than people without pain, but there is no difference in response time.

- Recognition of all basic emotions, especially sadness and disgust, is clearly reduced with lower face covering in the chronic pain group; disgust was often confused with anger, and fear with astonishment.

- These results support the need for facial emotion rehabilitation and training, which may contribute to appropriate non-verbal communication and quality of life in people with chronic pain.

- Future studies on the subclassification of chronic pain (use of medication, risk factors) and outcome studies of facial emotion training may clarify the influence of face coverings such as masks.

Acknowledgements

We thank Jetske Olde Oudhof and Karin Jungmann for their assistance in participant recruitment and assessment and Dr. Jennifer Nelsson for proofreading.

Conflict of Interest

There is no conflict of interest.

Authors’ Contributions

HP conceived the design, AG was in charge of data collection, and all authors participated in data analysis and discussed the findings. HP composed the initial draft of the manuscript. All authors contributed to the final version of the paper.

References

- Martinez AM (2017) Visual perception of facial expressions of emotion. Current Opinion in Psychology 17: 27-33. [crossref]

- Wang R, Li J, Fang H, Tian M, Liu J (2012) Individual differences in holistic processing predict face recognition ability. Psychological Science 23: 169-177.

- Rapcsak SZ (2019) Face Recognition. Current Neurology and Neuroscience Reports 19:

- Guo K (2012) Holistic gaze strategy to categorize facial expression of varying intensities. PLoS One 7: 8. [crossref]

- Smith ML, Cottrell GW, Gosselin F, Schyns PG (2005) Transmitting and decoding facial expressions. Psychological Science 16: 184-189.

- Patel V, Mazzaferro DM, Sarwer DB, Bartlett SP (2020) Beauty and the mask. Plastic and Reconstructive Surgery Global Open 8: 8.

- Maestripieri D, Henry A, Nickels N (2017) Explaining financial and prosocial biases in favor of attractive people: Interdisciplinary perspectives from economics, social psychology, and evolutionary psychology. Behavioral and Brain Sciences 40.

- Ross ED, Prodan CI, Monnot M (2007) Human facial expressions are organized across the upper-lower facial axis. The Neuroscientist 13: 433-446.

- Birmingham E, Meixner T, Iarocci G, Kanan C, Smilek D, et al. (2013) The moving window technique: a window into developmental changes in attention during facial emotion recognition. Child Development 84: 1407-1424. [crossref]

- Marini M, Ansani A, Paglieri F, Caruana F, Viola M (2021) The impact of facemasks on emotion recognition, trust attribution and re-identification. Scientific Reports 11: 1-14. [crossref]

- Bruce V, Young A (1986) Understanding face recognition. British Journal of Psychology 77: 305-327. [crossref]

- Adolphs R (2002) Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews 1: 21-62. [crossref]

- Blais C, Roy C, Fiset D, Arguin M, Gosselin F (2012) The eyes are not the window to basic emotions. Neuropsychologia 50: 2830-2838.

- Roberson D, Kikutani M, Döge P, Whitaker L, Majid A (2012) Shades of emotion: What the addition of sunglasses or masks to faces reveals about the development of facial expression processing. Cognition 125: 195-206.

- Wegrzyn M, Vogt M, Kireclioglu B, Schneider J, Kissler J (2017) Mapping the emotional face: How individual face parts contribute to successful emotion recognition. PLoS One 12: 5. [crossref]

- Franzoni V, Biondi G, Perri D, Gervasi O (2020) Enhancing Mouth-Based Emotion Recognition Using Transfer Learning. Sensors 20: 5222. [crossref]

- Cohen M, Quintner J, Buchanan D (2013) Is chronic pain a disease? Pain Medicine. Article first published online 7. Cohen J (1988) Statistical Power Analysis for the Behavioral Sciences.

- Treede RD, Rief W, Barke A, Aziz Q, Bennett MI, et al. (2019) Chronic pain as a symptom or a disease: the IASP Classification of Chronic Pain for the International Classification of Diseases (ICD-11). Pain 160: 19-27. [crossref]

- von Baeyer CL, Champion GD (2011) Commentary: multiple pains as functional pain syndromes. J Pediatr Psychol 36: 433-437. [crossref]

- Saariaho AS, Saariaho TH, Mattila AK, Ohtonen P, Joukamaa MI, et al. (2017) Alexithymia and depression in the recovery of chronic pain patients: a follow-up study. Nordic Journal of Psychiatry 71: 262-269. [crossref]

- Taylor GJ (1984) Alexithymia: concept, measurement, and implications for treatment. The American Journal of Psychiatry 141: 725-732. [crossref]

- Di Tella M, Castelli L (2016) Alexithymia in chronic pain disorders. Current Rheumatology Reports 18: 1-9 [crossref]

- Schwoebel J, Friedman R, Duda N, Coslett HB (2001) Pain and the body schema: evidence for peripheral effects on mental representations of movement. Brain 124: 2098-2104.

- Moseley GL, Flo H (2012) Targeting cortical representations in treatment of the chronic pain: a review. Neurorehabilitation and Neural Repair 26: 646-652. [crossref]

- von Korn K, Richter M, von Piekartz H (2014) Einschränkungen in der Erkennung von Basisemotionen bei Patienten mit chronischem Kreuzschmerz. Der Schmerz 28: 391-397.

- Haas J, Eichhammer P, Traue HC, Hoffmann H, Behr M, et al. (2013) Alexithymic and somatisation scores in patients with temporomandibular pain disorder correlate with deficits in facial emotion recognition. Journal of Oral Rehabilitation 40: 81-90. [crossref]

- Faul F, Erdfelder E, Lang AG, Buchner A (2007) G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods 39: 175-191. [crossref]

- Neblett R (2018) The central sensitization inventory: A user’s manual. Journal of Applied Biobehavioral Research. 23: e12123.

- Von Korff M, Ormel J, Keefe FJ, Dworkin SF (1992) Grading the severity of chronic pain. Pain 50: 133-149. [crossref]

- Bodéré C, Téa SH, Giroux-Metges MA, Woda A (2005) Activity of masticatory muscles in subjects with different orofacial pain conditions. Pain 116: 33-41.

- Cleeland CS, Nakamura Y, Mendoza TR, Edwards KR, Douglas J, et al. (1996) Dimensions of the impact of cancer pain in a four country sample: new information from multidimensional scaling. Pain 67: 267-273. [crossref]

- Kupfer J, Brosig B, Brähler E (2000) Testing and validation of the 26-Item Toronto Alexithymia Scale in a representative population sample. Zeitschrift fur Psychosomatische Medizin und Psychotherapie 46: 368-384. [crossref]

- Kupfer J, Brosig B, Brähler E (2001) Toronto-Alexithymie-Skala-26 (TAS-26) Hogrefe.

- Veirman E, Van Ryckeghem DM, Verleysen G, De Paepe AL, Crombez G (2021) What do alexithymia items measure? A discriminant content validity study of the Toronto alexithymia scale–20. PeerJ 9: e11639. [crossref]

- Bassili JN (1979) Emotion recognition: the role of facial movement and the relative importance of upper and lower areas of the face. Journal of Personality and Social Psychology 37: 2049. [crossref]

- Fischer M, Ekenel HK, Stiefelhagen R (2012) Analysis of partial least squares for pose-invariant face recognition. Fifth International Conference on Biometrics: Theory, Applications and Systems (BTAS).

- Nicholas M, Vlaeyen JW, Rief W, Barke A, Aziz Q, et al. (2019) The IASP classification of chronic pain for ICD-11: chronic primary pain. Pain 160: 28-37. [crossref]

- Bushnell MC, Čeko M, Low LA (2013) Cognitive and emotional control of pain and its disruption in chronic pain. Nature Reviews Neuroscience 14: 502-511. [crossref]

- Franzoni V, Biondi G, Perri D, Gervasi O (2020) Enhancing Mouth-Based Emotion Recognition Using Transfer Learning. Sensors 20: 5222. [crossref]

- Faul F, Erdfelder E, Lang AG, Buchner A (2007) G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods 39: 175-191. [crossref]

- Maestripieri D, Henry A, Nickels N (2017) Explaining financial and prosocial biases in favor of attractive people: Interdisciplinary perspectives from economics, social psychology, and evolutionary psychology. Behavioral and Brain Sciences 40. [crossref]

- von Piekartz H, Wallwork SB, Mohr G, Butler DS, Moseley GL (2015) People with chronic facial pain perform worse than controls at a facial emotion recognition task, but it is not all about the emotion. Journal of Oral Rehabilitation 42: 243-250.

- Wallwork SB, Butler DS, Fulton I, Stewart H, Darmawan I, et al. (2013) Left/right neck rotation judgments are affected by age, gender, handedness and image rotation. Manual Therapy 18: 225-230. [crossref]